Conduent has partnered with Microsoft to use Microsoft Azure OpenAI to underpin its GenAI innovation initiatives with clients.

Its GenAI journey includes:

- Selecting use cases focused on improving quality, throughput, and cycle times

- Adopting pilots in healthcare claims adjudication, fraud detection, and customer service enhancement

- Subsequently, moving to MVPs and industrializing use cases.

Use Case Selection Criteria Focused on Improving Quality, Throughput, and Cycle Times

Conduent recognizes that GenAI is an expensive technology and that its adoption will typically incur costs in changing existing processes and technology stacks. This makes it difficult to build GenAI business cases on already optimized operations based solely on cost reduction. Hence, Conduent is focusing on “innovative additive opportunities” to make the business case work.

The outcomes that Conduent is targeting from GenAI initiatives are:

- Improved quality, reducing error rates across standardized workflows

- Increased throughput of business process transactions

- Faster cycle times by consolidating value chain steps for faster processing.

At the same time, Conduent’s client relationships tend to involve relatively deep end-to-end service provision across a range of processes rather than single-process support. These combinations of services are tailored for specific clients rather than being standalone commoditized services.

Accordingly, Conduent’s document management services and CX services are generally supplied as part of a wider capability rather than as standalone services. Within this pattern, most of its solutions include elements of:

- Document management, including summarization & analysis, and extracting and contextualizing text within images

- User interaction/call center with multiple clients across various sectors, including enhancing agent assist, virtual agent, and call center agent assessment

- Search & analytics.

Conduent regards these three areas as core competencies, and all its GenAI use cases for the immediate future will fall into one of them and will be capable of delivering improved quality, throughput, and cycle times.

Initial GenAI PoCs Focus on Healthcare Claims Adjudication, Fraud Detection, and Customer Service Enhancement

Conduent has announced three GenAI pilot areas covering healthcare claims adjudication, state government program fraud detection, and customer service enhancement.

Conduent is a major provider of healthcare claims adjudication services. Here, it is working on a PoC with several healthcare clients to apply GenAI to reduce the error rate in data extraction and achieve faster cycle times in claims adjudication. GenAI is being used within document management to summarize highly unstructured documents, such as appeals documents and medical records, using its contextualization capabilities, including image-to-text. The technologies used are Azure AI Document Intelligence and Azure OpenAI Service.

Secondly, Conduent is working on a fraud detection PoC to support social programs in the U.S. state government sector. This PoC uses GenAI for search & analytics across multiple structured and unstructured data sets to increase the volume and speed of fraud detection. The technologies used here are Azure Data Factory and Azure OpenAI Service.

Finally, Conduent is using GenAI to enhance the use of agent assist and virtual agents by training virtual agents on existing data so that they can be deployed much faster.

Moving to MVPs and Industrializing GenAI Use Cases

Conduent initially undertook PoCs in the above areas to get both Conduent and the client comfortable with the results of applying GenAI and prove the use case. The PoC process is iterative and very granular, and Conduent perceives that organizations need to be extremely prescriptive to get the right results. This typically means defining the specific inputs and outputs of the use case very tightly, including defining what GenAI should do in the absence of individual inputs.
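As an illustration of what defining inputs and outputs "very tightly" can look like, the following is a minimal sketch, not Conduent's implementation. The field names, the `needs_review` status, and the `check_inputs` helper are all hypothetical; the point is only that the behavior for an absent input is decided up front rather than left to the model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SummaryRequest:
    """Hypothetical inputs for a document-summarization use case."""
    claim_id: Optional[str]
    document_text: Optional[str]


@dataclass(frozen=True)
class SummaryResult:
    """Hypothetical output contract: a status plus either a summary or a reason."""
    status: str                    # "ready" or "needs_review"
    reason: Optional[str] = None


def check_inputs(request: SummaryRequest) -> SummaryResult:
    """Decide what happens when an individual input is absent.

    Assumption: a missing or empty input routes the case to a person
    instead of letting the model guess.
    """
    required = (("claim_id", request.claim_id),
                ("document_text", request.document_text))
    missing = [name for name, value in required if not value]
    if missing:
        return SummaryResult("needs_review", "missing: " + ", ".join(missing))
    return SummaryResult("ready")
```

Here a request without document text never reaches the model; it comes back as `needs_review` with the missing field named.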

Conduent is now moving towards MVPs and building client business cases in several of these pilots, including some in the document management space. Since Conduent has taken a horizontal approach to its use case selection, many of these have the potential to scale across multiple industry segments and clients.

In addition, Conduent provides BPaaS services to many of its clients, so it is looking to embed proven GenAI use cases in its proprietary technology platforms.

Existing use cases are business unit-sponsored but curated centrally, with the center providing enablement and cross-pollination across business units. However, the intention is to incubate future GenAI capability within individual business units.


Capgemini has launched a new digital transformation service, One Operations, with the specific goal of driving client revenue growth.

One Operations: Key Principles

Some of One Operations’ principles, such as introducing benchmark-driven best practice operations models, taking an end-to-end approach to operations across silos, and using co-invested innovation funds, are relatively well established in the industry. However, what is new is building on these principles to incorporate an overriding focus on delivering revenue growth. The business case for a One Operations assignment focuses on facilitating the client’s revenue growth and taking a B2B2C approach focused on the end customer, emphasizing the delivery of insights that enable client personnel to make earlier decisions focused on the enterprise’s customers.

Capgemini’s One Operations account teams involve consulting and operations working together, with Capgemini Invent contributing design and consulting and the operational RUN organization provided by Capgemini’s Business Services global business line.

A One Operations philosophy is implemented across the client organization and Capgemini through shared targets, which reduce vendor/client friction, and co-invested innovation funds. Assignments involve setting joint targets, with a continuously replenished co-invested innovation fund of ~10–15% of Capgemini revenues used to fund digital transformation.

One Operations is very industry-focused, and Capgemini is initially targeting selected clients within the CPG sector, looking to assist them in growing within an individual country or small group of countries by localizing within their global initiatives. The key to this approach is demonstrating to clients that it understands and can support both the ‘grow’ and ‘run’ elements of their businesses and having an outcome-based conversation. Capgemini is typically looking to enable enterprises to achieve 4X growth by connecting the sales organization to the supply chain.

Assignments commence with working sessions brainstorming the possibilities with key decision-makers. The One Operations client team is jointly led by a full-time executive from Capgemini Invent and an executive from Capgemini’s Business Services. The Capgemini Invent executive remains part of the One Operations client team until go-live. The appropriate business sector expertise is drawn more widely from across the Capgemini group.

One Operations assignments typically have three phases:

- Deployment planning (3–6 months) to understand the processes and associated costs and create the business case

- Deployment (6–15 months) to create the ‘day one’ operating model

- Sustain, involving go-live and continuous improvement.

At this stage, Capgemini has two live One Operations assignments with further discussions taking place with clients.

Using End-to-End Process Integration to Speed Up Growth-Oriented Insights

Capgemini’s One Operations has three key design principles:

- Re-inventing the organization to embed a growth mindset, by reducing business operations complexity and enabling an AI-augmented workforce to focus on their customers and higher-value services

- Increasing the level of end-to-end integration by improving data accuracy and incorporating AI to achieve ‘touchless forecasting & planning’ and enable better decisions and speed of innovation. ‘Frictionless’ end-to-end integration is used to support more connected decisions and planning across the value chain

- Transforming at speed and scale.

These transformations involve:

- Shaping the strategic transformation agenda through defining the target operating model based on peer benchmarks and using standardized operating model design, assets, and accelerators

- Using a digital-first framework incorporating One Operations pre-configured digital process evaluation and digital twins

- Deploying D-GEM technology accelerators, ranging from platforms to microtools and including AI-augmented workforce solutions and Capgemini IP such as Tran$4orm

- Augmented operations using Capgemini Business Services.

Changing the mindset within the enterprise involves freeing personnel from tactical transactional activities and providing relevant information supporting their new goals.

Capgemini aims to achieve the growth mindset in client enterprises by enabling an integrated end-to-end view from sales to delivery, facilitating teams with digital tools for process execution and growth-oriented data insights. Within this growth focus, Capgemini offers an omnichannel model to drive sales, augmented teams to enable better customer interactions, predictive technology to identify the next best customer actions, and data orchestration to reduce customer friction.

One Operations also enables touchless planning to improve forecast accuracy, increase the order fill rate, reduce time spent planning promotions, and accelerate cash collections to reduce DSO. Improving promotions accuracy and product availability is also key to revenue growth within CPG and retail environments.

Shortening Forecasting Process & Enhancing Quality of Promotional Decisions: Keys to Growth in CPG

The overriding aim within One Operations is to free enterprise employees to focus on their customers and business growth. In one example, Capgemini is looking to assist an enterprise in increasing its sales within one geography from ~$1bn to $4bn.

The organization needed to free up its operational energies to focus on growth and create an insight-driven consumer-first mindset. However, the organization faced the following issues:

- 70% of its planning effort was spent analyzing past performance, and ~100 touches were required to deliver a monthly forecast

- Order processing efficiency was below the industry average

- Approx. 30% of its trucks were leaving the warehouse half-empty

- Launching products was taking longer than expected.

Capgemini took a multidisciplinary approach end-to-end across plan-to-cash. One key to growth is the provision of timely information. Capgemini is aiming to improve the transparency of business decisions. For example, the company has rationalized the coding of PoS data so that it can be directly interfaced with forecasting, shortening the forecasting process from weeks to days and enhancing the quality of promotional decisions.

Capgemini also implemented One Operations, leveraging D-GEM to develop a best-in-class operating model, resulting in a €150m increase in revenue, a 15% increase in forecasting accuracy, a 50% decrease in time spent on setting up marketing promotions, and a 20% increase in order fulfillment rate.


Digital transformation and the associated adoption of Intelligent Process Automation (IPA) remain at an all-time high. This is to be encouraged, and enterprises are now reinventing their services and delivery at a record pace. Consequently, enterprise operations and service delivery are increasingly becoming hybrid, with delivery handled by tightly integrated combinations of personnel and automations.

However, the danger with these types of transformation is the omnipresent risk in intelligent process automation projects of putting the technology first, regarding people as secondary considerations, and alienating the workforce through reactive communication and training programs. As many major IT projects have discovered over the decades, the failure to adopt professional organizational change management procedures can lead to staff demotivation, poor system adoption, and significantly impaired ROI.

The greater the organizational transformation, the greater the need for professional organizational change management. This requires high workforce-centricity and taking a structured approach to employee change management.

In the light of this trend, NelsonHall's John Willmott interviewed Capgemini's Marek Sowa on the company’s approach to organizational change management.

JW: Marek, what do you see as the difference between organizational change management and employee communication?

MS: Employee communication tends to be seen as communicating a top-down "solution" to employees, whereas organizational change management is all about empowering employees and making them part of the solution at an individual level.

JW: What are the best practices for successful organizational change management?

MS: Capgemini has identified three best practices for successful organizational change management, namely integrated OCM, active and visible sponsorship, and developing a tailored case for change:

- Integrated OCM – OCM will be most effective when integrated with project management and involved in the project right from the planning/defining phase. It is critical that OCM is regarded as an integral component of organizational transformation and not as a communications vehicle to be bolted on to the end of the roll-out.

- Active and visible sponsorship – C-level executives should become program sponsors and provide leadership in creating a new but safe environment for employees to become familiar with new tools and learn different practices. Throughout the project, leaders should make it a top priority to prove their commitment to the transformation process, reward risk-taking, and incorporate new behaviors into the organization's day-to-day operations.

- Tailored case for change – The new solution should be made desirable and relevant for employees by presenting the change vision, outlining the organization's goals, and illustrating how the solution will help employees achieve them. It is critical that the case for change is aspirational, using evidence based on real data and a compelling vision, and that employees are made to feel part of the solution rather than threatened by technological change.

JW: So how should organizations make this approach relevant at the workgroup and individual level?

MS: A key step in achieving the goals of organizational change management is identifying and understanding all the units and personnel in the organization that will be impacted both directly and indirectly by the transformation. Each stakeholder or stakeholder group will likely find itself in a different place when it comes to perspective, concerns, and willingness to accept new ways of working. It is critical to involve each group in shaping and driving the transformation. One useful concept in OCM for achieving this is WIIFM (What's In It For Me), with WIIFM identified at a granular level for each stakeholder group.

Much of the benefit and expected ROI is tied to people accepting and taking ownership of the new approach and changing their existing ways of working. Successfully deployed OCM motivates personnel by empowering employees across the organization to improve and refine the new solution continually, stimulating revenue growth and securing ROI. People need to be aware both of how the new solution is changing their work and of their active role in driving it; in doing so, they actively make the organization a "powerhouse" for continuous innovation.

How an enterprise embeds change across its various silos is very important. In fact, in the context of AI, automation is not only about adopting new tools and software but mostly about changing the way the enterprise's personnel think, operate, and do business.

JW: How do you overcome employees' natural fear of new technology?

MS: To generate enthusiasm within the organization while avoiding making the vision seem unattainable or scary, enterprises need to frame and sell transformations incorporating, for example, AI as evolutions of something the employees are doing already: not merely as "just the next logical step" but as reinventions of the whole process, from both the business and the experience perspective. They need to retain the familiarity that gives people comfort and confidence while reassuring them that the new tool/solution adds to their existing capability, allowing them to fulfill their true potential, something that is not automatable.


For some time, life & annuities carriers have suffered from a multitude of legacy platforms, with each implemented to handle a particular style of product that was either not handled by its predecessor or was added through the acquisition of a set of blocks from another carrier. The resulting stable of platforms has always been expensive to maintain. In recent years, this has been compounded by the increasing importance of digital customer experience and the ability to launch new products quickly.

The pandemic has further emphasized these needs, with consumers increasingly moving online, a need for new types of insurance products, and the vast majority of companies increasing their digital transformation emphasis. While most of Infosys McCamish’s current pipeline is driven by mergers & acquisitions and by platform rationalization and optimization to modernize legacy environments, it has also onboarded new clients to provide end-to-end services for open blocks, becoming a viable partner for organizations looking to expand their new business pipeline.

Infosys McCamish Focuses on Client Interaction

Indeed, while life companies need to launch new products at speed, insurance product functionality is now increasingly taken as table stakes. Life & annuities producers are now much more focused on client interaction functionality. This includes the omnichannel experience and the ability to deliver a zero-touch digital engagement, incorporating, for example, machine learning to deliver straight-through processing and next-best actions.

In line with these requirements, Infosys McCamish has taken a 3-tiered approach:

- For policy owners & agents: aiming for zero-touch, omnichannel, and responsive design via portals, e-delivery, e-sign, smart video, SMS notify, and chatbots

- For operations: aiming for ‘one & done’ via the use of workflow, dashboards, content management, and document management

- Core administration functionality: aiming for seamless integration via APIs together with an extensive product library.

Infosys McCamish’s preference is to convert policies from client legacy platforms to its own VPAS platform. Its conversion accelerator assesses data cleanliness and produces balance and control reports before moving the policy data to VPAS. Not all data is moved to VPAS, with data beyond the reinstatement period being moved to a separate source data repository. Infosys McCamish will aim to have 13–24 months of re-processable data on its platform, converting all the history as it was processed on the original platform so that in the future, it is possible to view exactly what happened on the prior platform.

VPAS supports a wide range of life & annuity products, including term life, traditional life, universal life, deferred annuities, immediate annuities, and flexible spending accounts, and Infosys McCamish estimates that on mapping a carrier’s current products with the current configurations in VPAS, there is typically around 97% full compatibility. VPAS currently supports ~40m policies across 22 distinct product families.

However, where necessary, or where conversion for some policy types is impossible, Infosys McCamish can also wrap its customer experience tools around legacy insurance platforms to provide a common, digital customer experience. Infosys McCamish platforms make extensive use of an API library that supports synchronous and asynchronous communication between Infosys McCamish systems and customer systems.

Incorporating “Smart Video” into the Customer Experience

Infosys McCamish has enhanced its customer experience to enable policy/contract owners to go beyond viewing policies online and transact in real-time, further introducing:

- Mobile App and chatbot functionality

- Smart video, which uses APIs to extract data and present it to the customer in video form

- Wearables to support wellness products.

Customers can view their billing and premium information and obtain policy quotes online, with personalized smart video used to enhance the customer experience. They can also initiate policy surrenders online. Depending on carrier policy, surrenders to a certain value are handled automatically, with higher value surrenders being passed to a senior person for verification. Similarly, if a customer is seeking to extend their coverage online, the request is routed by the workflow to an underwriter or senior manager. DocuSign is used to facilitate the use of e-signatures rather than paper documents. All correspondence can be viewed online by customers, with AI-enabled web chat used to support customer queries.
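The surrender-handling rule described above can be sketched as a simple threshold check. This is an illustrative sketch only, not VPAS code: the function name, the queue labels, and the `10000` limit are hypothetical, and in practice the limit would come from each carrier's policy.

```python
from decimal import Decimal

# Hypothetical carrier-defined limit; the source only says "a certain value".
AUTO_SURRENDER_LIMIT = Decimal("10000")


def route_surrender(amount: Decimal,
                    auto_limit: Decimal = AUTO_SURRENDER_LIMIT) -> str:
    """Return the queue for an online surrender request.

    Requests up to the carrier's limit are handled automatically;
    anything above it goes to a senior person for verification.
    """
    if amount <= 0:
        raise ValueError("surrender amount must be positive")
    return "automatic" if amount <= auto_limit else "senior_verification"
```

With the assumed limit, `route_surrender(Decimal("2500"))` returns `"automatic"` and `route_surrender(Decimal("25000"))` returns `"senior_verification"`.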

Digital adoption depends on carrier policy and is running at ~25%, with customers being prompted to use digital in all correspondence. Single-touch and no-touch processing account for ~75% of transactions.

Workflow & Dashboards Guiding Agents to Reduce Time to Onboard

Infosys McCamish has integrated BPM, workflow, and low-code development into the back-office and call center service layers, giving operations inbuilt automation that increases straight-through processing and reduces the opportunities for manual errors. It incorporates business rules so that data is keyed only once; for example, a relevant customer update applied to one policy is applied across all of that customer’s policies.
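The "key once" rule can be pictured as a single update fanned out to every policy held by the same customer. The sketch below is a hypothetical illustration rather than the platform's actual rule engine; the `Policy` fields and the `apply_address_update` helper are assumed names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Policy:
    """Hypothetical, minimal policy record."""
    policy_id: str
    customer_id: str
    address: str


def apply_address_update(policies: List[Policy], customer_id: str,
                         new_address: str) -> int:
    """Apply one keyed address change to all of a customer's policies.

    Returns the number of policy records that were changed.
    """
    changed = 0
    for policy in policies:
        if policy.customer_id == customer_id and policy.address != new_address:
            policy.address = new_address
            changed += 1
    return changed
```

An operator keys the new address once, and every policy for that customer picks it up, while other customers' policies are left untouched.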

The VPAS customer service work desk is built on Pega, with the workflow configured for contact center and back-office services and supporting the customer and agent self-service portals.

The agent dashboard is dynamic with the view shown based on the agent role, and the call center dashboard provides drill-downs on service requests by type, SLA performance details such as average handling times, and the full audit trails of each transaction.

The workflow also guides the call center agent through the steps in a transaction, provides scripting, and uses AI to recommend additional actions when communicating with a customer. This improves the quality of each interaction and significantly reduces the time taken to train new agents.

The above is supported by experience enablers underpinned by the data warehouse, which is updated in real-time as changes are made in the policy administration system. The data warehouse is accessed via APIs by Infosys Nia analytics or third-party tools such as PowerBI or Tableau.

Product Configuration Based on Cloning Existing Products

New products are typically created within the product management module by cloning an existing product or template, together with its business rules, and then customizing it, for example to add or remove certain features or coverages, rather than by creating new product features and functionality.

VPAS new business supports digital new business, including E-App and underwriting case management, and integrations with other new business platforms such as iPipeline and FireLight.

Agent Management & Compensation Increasingly Bundled with Product Administration

In addition to the VPAS life and annuity product administration system, Infosys McCamish’s life & annuity platforms also include PMACS, a producer management & compensation system, supporting agent onboarding, licensing, and commission management.

Infosys McCamish is experiencing a greater requirement for end-to-end capability, with PMACS increasingly being bundled with VPAS. The emphasis within PMACS has moved beyond commission management, where the system shows the agent how each commission was calculated, to agent onboarding, licensing, and appointments, allowing agents to view their pipelines and their client policy portfolios.

PMACS has also moved beyond supporting life & annuities and group & critical illness to support property & casualty.

Summary

Infosys McCamish is increasingly looking to assist life & annuities carriers in the adoption of modern digital platforms, and its VPAS ecosystem emphasizes:

- Digitalization, with a high emphasis on separation of systems of engagement from systems of record

- Componentization, to facilitate low impact enhancements and speed to market

- Self-service for both customers and agents, in support of zero-touch processing and multi-channel access

- Integrated BPM and workflow and low-code development in support of the back-office and call center service layers

- Use of CI/CD to identify the impact that changes in one component will have on other software components.

John Willmott and Rachael Stormonth


The pandemic has changed organizations’ attitudes towards the need for change, greatly increasing their emphasis on adopting new digital process models and digital transformation. Partly this is driven by the need to enhance their transactional efficiency and effectiveness rapidly, but at least equally importantly, it has brought a much greater requirement for real-time information and analytics to drive the business. All these pressures are keenly felt within the finance department.

WNS introduces Quote-to-Sustain

In response, WNS has looked to reinvent order-to-cash in the form of Quote-to-Sustain. Some of the issues that WNS is aiming to address with its Quote-to-Sustain offering include:

- Improving billing timeliness and accuracy by improving the integration and consistency of data between quotations, digital contracts, order management & fulfillment, and billing systems

- Maximizing revenue by releasing credit holds for good customers

- Protecting the enterprise with more dynamic credit control than periodic credit review

- Improving collections by cleansing master data in real-time.

In addition to delivering enhanced end-to-end visibility, stakeholder experience, and analytics, WNS has also reimagined its Quote-to-Sustain service to deliver greater variability in F&A process costs as business volumes fluctuate and become more unpredictable as a result of the pandemic. Its new Quote-to-Sustain offering bundles technology and services and allows clients to “pay by the drink”.

Specifically, the goal is for clients to remain cash neutral and, subject to some volume commitment, to pay only for transactions, with a decrease in costs emerging from year two onwards. WNS funds all change management.

Quote-to-Sustain modules

The Quote-to-Sustain module structure is:

- Quote-to-Order: consisting of unified master data, cognitive credit, digital contracts, and smart orders

- Bill-to-Cash: consisting of integrated billing, intelligent collections, predictive dispute management, touchless cash applications, and predictive deduction management

- Report-to-Sustain: consisting of digital dashboards, botified queries, analytics-as-a-service, and revenue assurance.

Each of these modules takes the form of a system of engagement sitting on top of the client’s existing ERP systems and systems of record.

In this respect, the use of unified master data is important in bringing together the various commercial and financial elements from multiple databases to ensure accuracy, for example, in billing the right person and identifying the appropriate person for each type of query. The unified master data aims to be a single source of the truth using data authentication from external sources and providing an element of real-time data cleansing.

WNS’ cognitive credit offering aims to take credit management beyond the periodic review of credit bureau reports and base its recommendations for credit eligibility on its own analyses of financial ratios, customer behavior (including any changes in payment pattern), and news triggers from external sources. WNS believes this approach to be particularly effective in addressing credit management within small businesses. The service incorporates technology from HighRadius and Emagia.

WNS’ digital contracts and smart orders modules utilize its Skense and Agilius platforms to combine and analyze data from various sources and integrate quotations, orders, contracts, and billing to reduce the errors that typically arise between disparate sources of information.

WNS’ Revenue Assurance and Analytics modules include some industry-specific modules to monitor and minimize revenue dilution, using analytics for improved collections and to reduce revenue losses arising from upstream process errors.

Quote-to-Sustain adoption plan

WNS has always approached F&A from a sector-specific viewpoint. Having developed all the modules within Quote-to-Sustain, WNS is now in the process of integrating this capability with its industry-specific processes in line with client demand. Powered by an exclusive partnership with EvoluteIQ, WNS’ domain-led hyperautomation platform suite is designed to accelerate the adoption of process automation and drive enterprise-wide digital transformation. The sectors WNS will focus on are the ones where it has already developed industry-specific expertise and IP and include airlines, travel agencies, trucking, shipping & logistics, insurance, telecom, media, CPG, manufacturing, and utilities.

Nonetheless, the initial clients of WNS Quote-to-Sustain have typically started by purchasing a single module such as cognitive credit & collections, and WNS expects a typical sequence of deployment to be cognitive credit & collections, followed by unified master data, followed by revenue assurance.

WNS has already applied the cognitive credit, touchless cash applications, botified queries, and analytics-as-a-service modules of Quote-to-Sustain for a media client. This company’s cash application process was automated but achieved only a 75% auto-match rate due to delays in the receipt of remittance advice notes. WNS deployed touchless cash applications via EIPP (electronic invoice presentment and payment) to achieve an auto-match rate of 88% and introduced intelligent chatbots and predictive dispute management to reduce the time to resolution significantly. The chatbots resolve most disputes without human intervention, with all trade promotion issues resolved through chatbots.
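To put the auto-match figures in context, the short calculation below works out what the move from 75% to 88% means for the remittances that are not auto-matched. It assumes, for illustration only, that everything not auto-matched needs manual or exception handling; the helper name is hypothetical and the only inputs are the two rates quoted above.

```python
def unmatched_share(auto_match_rate_pct: int) -> int:
    """Percentage of remittances left unmatched (assumed to need manual handling)."""
    return 100 - auto_match_rate_pct


before = unmatched_share(75)   # 25% unmatched before
after = unmatched_share(88)    # 12% unmatched after
point_gain = 88 - 75           # 13 percentage points more auto-matched
relative_reduction_pct = round(100 * (before - after) / before)  # 52
```

On that assumption, a 13-point gain in auto-matching roughly halves the unmatched workload, from 25% to 12% of remittances.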

Overall, the media company has achieved a potential $38m uplift in free cash flow by optimizing payments from late-paying customers and an 11% reduction in bad debts by improving late-stage collection.

In addition to this modular approach being taken with mid-sized organizations, WNS is also targeting start-ups, where the company is in discussion with some organizations for the entire suite of end-to-end services.

Conclusion

Many existing F&A operations have incorporated best-of-breed point solutions and subsequently applied RPA in support of point automations. However, these organizations are often still using disparate data sources and have not fully reimagined their F&A processes into an integrated framework using a single source of the truth and analytics for improved operational and business intelligence. WNS’ Quote-to-Sustain offering aims to provide this reimagined finance model and help organizations become more agile and analytical in their approach to order-to-cash.


Capgemini has just launched version 2 of the Capgemini Intelligent Automation Platform (CIAP) to assist organizations in offering an enterprise-wide and AI-enabled approach to their automation initiatives across IT and business operations. In particular, CIAP offers:

- Reduced TCO and increased resilience through use of shared third-party components

- Support for AIOps and DevSecOps

- A strong focus on problem elimination and functional health checks.

Reduced TCO & increased ability to scale through use of a common automation platform

A common problem with automation initiatives is their distributed nature across the enterprise, with multiple purchasing points and a diverse set of tools and governance, reducing overall RoI and the enterprise's ability to scale automation at speed.

Capgemini aims to address these issues through CIAP, a multi-tenanted cloud-based automation solution that can be used to deliver "automation on tap." It consists of an orchestration and governance platform and the UiPath intelligent automation platform. Each enterprise has a multi-tenanted orchestrator providing a framework for invoking APIs and client scripts together with dedicated bot libraries and a segregated instance of UiPath Studio. A central source of dashboards and analytics is built into the front-end command tower.

While UiPath is provided as an integral part of CIAP, CIAP also provides APIs to integrate other Intelligent Automation platforms with the CIAP orchestration platform, enabling enterprises to continue to optimize the value of their existing use cases.

The central orchestration feature within CIAP removes the need for a series of point solutions, allowing automations to be more end-to-end in scope and removing the need for integration by the client organization. For example, within CIAP, event monitoring can trigger ticket creation, which in turn can automatically trigger a remediation solution.

Another benefit of this shared component approach is reducing TCO by improved sharing of licenses. The client no longer has to duplicate tool purchasing and dedicate components to individual automations; the platform and its toolset can be shared across each of infrastructure, applications, and business services departments within the enterprise.

CIAP is offered on a fixed-price subscription-based model based on "typical" usage levels, with additional charges only applicable where client volumes necessitate additional third-party execution licenses or storage beyond those already incorporated in the package.

Support for AIOps & DevSecOps

CIAP began life focused on application services, and the platform provides support for AIOps and DevSecOps, not just business services.

In particular, CIAP incorporates AIOps using the client's application infrastructure logs for reactive and predictive resolutions. In terms of reactive resolutions, the AIOps can identify the dependent infrastructure components and applications, identify the root cause, and apply any automation available.

CIAP also ingests logs and alerts and uses algorithms to correlate them, so that the resolver group only needs to address a smaller number of independent scenarios rather than each alert individually. The platform can also incorporate the enterprise's known error databases so that if an automated resolution does not exist, the platform can still recommend the most appropriate knowledge objects for use in resolution.
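The correlation step described above can be illustrated with a minimal sketch. It is not Capgemini's algorithm, which the article does not describe; it assumes a simple rule in which alerts from the same component that arrive within a fixed time window of each other belong to one scenario. The `Alert` fields, the `correlate` function, and the 300-second window are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    timestamp: int   # seconds since an arbitrary start (hypothetical field)
    component: str   # infrastructure component that raised the alert
    message: str


def correlate(alerts, window_seconds=300):
    """Group alerts so the resolver group sees scenarios, not single alerts.

    Assumed rule: an alert joins the latest group for its component if it
    arrives within `window_seconds` of that group's most recent alert.
    """
    groups = []
    latest_group = {}  # component -> index of its most recent group
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        idx = latest_group.get(alert.component)
        if idx is not None and alert.timestamp - groups[idx][-1].timestamp <= window_seconds:
            groups[idx].append(alert)
        else:
            groups.append([alert])
            latest_group[alert.component] = len(groups) - 1
    return groups
```

In this sketch, four raw alerts across two components could collapse into three scenarios; a production system would presumably also use topology and dependency data rather than the component name alone.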

Future enhancements include increased emphasis on proactive capacity planning, including proactive simulation of the impact of change in an estate and enhancing the platform's ability to predict a greater range of possible incidents in advance. Capgemini is also enhancing the range of development enablers within the platform to establish CIAP as a DevSecOps platform, supporting the life cycle from design capture through unit and regression testing, all the way to release within the platform, initially starting with the Java and .NET stacks.

A strong focus on problem elimination & functional health checks

Capgemini perceives that repetitive task automation is now well understood by organizations, and the emphasis is increasingly on using AI-based solutions to analyze data patterns and then trigger appropriate actions.

Accordingly, to extend the scope of automation beyond RPA, CIAP provides built-in problem management capability, with the platform using machine learning to analyze historical tickets to identify the causes of recurring problems and, in many cases, initiate remediation automatically. CIAP then aims to reduce the level of manual remediation on an ongoing basis by recommending emerging automation opportunities.

In addition to bots addressing incident and problem management, the platform also has a major emphasis within its bot store on sector-specific bots providing functional health checks for sectors including energy & utilities, manufacturing, financial services, telecoms, life sciences, and retail & CPG. One example in retail is where prices are copied from a central system to store PoS systems daily. However, unreported errors during this process, such as network downtime, can result in some items remaining incorrectly priced in a store PoS system. In response to this issue, Capgemini has developed a bot that compares the pricing between upstream and downstream systems at the end of each batch pricing update, alerting business users, and triggering remediation where discrepancies are identified. Finally, the bot checks that remediation was successful and updates the incident management tool to close the ticket.
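The retail pricing check lends itself to a short illustration. The sketch below is an assumption about how such a reconciliation could work, not the Capgemini bot itself: it compares a central price list with a store PoS price list, re-copies the central price where they differ, and then re-checks before reporting that the ticket can be closed. The function names and the use of integer cents are hypothetical choices.

```python
def find_price_discrepancies(central_prices, store_prices):
    """Return {sku: (central_price, store_price)} for each mismatch.

    A SKU missing from the store system is reported with a store price of None.
    """
    discrepancies = {}
    for sku, central_price in central_prices.items():
        store_price = store_prices.get(sku)
        if store_price != central_price:
            discrepancies[sku] = (central_price, store_price)
    return discrepancies


def reconcile(central_prices, store_prices):
    """Compare, remediate, then verify that remediation succeeded.

    Returns the discrepancies found and a flag saying whether the
    incident ticket can be closed (nothing left to fix).
    """
    found = find_price_discrepancies(central_prices, store_prices)
    for sku, (central_price, _) in found.items():
        store_prices[sku] = central_price  # remediation: re-copy the central price
    remaining = find_price_discrepancies(central_prices, store_prices)
    return found, not remaining
```

Prices are held as integer cents here to avoid floating-point comparison issues; alerting business users and updating the incident management tool are left out of the sketch.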

Similarly, Capgemini has developed a validation script for the utilities sector, which identifies possible discrepancies in meter readings leading to revenue leakage and customer dissatisfaction. For the manufacturing sector, Capgemini has developed a bot that identifies orders that have gone on credit hold, and bots to assist manufacturers in shop floor capacity planning by analyzing equipment maintenance logs and manufacturing cycle times.

CIAP has ~200 bots currently built into the platform library.

A final advantage of using platforms such as CIAP beyond their libraries and cost advantages is that they provide operational resilience by providing orchestrated mechanisms for plugging in the latest technologies in a controlled and cost-effective manner while unplugging or phasing out previous generations of technology, all of which further enhances time to value. This is increasingly important to enterprises as their automation estates grow to take on widespread and strategic operational roles.

]]>

Q&A Part 2

JW: What are the main supply chain flows that supply chain executives should look to address?

JJ: Traditionally, there are three main supply chain flows that benefit from automation:

- Physical flow (flow of goods, e.g., from a DC to a retailer, the most visible and tangible flow) – some more obvious than others, such as parcels delivered to your door or raw materials arriving at a plant. To address these issues, the industry is getting ready (or is ready) to adopt drones, automated trucking, and automated guided vehicles (AGV). But to achieve true end-to-end physical delivery, major infrastructure and regulatory changes are yet to happen to fully unleash the potential of physical automation in this field. In the short-term, however, let’s not forget the critical paper flow associated with these flows of goods, such as a courier sending Bills of Lading to a given port on time for customs clearance and vessel departure, a procedure that often leads to unexpected delays

- Financial flow (flow of money) – here the industry is adopting new technologies to palliate common issues, e.g., interbanking communication in support of letters of credit

- Information flow (flow of information connecting systems and stakeholders alike and ensuring that relevant data is shared, ideally in real-time, between, e.g., a supplier, a manufacturer, and its end customers) – this is the information you share via email/spreadsheets or through a platform connecting you with your ecosystem partners. This flow is also a perfect candidate for automation, starting with a platform to break silos or for smaller transformation with tactical RPA deployments. More ambitious firms will also want to look into blockchain solutions to, for instance, transparently access information about their suppliers and ensure that they are compliant (directly connecting to the blockchain containing information provided by the certification institution such as ISO). While the need for drones and automated trucking/shipping is largely contingent on infrastructure changes, regulations, and incremental discoveries, the financial and information flows have reached a degree of maturity at scale that has already been generating significant quantifiable benefits for years.

JW: Can you give me examples of where Capgemini has deployed elements of an autonomous supply chain?

JJ: Capgemini has developed capabilities to help our clients not only design but also run their services following best-practice methodologies blending optimal competencies, location mix, and processes powered by intelligent automation, analytics, and world-renowned platforms. We have helped clients transform their processes, and we have run them from our centers of excellence/delivery centers to maximize productivity.

Two examples spring to mind:

Touchless planning for an international FMCG company:

Our client had maxed out their forecasting capabilities using standard ERP embedded forecasting modules. Capgemini leveraged our Demand Planning framework powered by intelligent automation and combined it with best-in-class machine learning platforms to increase the client’s forecasting accuracy and lower planning costs by over 25%, and this company is now moving to a touchless planning function.

Automated order validation and delivery note for an international chemical manufacturing company:

Our client was running fulfillment operations internally at a high operating cost and low productivity. Capgemini transformed the client’s operations and created a lean team in a cost-effective nearshore location. On top of this, we leveraged intelligent automation to create a touchless purchase/sales order to delivery note creation flow, checking that all required information is correct, and either raising exceptions or passing on the data further down the process to trigger the delivery of required goods.

JW: What are the key success factors for enterprises starting the journey to autonomous supply chains?

JJ: Moving to an autonomous supply chain is a major business and digital transformation, not a standalone technology play, and so corporate culture is highly important in terms of the enterprise being prepared to embrace significant change and disruption and to operate in an agile and dynamic manner.

To ensure business value, you also need a consistent and holistic methodology such as Capgemini’s Digital Global Enterprise Model, which combines Six Sigma-based optimization approaches with a five senses-driven automation model, a framework for the deployment of intelligent automation and analytics technology.

Also, a lot depends on the quality of the supply chain data. Enterprises need to get the data right and master their supply chain data because you can’t drive autonomy if the data is not readily available, up-to-date in real-time, consistent, and complete. Supply chain and logistics is not so much about moving physical goods; it's been about moving information for decades. A bit of automation here and there will not make your supply chain touchless and autonomous. It requires integration and consolidation first before you can aim for autonomy.

JW: And how should enterprises start to undertake the journey to autonomous supply chains?

JJ: The first step is to build the right level of skill and expertise within the supply chain personnel. Scaling too fast without considering the human factor will result in a massive mess and a dip in supply chain performance. Also, it is important to set a culture of continuous improvement and constant innovation, for example, by leveraging a digitally augmented workforce.

Secondly, the right approach is to make elements of the supply chain touchless. Autonomy will happen as a staged approach, not as a big bang. It’s a journey. Focus on high-impact areas first, enable quick wins, and start with prototyping. So, supply chain executives should identify those pockets of excellence that are close to being ready, or which can be made ready, to be made touchless, and where you can drive supply chain autonomy.

One approach to identifying the most appropriate initiatives is to plot them against two axes: the y-axis being the effort to get there and the x-axis being the impact that can be achieved. This will help identify pockets of value that can be addressed relatively quickly, harvesting some quick wins first. As you progress down this journey, further technologies may mature that allow you to address the last pieces of the puzzle and get to an extensively autonomous supply chain.
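The two-axis approach above can be sketched as a simple classification. This is an illustrative assumption rather than a Capgemini tool: each initiative gets an impact score and an effort score on a 1 to 10 scale, and a midpoint threshold splits the grid into four quadrants. The quadrant labels, the scale, and the function name are hypothetical.

```python
def classify_initiatives(initiatives, threshold=5):
    """Place each initiative in an effort/impact quadrant.

    `initiatives` maps a name to an (impact, effort) pair, where impact is
    the x-axis and effort the y-axis, both scored 1-10 (assumed scale).
    """
    quadrants = {"quick win": [], "major project": [], "fill-in": [], "reconsider": []}
    for name, (impact, effort) in initiatives.items():
        if impact > threshold:
            label = "major project" if effort > threshold else "quick win"
        else:
            label = "reconsider" if effort > threshold else "fill-in"
        quadrants[label].append(name)
    return quadrants
```

Initiatives landing in the "quick win" quadrant (high impact, low effort) would be the ones to harvest first, with "major project" items revisited as technologies mature.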

JW: Which technologies should supply chain executives be considering to underpin their autonomous supply chains in the future?

JJ: Beyond fundamental technologies such as RPA, machine learning has considerable potential to help, for example, in demand planning to increase accuracy, and in fulfillment to connect interaction and decision-making.

Technologies now exist that can both recognize and interpret the text in an email and automatically respond and send all the information required. In order processing, for example, orders can be populated automatically, validated against inventory, and prioritized for delivery according to corporate rules, all without human intervention. This can potentially be extended further with automated carrier bookings against rules. Of course, this largely applies to the “happy flows” at the moment, but there are also proven practices to increase the proportion of “happy orders”.

The level of autonomy in supply chain fulfillment can also be increased by using analytics to monitor supply chain fulfillment and predict potential exceptions and problems, then either automating mitigation or proposing next-best actions to supply chain decision-makers.

This is only the beginning, as AI and blockchain still have a long way to go to reach their potential. Companies that harness their power now and are prepared to scale will be the ones coming out on top.

JW: Thank you, Joerg. I’m sure our readers will find considerable food for thought here as they plan and undertake their journeys to autonomous supply chains.

]]>

Introduction

Supply chain management is an area currently facing considerable pressure and is a key target for transformation. NelsonHall research shows that less than a third of supply chain executives in major enterprises are highly satisfied with, for example, their demand forecasting accuracy and their logistics planning and optimization, and that the majority perceive there to be considerable scope to reduce the levels of manual touchpoints and hand-offs within their supply chain processes as they look to move to more autonomous supply chains.

Accordingly, NelsonHall research shows that 86% of supply chain executives consider the transformation of their supply chains over the next two years to be highly important. This typically involves a redesign of the supply chain to maximize available data sources to deliver more efficient workflow and goods handling, improving connectivity within the supply chain to enable more real-time decision-making, and improving the competitive edge with better decision-making tools, analytics, and data sources supporting optimized storage and transport services.

Key supply chain transformation characteristics critical for driving supply chain autonomy that are sought by the majority of supply chain executives include supply chain standardization, end-to-end visibility of supply chain performance, ability to predict, sense, and adjust in real-time, and closed-loop adaptive planning across functions.

At the KPI level, there are particularly high expectations of higher demand forecasting accuracy; improved logistics planning and optimization, leading to higher levels of fulfillment reliability; and enhanced risk identification, leading to operational cost and working capital reduction.

So, overall, supply chain executives are typically seeking a reduction in supply chain costs, more effective supply chain processes and organization, and improved service levels.

Q&A Part 1

JW: Joerg, to what extent do you see existing supply chains under pressure?

JJ: From a manufacturer looking for increased supply chain resilience and lower costs to a B2C end consumer obsessed with speed, visibility, and aftersales services, supply chains are now under great pressure to transform and adapt themselves to remain competitive in an increasingly demanding and volatile environment.

Supply chain pressure results from increasing levels of supply chain complexity, higher customer expectations, a more volatile environment (e.g., trade wars, Brexit), difficulty in managing costs, and lack of visibility. In particular, global trade has been in a constant state of exception since 2009, creating a need to increase supply chain resilience via increased agility and flexibility and, in sectors such as fast-moving consumer goods and even automotive, hyper-personalization can mean a lot size of one, starting from procurement all the way through production and fulfillment. At the same time, supply chains are no longer simple “chains” but have talent, financial, and physical flows all intertwined in a DNA-like spiral resulting in a (supply chain) ecosystem with high complexity. All this is often compounded by the low level of transparency caused by manual processes. In response, enterprises need to start the journey to autonomous supply chains. However, many supply chains are still not digitized, so there’s a lot of homework to be done before introducing digitalization and autonomous supply chains.

JW: What do you understand by the term “autonomous supply chain”?

JJ: The end game in an “autonomous supply chain” is a supply chain that operates without human intervention. Just imagine a parcel reaching your home, knowing it didn’t take any human intervention to fulfill your order. How much of this is fiction and how much reality?

Well, while some of this certainly depends on major investments and changes to regulations in areas such as sending drones to deliver your parcels, flying over your neighborhood, or loading automated trucks crisscrossing the country with nobody behind the steering wheel, major steps in lowering costs and improving customer satisfaction can already be undertaken using current technologies. Recent surveys show that only a quarter of supply chain leaders perceive that they have reached a satisfactory automation level, leveraging the most innovative end-to-end solutions currently available.

JW: What benefits can companies expect from the implementation of an “autonomous supply chain”?

JJ: Our observations and experience link autonomous supply chains to:

- Lower costs – it is no surprise that supply chain automation already helps to lower costs (and will do even more so in the future), combining FTE savings and lower exception handling costs coupled with productivity and quality gains

- Improved customer satisfaction – as a customer you may ask, why should I care that the processes leading to the delivery of my products are “no touch”, requiring hardly any human intervention? Well, you will when your products are delivered faster and your experience from order to delivery is transparent and seamless, requiring no tedious phone calls to locate your product(s) or complaints about delivery or invoicing errors!

- Increased revenue – as companies process more, faster, with fewer handling and processing errors along the way, they create added value for their customers and benefit from capacity gains that eventually affect their top line, particularly when operational savings are passed on to lower delivery/product prices, thus allowing for a healthy combination of margin and revenue increase.

We have seen that automation can do far more than simply cut costs and that there are many ways to implement automation at scale without relying on infrastructure/regulation changes (e.g., drones) – for example, by leveraging a digitally augmented workforce. Companies have been launching proofs of concept (POCs) but often struggle to reap the true benefits due to talent shortages, siloed processes, and a lack of a long-term holistic vision.

JW: What hurdles do organizations need to overcome to achieve an autonomous supply chain?

JJ: We have observed that companies often face the following hurdles when trying to create a more autonomous supply chain:

- Lack of visibility and transparency – due to 1) outdated process flows, and 2) siloed information systems often requiring email-based information exchange (back and forth non-standardized spreadsheets, flat files)

- Lack of agility (influencing/impacting the overall resilience of the supply chain) – the inability to execute on insights due to slow information velocity and stiffness in their processes, often focused on functions as opposed to value-added processes cutting across the organization

- Lack of the right talent – difficulty in finding talent in a very competitive industry with new technologies making typical supply chain profiles less relevant and new digital profiles often costly to train and hard to retain

- Lack of centralization and consolidation – leading to high costs, poor productivity, and disjointed technology landscapes, often unable to scale across the organization due to a lack of a holistic transformation approach and proper governance.

One thing that many companies have in common is a lack of ability to deploy automation solutions at scale, cost-effectively. Too often, these projects remain at a POC stage and are parked until a new POC (often technology-driven) comes along and yet again fails to scale properly due to high costs, lack of resources, and lack of strategic vision tied to business outcomes.

In Part 2 of the interview, Joerg Junghanns discusses the supply chain flows that benefit from automation, describes client case examples, and highlights the success factors, adoption approach, and key technologies behind autonomous supply chains.

]]>

NelsonHall recently attended the IPsoft Digital Workforce Summit in New York and its analyst events in NY and London. For organizations unfamiliar with IPsoft, the company has around 2,300 employees, approximately 70% of these based in the U.S. and 20% in Europe. Europe is responsible for approximately 30% of the IPsoft client base with clients relatively evenly distributed over the six regions: U.K., Spain & Iberia, France, Benelux, Nordics, and Central Europe.

The company began life with the development of autonomics for ITSM in the form of IPcenter, and in 2014 launched the first version of its Amelia conversational agent. In 2018, the company launched 1Desk, effectively combining its cognitive and autonomic capabilities.

The events outlined IPsoft’s positioning and plans for the future, with the company:

- Investing strongly in Amelia to enhance its contextual understanding and maintain its differentiation from “chatbots”

- Launching “Co-pilot” to remove the currently strong demarcation between automated and agent interactions

- Building use cases and a partner program to boost adoption and sales

- Positioning 1Desk and its associated industry solutions as end-to-end intelligent automation solutions, and the key to the industry and the future of IPsoft.

Enhancing Contextual Understanding to Maintain Amelia’s Differentiation from Chatbots

Amelia has often suffered from being seen at first glance as "just another chatbot". Nonetheless, IPsoft continues to position Amelia as “your digital companion for a better customer service” and to invest heavily to maintain Amelia’s lead in functionality as a cognitive agent. Here, IPsoft is looking to differentiate by stressing Amelia’s contextual awareness and ability to switch contexts within a conversation, thereby “offering the capability to have a natural conversation with an AI platform that really understands you.”

Amelia goes through six pathways in sequence within a conversation to understand each utterance, and the pathway with the highest probability wins. The pathways are:

- Intent model

- Semantic FAQ

- AIML

- Social talk

- Acknowledge

- Don’t know.
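The "highest probability wins" selection across these pathways can be sketched as follows. This is a simplified illustration, not IPsoft's implementation: it assumes each pathway has already produced a probability for the utterance, that ties go to the pathway evaluated earlier, and that "don't know" acts as the fallback when nothing scores above zero. The `select_pathway` function and the score format are hypothetical.

```python
# Pathways in the order listed above
PATHWAYS = [
    "intent model",
    "semantic FAQ",
    "AIML",
    "social talk",
    "acknowledge",
    "don't know",
]


def select_pathway(scores):
    """Pick the pathway with the highest probability for one utterance.

    `scores` maps pathway name to a probability; missing pathways count
    as 0.0. Ties go to the earlier pathway (an assumption), and the
    fallback is "don't know" when no pathway scores above zero.
    """
    best, best_score = None, -1.0
    for pathway in PATHWAYS:
        score = scores.get(pathway, 0.0)
        if score > best_score:
            best, best_score = pathway, score
    return best if best_score > 0 else "don't know"
```

For instance, an utterance scored at 0.4 by the intent model and 0.7 by semantic FAQ would be answered through the semantic FAQ pathway in this sketch.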

The platform also separates “entities” from “intents”, capturing both of these using Natural Language Understanding. Both intent and entity recognition are specific to the language used, though IPsoft is now simplifying implementation further by making processes language-independent and removing the need for the client to implement channel-specific syntax.

A key element in supporting more natural conversations is the use of stochastic business process networks, which means that Amelia can identify the required information as it is provided by the user, rather than having to ask for and accept items of information in a particular sequence as would be the case in a traditional chatbot implementation.
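The contrast with a fixed-sequence chatbot can be illustrated with a small order-independent slot-filling sketch. It does not implement stochastic business process networks, which the article does not detail; it simply shows, using regular expressions as a stand-in for NLU, how required items can be captured in whatever order the user supplies them. The slot names, patterns, and function names are hypothetical.

```python
import re

# Hypothetical slots for a payment request, each with a toy extraction pattern
REQUIRED_SLOTS = {
    "amount": re.compile(r"\$(\d+(?:\.\d{2})?)"),
    "date": re.compile(r"\b(\d{4}-\d{2}-\d{2})\b"),
    "payee": re.compile(r"\bto ([A-Z][a-z]+)\b"),
}


def fill_slots(utterance, slots=None):
    """Capture any still-missing slot found in the utterance, in any order."""
    slots = dict(slots or {})
    for name, pattern in REQUIRED_SLOTS.items():
        if name not in slots:
            match = pattern.search(utterance)
            if match:
                slots[name] = match.group(1)
    return slots


def missing_slots(slots):
    """List the items the agent still needs to ask for."""
    return [name for name in REQUIRED_SLOTS if name not in slots]
```

Here a user who opens with the amount and payee is only asked for the date, rather than being walked through every question in a set order.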

Context switching is also supported within a single conversation, with users able to switch between domains, e.g. from IT support to HR support and back again in a single conversation, subject to the rules on context switching defined by the organization.

Indeed, IPsoft has always had a strong academic and R&D focus and is currently further enhancing and differentiating Amelia through:

- Leveraging ELMo with the aim of achieving intent accuracy of >95% while using only half of the data required in other Deep Neural Net models

- Using NLG to support Elaborate Question Asking (EQA) and Clarifying Question & Answer (CQA) to enable Amelia to follow up dynamically without the need to build business rules.

The company is also looking to incorporate sentiment analysis within voice. While IPsoft regards basic speech-to-text and text-to-speech as commodity technologies, the company is looking to capture sentiment analysis from voice, differentiate through use of SLM/SRGS technology, and improve Amelia’s emotional intelligence by capturing aspects of mood and personality.

Launching Co-pilot to Remove the Demarcation Between Automated Handling and Agent Handling

Traditionally, interactions have either been handled by Amelia or by an agent if Amelia failed to identify the intent or detected issues in the conversation. However, IPsoft is now looking to remove this strong demarcation between chats handled solely by Amelia and chats handled solely by (or handed off in their entirety to) agents. The company has just launched “Co-pilot”, positioned as a platform to allow hybrid levels of automation and collaboration between Amelia, agents, supervisors, and coaches. The platform is currently in beta mode with a major telco and a bank.

The idea is to train Amelia on everything that an agent does to make hand-offs warmer and to increase Amelia’s ability to automate partially, and ultimately handle, edge cases rather than just pass these through to an agent in their original form. Amelia will learn by observing agent interactions when escalations occur and through reinforcement learning via annotations during chat.

When Amelia escalates to an agent using Co-pilot, it will no longer just pass conversation details but will now also offer suggested responses for the agent to select. These responses are automatically generated by crowdsourcing every utterance that every agent has created and then picking those that apply to the particular context, with digital coaches editing the language and content of the preferred responses as necessary.

In the short term, this assists the agent by providing context and potential responses to queries and, in the longer term as this process repeats over queries of the same type, Amelia then learns the correct answers, and ultimately this becomes a new Amelia skill.

Co-pilot is still at an early stage with lots of developments to come and, during 2019, the Co-pilot functionality will be enhanced to recommend responses based on natural language similarity, enable modification of responses by the agent prior to sending, and enable agents to trigger partial automated conversations.

This increased co-working between humans and digital chat agents is key to the future of Amelia since it starts to position Amelia as an integral part of the future contact center journey rather than as a standalone automation tool.

Building Use Cases & Partner Program to Reduce Time to Value

Traditionally, Amelia has been a great cognitive chat technology but a relatively heavy-duty technology seeking a use case rather than an easily implemented general purpose tool, like the majority of the RPA products.

In response, IPsoft is treading the same path as the majority of automation vendors and is looking to encourage organizations (well, at least mid-sized organizations) to hire a “digital worker” rather than build their own. The company estimates that its digital marketplace “1Store” already contains 672 digital workers, which incorporate back-office automation in addition to the Amelia conversational AI interface. For example, for HR, 1Store offers “digital workers” with the following “skills”: absence manager, benefits manager, development manager, onboarding specialist, performance record manager, recruiting specialist, talent management specialist, time & attendance manager, travel & expense manager, and workforce manager.

At the same time, IPsoft is looking to increase the proportion of sales and service through channel partners. Product sales currently make up 56% of IPsoft revenue, with 44% from services. However, the company is looking to steer this ratio further in support of product, by targeting 60% per annum growth in product sales and increasing the proportion of personnel, currently approx. two-thirds, in product-related positions with a contribution from reskilling existing services personnel.

IPsoft has been late to implement its partner strategy relative to other automation software vendors, attributing this early caution in part to the complexity of early implementations of Amelia. Early partners for IPcenter included IBM and NTT DATA, who embedded IPsoft products directly within their own outsourcing services and were supported with “special release overlays” by IPsoft to ensure lack of disruption during product and service upgrades. This type of embedded solution partnership is now increasingly likely to expand to the major CX services vendors as these contact center outsourcers look to assist their clients in their automation strategies.

So, while direct sales still dominate partner sales, IPsoft is now recruiting a partner/channel sales team with a view to reversing this pattern over the next few years. IPsoft has now established a partner program targeting alliance and advisory (where early partners included major consultancies such as Deloitte and PwC), implementation, solution, OEM, and education partners.

1Desk-based End-to-End Automation is the Future for IPsoft

IPsoft has about 600 clients, including approx. 160 standalone Amelia clients, and about a dozen deployments of 1Desk. However, 1Desk is the fastest-growing part of the IPsoft business with 176 enterprises in the pipeline for 1Desk implementations, and IPsoft increasingly regards the various 1Desk solutions as its future.

IPsoft is positioning 1Desk by increasingly talking about ROAI (the return on AI) and suggesting that organizations can achieve 35% ROAI (rather than the current 6%) if they adopt integrated end-to-end automation and bypass intermediary systems such as ticketing systems.

Accordingly, IPsoft is now offering end-to-end intelligent automation capability by combining the Amelia cognitive agent with “an autonomic backbone” courtesy of IPsoft’s IPcenter heritage and with its own RPA technology (1RPA) to form 1Desk.

1Desk, in its initial form, is largely aimed at internal SSC functions including ITSM, HR, and F&A. However, over the next year, it will increasingly be tailored to provide solutions for specific industries. The intent is to enable about 70% of the solution to be implemented “out of the box”, with vanilla implementations taking weeks rather than many months and with completely new skills taking approx. three months to deploy.

The initial industry solution from IPsoft is 1Bank. As the name implies, 1Bank has been developed as a conversational banking agent for retail banking and contains preformed solutions/skills covering the account representative, e.g. for support with payments & bills; the mortgage processor; the credit card processor; and the personal banker, to answer questions about products, services, and accounts.

1Bank will be followed during 2019 by solutions for healthcare, telecoms, and travel.

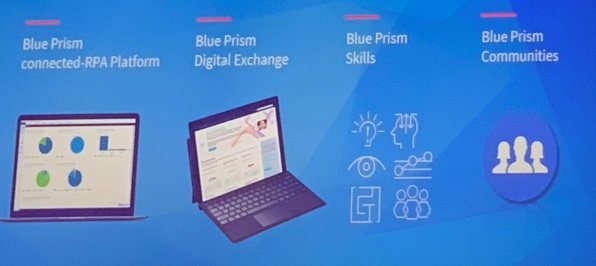

Components of Blue Prism's connected-RPA

Blue Prism is positioning itself by offering mature companies the promise of closing the gap with digital disruptors, both technically and culturally. The cultural aspect is important: Blue Prism technology is positioned as a lever to help organizations attract and inspire their workforce, give digitally-savvy entrepreneurial employees the technology to close the “digital entrepreneur gap”, and close the gap between senior executives and the workforce.

Within this vision, the Blue Prism roadmap is based around helping organizations to:

- Automate more – here, Blue Prism is introducing intelligent automation skills, ML-based process discovery, and DX

- Automate better – with more expansive and scalable automations

- Automate together – by learning from the mistakes and achievements of others.

Introducing intelligent document processing capability

When analyzing the interactions on its Digital Exchange (DX), Blue Prism unsurprisingly found that the single biggest use, with 60% of the items being downloaded from DX, was related to unstructured document processing.

Accordingly, Blue Prism has just announced a beta intelligent document processing program, Decipher. Decipher is positioned as an easy on-ramp to document processing and is a document processing workflow that can be used to ingest & classify unstructured documents. It can be used “out-of-the-box” without the need to purchase additional licenses or products, and organizations can also incorporate their own document capture technologies, such as Abbyy, or document capture services companies within the Decipher framework.

Decipher will clean documents to ensure that they are ready for processing, apply machine learning to classify the documents, and then extract the data. Finally, it will assign a confidence score to the extracted data and, where necessary, pass the document to a business user for validation, incorporating human-in-the-loop assisted learning.
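The confidence-scoring and human-in-the-loop step described above can be pictured with a minimal sketch. This is not Decipher's API: the `ExtractedField` type, the `route` function, and the 0.85 threshold are all hypothetical, chosen only to illustrate how low-confidence extractions might be diverted to a business user while the rest pass straight through.

```python
from dataclasses import dataclass

# Hypothetical threshold; the source does not say what value Decipher uses.
REVIEW_THRESHOLD = 0.85


@dataclass
class ExtractedField:
    name: str
    value: str
    confidence: float  # 0.0 to 1.0, as reported by some ML extractor


def route(fields, threshold=REVIEW_THRESHOLD):
    """Split extracted fields into auto-accepted and human-review lists."""
    accepted = [f for f in fields if f.confidence >= threshold]
    review = [f for f in fields if f.confidence < threshold]
    return accepted, review


# Illustrative invoice fields with made-up confidence scores.
invoice = [
    ExtractedField("invoice_number", "INV-1001", 0.98),
    ExtractedField("total", "1,250.00", 0.62),
]
accepted, review = route(invoice)
```

In this sketch the invoice number is accepted automatically, while the low-confidence total is queued for a person to check. In an assisted-learning setup, the corrections made at that point would be fed back as training data.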

Accordingly, Decipher is viewed by Blue Prism as a first step in the increasingly important move beyond rule-based RPA to introduce machine learning-based human-in-the-loop capability. Not surprisingly, Blue Prism recognizes that, as machine learning becomes more important, people will need to be brought into the loop much more than at present to validate “low-confidence” decisions and to provide assisted learning to the machine learning.

Decipher is starting with invoice processing and will then expand to handle other document types.

Improving control of assets within Digital Exchange (DX)

The Digital Exchange (DX) is another vital component in Blue Prism’s vision of connected-RPA.

Enhancements planned for DX include making it easier for organizations to collaborate and share knowledge, and facilitating greater security and control of assets by enabling an organization to control the assets available to itself. Assets will be able to be marked as private, effectively providing an enterprise-specific version of the Blue Prism Digital Exchange. Within DX, there will also be a “skills” drag-and-drop toolbar so that users, and not just partners, will be able to publish skills.

Blue Prism, like Automation Anywhere, is also looking to bring an e-commerce flavor to its DX: developers will be able to create skills and then sell them. Initially, Blue Prism will build some artifacts itself. Others will be offered free of charge by partners in the short term, with a view to enabling partners to monetize their assets in the near term.

Re-aligning architecture & introducing AI-related skills

Blue Prism has been working closely with cloud vendors to re-align its architecture, and in particular to rework its UI to appeal to a broader range of users and make Blue Prism more accessible to business users.

Blue Prism is also improving its underlying architecture to make it more scalable as well as more cloud-friendly. There will be a new, more native and automated means of controlling bots via a browser interface available on mobiles and tablets that will show the health of the environment in terms of meeting SLAs, and provide notifications showing where interventions are required. Blue Prism views this as a key step in moving towards provision of a fully autonomous digital workforce that manages itself.

Data gateways (available on April 30, 2019 in v6.5) are also being introduced to make Blue Prism more flexible in its use of generated data. Organizations will be able to take data from the Blue Prism platform and send it to ML for reporting, etc.

However, Blue Prism will continue to use commodity AI and is looking to expand the universe of technologies available to organizations and bring them into the Blue Prism platform without the necessity for lots of coding. This is being done via continuing to expand the number of Blue Prism partners and by introducing the concept of Blue Prism skills.

At Blue Prism World, the company announced five new partners:

- Bizagi, for process documentation and modeling, connecting with both on-premise and cloud-based RPA

- Hitachi ID Systems, for enhanced identity and access management

- RPA Supervisor, an added layer of monitoring & control

- Systran, providing digital workers with translation into 50 languages

- Winshuttle, for facilitating transfer of data with SAP.

At the same time, the company announced six AI-related skills:

- Knowledge & insight

- Learning

- Visual perception: OCR technologies and computer vision

- Problem-solving

- Collaboration: human interaction and human-in-the-loop

- Planning & sequencing.

Going forward

Blue Prism recognizes that while the majority of users presenting at its conferences may still be focused on introducing rule-based processes (and on a show of hands, a surprisingly high proportion of attendees were only just starting their RPA journeys), the company now needs to take major strides in making automation scalable, and in more directly embracing machine learning and analytics.

The company has been slightly slow to move in this direction, but launched Blue Prism labs last year to look at the future of the digital worker, and the labs are working on addressing the need for:

- More advanced process analytics and process discovery

- More inventive and comprehensive use of machine learning (though the company will principally continue to partner for specialized use cases)

- Introduction of real-time analytics directly into business processes.

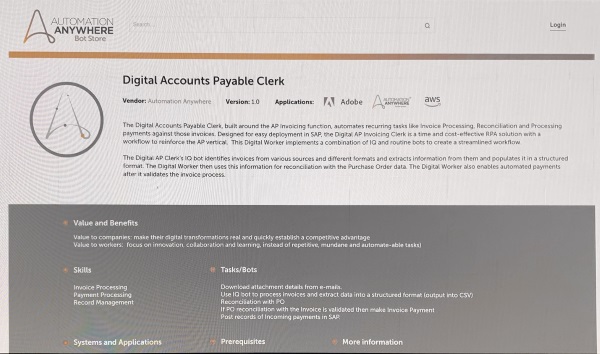

So far, downloads from the Bot Store have been free-of-charge, but Automation Anywhere perceives that this approach potentially limits the value achievable from the Bot Store. Accordingly, the company is now introducing monetization to provide value back to developers contributing bots and Digital Workers to the Bot Store, and to increase the value that clients can receive. In effect, Automation Anywhere is looking to provide value as a two-way street.

The timing for introducing monetization to the Bot Store will be as follows:

- April 16, 2019: announcement and start of sales process validation with a small number of bots and bot bundles priced within the Bot Store. Examples of “bot bundles” include a number of bots for handling email operation around Outlook or bots for handling common Excel operations

- May 2019: Availability of best practice guides for developers containing guidelines on how to write bots that are modular and easy to onboard. Start of developer sign-up

- Early summer 2019: customer launch through the direct sales channel. At this stage, bots and Digital Workers will only be available through the formal direct sales quotation process rather than via credit card purchases

- Late summer 2019: launch of “consumer model” and Bot Store credit card payments.

Pricing, initially in US$ only, will be per bot or Digital Worker, with a 70:30 revenue split between the developer and Automation Anywhere. Automation Anywhere will handle the billing and pay the developer monthly. Buyers will have a limited free trial period (initially 30 days, but under review), and IP protection is being introduced so that buyers will not have access to the source code. The original developer will retain responsibility for building, supporting, maintaining, and updating their bots and Digital Workers. Automation Anywhere is developing some Digital Workers itself to seed the Bot Store with examples, but has no desire to develop Digital Workers itself in the medium term and may, once the concept is well proven, hand over or license the Digital Workers it has developed to third-party developers.
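As a rough illustration of the 70:30 split, the arithmetic can be sketched as follows. The function name, the use of integer cents, and the example price and volume are assumptions made for illustration only. The source does not describe how Automation Anywhere calculates or rounds payouts.

```python
# 70:30 split between developer and Automation Anywhere, as stated in the text.
DEVELOPER_SHARE_PERCENT = 70


def split_revenue(price_cents: int, units_sold: int):
    """Return (developer, platform) revenue in cents for a given sales volume."""
    gross = price_cents * units_sold
    # Integer arithmetic avoids floating-point rounding; any odd cent
    # falls to the platform side in this sketch (an assumption).
    developer = gross * DEVELOPER_SHARE_PERCENT // 100
    platform = gross - developer
    return developer, platform


# Hypothetical example: ten sales of a bot priced at US$800.
developer, platform = split_revenue(80_000, 10)
```

With these illustrative figures, gross revenue is US$8,000, of which US$5,600 goes to the developer and US$2,400 to Automation Anywhere.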

Automation Anywhere clearly expects that a number of smaller systems integrators will switch their primary business model from professional services to a product model, building bots for the Bot Store. It is offering developers the promise of a recurring revenue stream and global distribution, ultimately not only through the Bot Store but also through Automation Anywhere and its partners. Although payment will be monthly, developers will receive real-time transaction reporting to assist them in their financial management. For professional services firms retaining a strong professional services focus, but used to operating on a project basis, Automation Anywhere perceives that licensing and updating Digital Workers within this model could provide both a supplementary revenue stream and, possibly more importantly, a means to maintain an ongoing relationship with the client organization.

In addition to systems integrators, Automation Anywhere is targeting ISVs who, like Workday, can use the Bot Store and Automation Anywhere to facilitate deployment and operation of their software by introducing Digital Workers that go way beyond simple connectors. Although the primary motivation of these firms is likely to be to reduce the time to value for their own products, Automation Anywhere expects ISVs to be cognizant of the cost of adoption and to price their Digital Workers at levels that will provide both a reduced cost of adoption to the client and a worthwhile revenue stream to the ISV. Pricing of Digital Workers in the range of $800 to as high as $12K-$15K per annum has been mentioned.

So far, inter-enterprise bot libraries have largely been about providing basic building blocks that are commonly used across a wide range of processes. The individual bots have typically required little or no maintenance and have been disposable in nature. Automation Anywhere is now looking to transform the concept of bot libraries to that of bot marketplaces to add a much higher, and long-lived, value add and to put bots on a similar footing to temporary staff with updateable skills.

The company is also aiming to steal a lead in the development of such bots and, preferably, Digital Workers, by providing third parties with the financial incentive to develop for its own platform rather than a rival's.

The company was initially slow to go-to-market in Europe relative to Blue Prism and UiPath, but estimates it has more than tripled its number of customers in Europe in the past 12 months.

NelsonHall attended the recent Automation Anywhere conference in Europe, where the theme of the event was “Delivering Digital Workforce for Everyone” with the following sub-themes:

- Automate Everything

- Adopted by Everyone

- Available Everywhere.

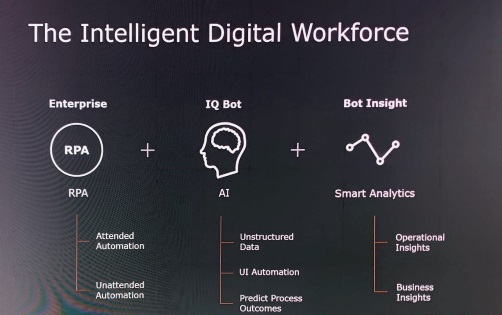

Automate Everything

Automation Anywhere is positioning itself as “the only multi-product vendor”, though it is debatable whether this is entirely true and whether it is desirable to position the various components of intelligent automation as separate products.

Nonetheless, Automation Anywhere is clearly correct in stating that, “work begins with data (structured and unstructured) – then comes analysis to get insight – then decisions are made (rule-based or cognitive) – which leads to actions – and then the cycle repeats”.

Accordingly, “an Intelligent RPA platform is a requirement. AI cannot be an afterthought. It has to be part of current processes” and so Automation Anywhere comes to the following conclusion:

Intelligent digital workforce = RPA (attended + unattended) + AI + Analytics

Translated into the Automation Anywhere product range, this becomes:

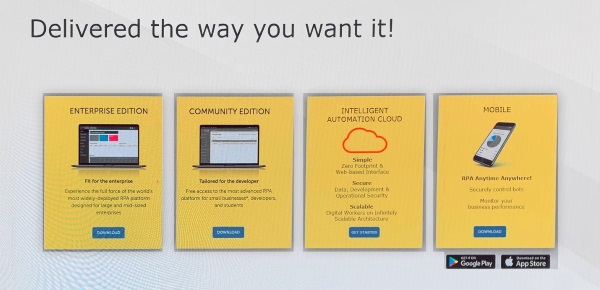

Adopted by Everyone

Automation Anywhere clearly sees the current RPA market as a land grab and is working hard to scale adoption fast, both within existing clients and to new clients, and for each role within the organization.

The company has traditionally focused on the enterprise market with organizations such as AT&T, ANZ, and Bank of Columbia using 1,000s of bots. For these companies, transformation is just beginning as they now look to move beyond traditional RPA, and Automation Anywhere is working to include AI and analytics to meet their needs. However, Automation Anywhere is now targeting all sizes of organization and sees much of its future growth coming from the mid-market (“automation has to work for all sizes of organization”) and so is looking to facilitate adoption here by introducing a cloud version and a Bot Store.