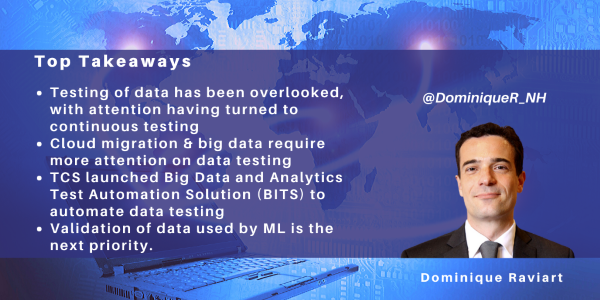

posted on Jan 08, 2020 by Dominique Raviart

In testing services/quality assurance, data testing has historically been somewhat overlooked and still relies largely on spreadsheets and manual tasks.

While much of the current attention is on agile/continuous testing, data testing remains an important element of IT projects. It gained further interest a few years ago with big data, as organizations migrated data from databases to data lakes, and this renewed interest continues today with a focus on the quality of the data used in ML projects.

We talked to TCS about its activities for automating data testing. The company recently launched its Big Data and Analytics Test Automation Platform Solution (BITS) IP, which targets use cases including the validation of:

- Data across technologies such as Enterprise Data warehouse (EDW) and data lakes

- Migrations from on-premises to the cloud (e.g. AWS, Microsoft Azure and Google Cloud Platform)

- Report data

- Analytical/AI models.

Testing Data at Scale through Automation

The principle of testing data is straightforward and involves comparing target data with source data. However, TCS highlights that big data projects bring new challenges to data testing, such as:

- The diversity of sources, e.g. relational (RDBMS) and NoSQL databases, mainframe applications and files, along with EDWs and Apache Hadoop-related software (HDFS, Hive, Impala and HBase)

- The volume of data: with the fast adoption of big data, clients are now migrating their key databases, which hold large amounts of data

- The technologies at stake: in the past, testers would have written SQL queries, but SQL queries alone do not suffice in the world of big data.

To cope with these three challenges, TCS has built automation into BITS for:

- Determining non-standard data by identifying data that is incorrect or non-compliant with industry regulations and proprietary business rules

- Validating data quality once the data transformation is complete, by identifying duplicates in the source, duplicates in the target, missing data in the target and extra data in the target

- Identifying source-target mismatches at the individual data level.
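BITS is proprietary and the post does not describe its internals. As a rough illustration of the checks listed above, the following minimal Python sketch compares in-memory source and target extracts keyed on a hypothetical `id` column. The functions `reconcile` and `find_non_standard`, the column names and the sample rule are illustrative assumptions, not BITS APIs; at big-data volumes the same logic would typically run as a distributed join rather than in memory.

```python
from collections import Counter
from typing import Any, Callable, Dict, List, Tuple

# Illustrative sketch only; not the BITS implementation.
Row = Dict[str, Any]


def reconcile(source: List[Row], target: List[Row], key: str) -> Dict[str, list]:
    """Compare source and target rows on a (hypothetical) business key column."""
    src_counts = Counter(row[key] for row in source)
    tgt_counts = Counter(row[key] for row in target)

    # Keep the first occurrence of each key for field-level comparison.
    src_by_key: Dict[Any, Row] = {}
    for row in source:
        src_by_key.setdefault(row[key], row)
    tgt_by_key: Dict[Any, Row] = {}
    for row in target:
        tgt_by_key.setdefault(row[key], row)

    # Field-level mismatches for keys present on both sides.
    mismatches: List[Tuple[Any, str, Any, Any]] = []
    for k in sorted(src_by_key.keys() & tgt_by_key.keys()):
        src_row, tgt_row = src_by_key[k], tgt_by_key[k]
        for col in sorted(src_row.keys() | tgt_row.keys()):
            if src_row.get(col) != tgt_row.get(col):
                mismatches.append((k, col, src_row.get(col), tgt_row.get(col)))

    return {
        "duplicates_in_source": sorted(k for k, n in src_counts.items() if n > 1),
        "duplicates_in_target": sorted(k for k, n in tgt_counts.items() if n > 1),
        "missing_in_target": sorted(src_counts.keys() - tgt_counts.keys()),
        "extra_in_target": sorted(tgt_counts.keys() - src_counts.keys()),
        "mismatches": mismatches,
    }


def find_non_standard(rows: List[Row], rules: Dict[str, Callable[[Any], bool]]) -> List[Tuple[int, str]]:
    """Return (row index, column) pairs whose value fails a validation rule."""
    return [
        (i, col)
        for i, row in enumerate(rows)
        for col, is_valid in rules.items()
        if not is_valid(row.get(col))
    ]


# Usage with made-up sample extracts.
source = [{"id": 1, "amount": 100}, {"id": 2, "amount": 250},
          {"id": 2, "amount": 250}, {"id": 3, "amount": 75}]
target = [{"id": 1, "amount": 100}, {"id": 2, "amount": 205},
          {"id": 4, "amount": -10}]
print(reconcile(source, target, key="id"))
# Hypothetical business rule: amounts must be non-negative integers.
print(find_non_standard(target, {"amount": lambda v: isinstance(v, int) and v >= 0}))
```

Here key 2 is flagged as a source duplicate and as a field-level mismatch on `amount`, key 3 as missing in the target, and key 4 as extra in the target; the rule check flags the negative amount in the third target row.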

An example client is a large mining firm, which is using BITS to validate the quality of its analytics reports and dashboards. The client uses these reports and dashboards to monitor its business and requires reliable data that is refreshed daily. TCS highlights that BITS can achieve up to 100% coverage and improve tester productivity by 30% to 60%.

Overall, TCS sees good traction for BITS in BFSI globally, as the banking industry moves from EDWs and proprietary databases to data lakes. Other promising industries include retail, healthcare, resources and communications.

TCS believes BITS has great potential and plans to create additional plug-ins to connect with more data sources, developing them through a project-led approach.

Validating the Data Used by ML

Beyond data validation, TCS has also positioned BITS for ML, through the testing of ML-based algorithms.

The company began its work on ML-based algorithms with linear regression, one of the most common statistical techniques, which is often used to predict an output (the “dependent variable”) from existing data (the “independent variables”). TCS is still at an early stage and currently focuses on assessing the data used to create the algorithm, identifying invalid data such as blanks, duplicates or non-compliant data. BITS automatically removes the invalid data, runs the analytical model and assesses how the cleaned data affects the algorithm's accuracy.
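The clean-then-compare loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not TCS's method: it uses simple (one-variable) linear regression fitted by ordinary least squares, a hypothetical compliance rule (y must be non-negative), and mean squared error on a small hold-out set as the accuracy measure. The function names are made up for the example.

```python
from typing import Callable, List, Optional, Tuple

# (x, y) observation; None marks a blank value. Illustrative sketch only.
Row = Tuple[Optional[float], Optional[float]]
Point = Tuple[float, float]


def remove_invalid(rows: List[Row], is_compliant: Callable[[Point], bool]) -> List[Point]:
    """Drop blanks, exact duplicates and rows failing a hypothetical business rule."""
    seen = set()
    clean: List[Point] = []
    for row in rows:
        x, y = row
        if x is None or y is None:
            continue  # blank value
        if row in seen:
            continue  # duplicate of an earlier row
        seen.add(row)
        if not is_compliant((x, y)):
            continue  # non-compliant value
        clean.append((x, y))
    return clean


def fit_simple_ols(rows: List[Point]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x; returns (slope, intercept)."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in rows)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_squared_error(model: Tuple[float, float], rows: List[Point]) -> float:
    slope, intercept = model
    return sum((y - (intercept + slope * x)) ** 2 for x, y in rows) / len(rows)


def y_non_negative(row: Point) -> bool:
    return row[1] >= 0  # hypothetical compliance rule


# Made-up data following y = 2x + 1, plus a blank in each column, a duplicate
# and a non-compliant outlier.
train: List[Row] = [(1, 3), (2, 5), (3, 7), (4, 9), (5, 11),
                    (None, 4), (3, None), (2, 5), (6, -500)]
holdout: List[Point] = [(7, 15), (8, 17)]

# Baseline: drop blanks only, since the model cannot be fitted on them.
baseline = [(x, y) for x, y in train if x is not None and y is not None]
cleaned = remove_invalid(train, y_non_negative)

mse_baseline = mean_squared_error(fit_simple_ols(baseline), holdout)
mse_cleaned = mean_squared_error(fit_simple_ols(cleaned), holdout)
print(f"baseline MSE: {mse_baseline:.2f}, cleaned MSE: {mse_cleaned:.2f}")
```

Because the cleaned rows lie exactly on y = 2x + 1, the cleaned model's hold-out error is zero, while the duplicate and the outlier drag the baseline fit away from the true line. Real data would show a smaller, noisier difference.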

Alongside analytical model validation, TCS is also working on linear regression-based model simulation, looking at how best to use training and testing data. One of the challenges of ML is the relative scarcity of data, which forces a trade-off between training the algorithm (i.e. improving its accuracy) and testing it (once the algorithm has been finalized). Generally, the more data is used for training, the more accurate the algorithm. However, testing an algorithm requires fresh data that has not been used for training.

While the industry typically uses a training-to-testing ratio of 80:20, TCS helps fine-tune the mix by simulating ten possibilities and selecting the one that optimizes the algorithm.
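The post does not say which ten mixes TCS simulates or how it scores them. The following minimal Python sketch assumes ten training shares from 50% to 95% in 5-point steps (so 80:20 is one of the candidates), a simple one-variable least-squares model, and test-set mean squared error as the selection criterion; `simulate_splits` and the synthetic data are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Illustrative sketch only; the candidate mixes and scoring are assumptions.
Point = Tuple[float, float]


def fit_simple_ols(rows: Sequence[Point]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x; returns (slope, intercept)."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in rows)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_squared_error(model: Tuple[float, float], rows: Sequence[Point]) -> float:
    slope, intercept = model
    return sum((y - (intercept + slope * x)) ** 2 for x, y in rows) / len(rows)


def simulate_splits(rows: List[Point],
                    percentages: Sequence[int] = range(50, 100, 5),
                    seed: int = 0) -> Tuple[int, Dict[int, float]]:
    """Try each training percentage and return the one with the lowest test error."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)  # one fixed shuffle so splits are comparable
    errors: Dict[int, float] = {}
    for pct in percentages:  # ten candidate mixes: 50:50, 55:45, ..., 95:5
        n_train = len(shuffled) * pct // 100
        train, test = shuffled[:n_train], shuffled[n_train:]
        errors[pct] = mean_squared_error(fit_simple_ols(train), test)
    best = min(errors, key=errors.get)
    return best, errors


# Synthetic data: y = 3x + 2 plus Gaussian noise (illustrative only).
noise = random.Random(42)
data = [(float(x), 3.0 * x + 2.0 + noise.gauss(0.0, 1.0)) for x in range(40)]
best_pct, errors = simulate_splits(data)
print(f"best train:test mix = {best_pct}:{100 - best_pct}")
```

Two caveats apply to this design. With 40 rows, the 95:5 split leaves only two test points, so errors measured on test sets of different sizes are noisy; repeated random splits or cross-validation would give a steadier comparison. In addition, picking the mix on the same data later used for final testing leaks information, so a separate validation set is preferable in practice.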

TCS sells its data and algorithm testing services, using BITS, through pricing models including T&M, fixed price and subscription.

Roadmap: Expanding from Linear Regression to Other Statistical Models

TCS will continue to invest in the ML validation capabilities of BITS and intends to expand to other statistical models such as decision trees and clustering models. The accelerating adoption of ML and other digital technologies is a strategic opportunity for TCS’ services across its data testing and analytical model portfolio.