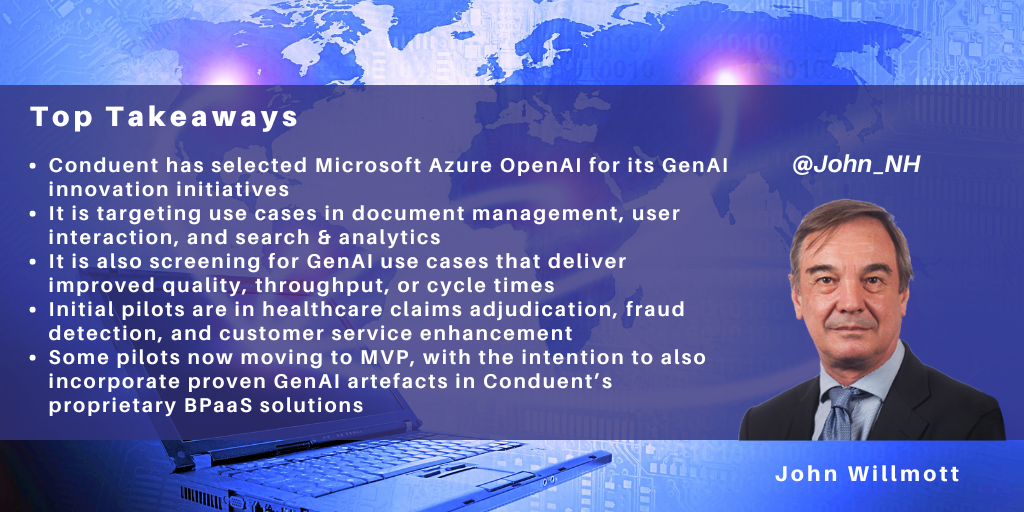

Conduent has partnered with Microsoft to use Microsoft Azure OpenAI to underpin its GenAI innovation initiatives with clients.

Its GenAI journey includes:

- Selecting use cases focused on improving quality, throughput, and cycle times

- Adoption of pilots in healthcare claims adjudication, fraud detection, and customer service enhancement

- Subsequently, moving to MVPs and industrializing use cases.

Use Case Selection Criteria Focused on Improving Quality, Throughput, and Cycle Times

Conduent recognizes that GenAI is an expensive technology and that its adoption will typically incur costs in changing existing processes and technology stacks. This makes it difficult to build GenAI business cases on already optimized operations based solely on cost reduction. Hence, Conduent is focusing on “innovative additive opportunities” to make the business case work.

The outcomes that Conduent is targeting from GenAI initiatives are:

- Improved quality, reducing error rates across standardized workflows

- Increased throughput of business process transactions

- Faster cycle times by consolidating value chain steps for faster processing.

At the same time, Conduent’s client relationships tend to involve relatively deep end-to-end service provision across a range of processes rather than single-process support. These combinations of services are tailored for specific clients rather than being standalone commoditized services.

Accordingly, Conduent’s document management services and CX services are generally supplied as part of a wider capability rather than as standalone services. Within this pattern, most of its solutions include elements of:

- Document management, including summarization & analysis, and extracting and contextualizing text within images

- User interaction/call center with multiple clients across various sectors, including enhancing agent assist, virtual agent, and call center agent assessment

- Search & analytics.

These three areas are regarded as core competencies by Conduent and all its GenAI use cases for the immediate future will fall into one of these areas and will be capable of delivering improved quality, throughput, and cycle times.

Initial GenAI PoCs Focus on Healthcare Claims Adjudication, Fraud Detection, and Customer Service Enhancement

Conduent has announced three GenAI pilot areas covering healthcare claims adjudication, state government program fraud detection, and customer service enhancement.

Conduent is a major provider of healthcare claims adjudication services. Here, it is working on a PoC with several healthcare clients to apply GenAI to reduce the error rate in data extraction and achieve faster cycle times in claims adjudication. GenAI is being used within document management to summarize highly unstructured documents, such as appeals documents and medical records, using its contextualization capabilities, including image-to-text. The technologies used are Azure AI Document Intelligence and Azure OpenAI Service.

Secondly, Conduent is working on a fraud detection PoC to support social programs in the U.S. state government sector. This PoC uses GenAI for search & analytics across multiple structured and unstructured data sets to increase the volume and speed of fraud detection. The technologies used here are Azure Data Factory and Azure OpenAI Service.

Finally, Conduent is using GenAI to enhance the use of agent assist and virtual agents by training virtual agents on existing data so that they can be deployed much faster.

Moving to MVPs and Industrializing GenAI Use Cases

Conduent initially undertook PoCs in the above areas to get both Conduent and the client comfortable with the results of applying GenAI and prove the use case. The PoC process is iterative and very granular, and Conduent perceives that organizations need to be extremely prescriptive to get the right results. This typically means defining the specific inputs and outputs of the use case very tightly, including defining what GenAI should do in the absence of individual inputs.
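One way to picture this level of prescriptiveness is as an explicit contract for the use case, written down before any model is called. The sketch below is illustrative only: the claims-summary scenario, the field names, and the fallback rules are hypothetical assumptions, not Conduent's actual specification.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ClaimSummaryRequest:
    """Hypothetical inputs for a claims-summarization use case."""
    claim_id: str
    appeal_text: Optional[str] = None          # may be absent
    medical_record_text: Optional[str] = None  # may be absent


@dataclass
class ClaimSummaryPlan:
    """What the GenAI step is permitted to do for a given request."""
    claim_id: str
    action: str                      # "summarize" or "route_to_human"
    sources: List[str] = field(default_factory=list)
    note: str = ""


def plan_summary(req: ClaimSummaryRequest) -> ClaimSummaryPlan:
    # Keep only the inputs that are actually present and non-empty.
    candidates = (
        ("appeal", req.appeal_text),
        ("medical_record", req.medical_record_text),
    )
    sources = [name for name, text in candidates if text and text.strip()]

    if not sources:
        # Explicit rule for missing inputs: the model is never asked to guess.
        return ClaimSummaryPlan(req.claim_id, "route_to_human", [],
                                "no source documents supplied")
    if len(sources) < len(candidates):
        note = "partial inputs: summary must state which document was missing"
    else:
        note = "all inputs present"
    return ClaimSummaryPlan(req.claim_id, "summarize", sources, note)
```

The point of the sketch is that the behavior for each missing input is decided up front rather than left to the model.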

Conduent is now moving towards MVP and building client business cases in several of these pilots, including in some pilots in the document management space. Since Conduent has taken a horizontal approach to its use case selection, many of these have the potential to scale across multiple industry segments and clients.

In addition, Conduent provides BPaaS services to many of its clients, so it is looking to embed proven GenAI use cases in its proprietary technology platforms.

Existing use cases are business unit-sponsored but curated centrally, with the center providing enablement and cross-pollination across business units. However, the intention is to incubate future GenAI capability within individual business units.

NelsonHall recently attended the IPsoft Digital Workforce Summit in New York and its analyst events in NY and London. For organizations unfamiliar with IPsoft, the company has around 2,300 employees, approximately 70% of these based in the U.S. and 20% in Europe. Europe is responsible for approximately 30% of the IPsoft client base with clients relatively evenly distributed over the six regions: U.K., Spain & Iberia, France, Benelux, Nordics, and Central Europe.

The company began life with the development of autonomics for ITSM in the form of IPcenter, and in 2014 launched the first version of its Amelia conversational agent. In 2018, the company launched 1Desk, effectively combining its cognitive and autonomic capabilities.

The events outlined IPsoft’s positioning and plans for the future, with the company:

- Investing strongly in Amelia to enhance its contextual understanding and maintain its differentiation from “chatbots”

- Launching “Co-pilot” to remove the currently strong demarcation between automated and agent interactions

- Building use cases and a partner program to boost adoption and sales

- Positioning 1Desk and its associated industry solutions as end-to-end intelligent automation solutions, and the key to the industry and the future of IPsoft.

Enhancing Contextual Understanding to Maintain Amelia’s Differentiation from Chatbots

Amelia has often suffered from being seen at first glance as "just another chatbot". Nonetheless, IPsoft continues to position Amelia as “your digital companion for a better customer service” and to invest heavily to maintain Amelia’s lead in functionality as a cognitive agent. Here, IPsoft is looking to differentiate by stressing Amelia’s contextual awareness and ability to switch contexts within a conversation, thereby “offering the capability to have a natural conversation with an AI platform that really understands you.”

Amelia goes through six pathways in sequence within a conversation to understand each utterance, and the pathway with the highest probability wins. The pathways are:

- Intent model

- Semantic FAQ

- AIML

- Social talk

- Acknowledge

- Don’t know.
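The "highest probability wins" selection described above can be sketched as follows. This is a minimal illustration and not IPsoft's implementation: the pathway functions, their keyword rules, and the probability values are all hypothetical stand-ins, and only three of the six pathways are stubbed.

```python
from typing import Callable, Dict, Tuple

# Each hypothetical pathway returns (probability, response) for an utterance.
Pathway = Callable[[str], Tuple[float, str]]


def intent_model(utterance: str) -> Tuple[float, str]:
    text = utterance.lower()
    if "reset" in text and "password" in text:
        return 0.9, "Starting the password reset process."
    return 0.1, ""


def semantic_faq(utterance: str) -> Tuple[float, str]:
    if "opening hours" in utterance.lower():
        return 0.8, "Branches are open on weekdays."
    return 0.05, ""


def dont_know(utterance: str) -> Tuple[float, str]:
    # Fallback pathway with a fixed, low score.
    return 0.2, "Sorry, I did not understand that."


# Evaluated in sequence; dict insertion order is preserved in Python 3.7+.
PATHWAYS: Dict[str, Pathway] = {
    "intent_model": intent_model,
    "semantic_faq": semantic_faq,
    "dont_know": dont_know,
}


def select_pathway(utterance: str) -> Tuple[str, str]:
    """Score the utterance on every pathway and return the winner."""
    scored = {name: fn(utterance) for name, fn in PATHWAYS.items()}
    winner = max(scored, key=lambda name: scored[name][0])
    return winner, scored[winner][1]
```

For example, `select_pathway("Please reset my password")` picks the intent pathway, while an unrecognized utterance falls through to the "don't know" pathway.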

The platform also separates “entities” from “intents”, capturing both of these using Natural Language Understanding. Both intent and entity recognition are specific to the language used, though IPsoft is now simplifying implementation further by making processes language-independent and removing the need for the client to implement channel-specific syntax.

A key element in supporting more natural conversations is the use of stochastic business process networks, which means that Amelia can identify the required information as it is provided by the user, rather than having to ask for and accept items of information in a particular sequence as would be the case in a traditional chatbot implementation.
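The difference from a fixed-sequence chatbot can be illustrated with a simple order-independent slot-filling sketch. This is a deliberately simplified stand-in rather than a stochastic business process network: the payment scenario, the slot names, and the regular-expression extractors are hypothetical, and a real system would rely on NLU rather than patterns.

```python
import re
from typing import Dict, List

# Hypothetical information items needed to complete a payment request.
REQUIRED_SLOTS = ["amount", "payee", "date"]

# Toy extractors standing in for real entity recognition.
EXTRACTORS = {
    "amount": re.compile(r"\$(\d+(?:\.\d{2})?)"),
    "payee": re.compile(r"\bto (\w+)", re.IGNORECASE),
    "date": re.compile(r"\bon (\w+day)\b", re.IGNORECASE),
}


def update_slots(slots: Dict[str, str], utterance: str) -> Dict[str, str]:
    """Capture any still-missing items found in the utterance, in any order."""
    for name, pattern in EXTRACTORS.items():
        if name not in slots:
            match = pattern.search(utterance)
            if match:
                slots[name] = match.group(1)
    return slots


def missing_slots(slots: Dict[str, str]) -> List[str]:
    """Items the agent still needs to ask about."""
    return [name for name in REQUIRED_SLOTS if name not in slots]
```

Here a user who says "Pay $50 on Friday" has already supplied two of the three items, so the agent only needs to ask for the payee instead of walking through every question in a fixed order.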

Context switching is also supported within a single conversation, with users able to switch between domains, e.g. from IT support to HR support and back again in a single conversation, subject to the rules on context switching defined by the organization.

Indeed, IPsoft has always had a strong academic and R&D focus and is currently further enhancing and differentiating Amelia through:

- Leveraging ELMo with the aim of achieving intent accuracy of >95% while using only half of the data required in other Deep Neural Net models

- Using NLG to support Elaborate Question Asking (EQA) and Clarifying Question & Answer (CQA) to enable Amelia to follow-up dynamically without the need to build business rules.

The company is also looking to incorporate sentiment analysis within voice. While IPsoft regards basic speech-to-text and text-to-speech as commodity technologies, the company is looking to capture sentiment analysis from voice, differentiate through use of SLM/SRGS technology, and improve Amelia’s emotional intelligence by capturing aspects of mood and personality.

Launching Co-pilot to Remove the Demarcation Between Automated Handling and Agent Handling

Traditionally, interactions have either been handled by Amelia or by an agent if Amelia failed to identify the intent or detected issues in the conversation. However, IPsoft is now looking to remove this strong demarcation between chats handled solely by Amelia and chats handled solely by (or handed off in their entirety to) agents. The company has just launched “Co-pilot”, positioned as a platform to allow hybrid levels of automation and collaboration between Amelia, agents, supervisors, and coaches. The platform is currently in beta mode with a major telco and a bank.

The idea is to train Amelia on everything that an agent does to make hand-offs warmer and to increase Amelia’s ability to automate partially, and ultimately handle, edge cases rather than just pass these through to an agent in their original form. Amelia will learn by observing agent interactions when escalations occur and through reinforcement learning via annotations during chat.

When Amelia escalates to an agent using Co-pilot, it will no longer just pass conversation details but will now also offer suggested responses for the agent to select. These responses are automatically generated by crowdsourcing every utterance that every agent has created and then picking those that apply to the particular context, with digital coaches editing the language and content of the preferred responses as necessary.

In the short term, this assists the agent by providing context and potential responses to queries and, in the longer term as this process repeats over queries of the same type, Amelia then learns the correct answers, and ultimately this becomes a new Amelia skill.

Co-pilot is still at an early stage with lots of developments to come and, during 2019, the Co-pilot functionality will be enhanced to recommend responses based on natural language similarity, enable modification of responses by the agent prior to sending, and enable agents to trigger partial automated conversations.

This increased co-working between humans and digital chat agents is key to the future of Amelia since it starts to position Amelia as an integral part of the future contact center journey rather than as a standalone automation tool.

Building Use Cases & Partner Program to Reduce Time to Value

Traditionally, Amelia has been a great cognitive chat technology but a relatively heavy-duty technology seeking a use case rather than an easily implemented general purpose tool, like the majority of the RPA products.

In response, IPsoft is treading the same path as the majority of automation vendors and is looking to encourage organizations (well at least mid-sized organizations) to hire a “digital worker” rather than build their own. The company estimates that its digital marketplace “1Store” already contains 672 digital workers, which incorporate back-office automation in addition to the Amelia conversational AI interface. For example, for HR, 1Store offers “digital workers” with the following “skills”: absence manager, benefits manager, development manager, onboarding specialist, performance record manager, recruiting specialist, talent management specialist, time & attendance manager, travel & expense manager, and workforce manager.

At the same time, IPsoft is looking to increase the proportion of sales and service through channel partners. Product sales currently make up 56% of IPsoft revenue, with 44% from services. However, the company is looking to steer this ratio further in support of product, by targeting 60% per annum growth in product sales and increasing the proportion of personnel, currently approx. two-thirds, in product-related positions with a contribution from reskilling existing services personnel.

IPsoft has been late to implement its partner strategy relative to other automation software vendors, attributing this early caution in part to the complexity of early implementations of Amelia. Early partners for IPcenter included IBM and NTT DATA, who embedded IPsoft products directly within their own outsourcing services and were supported with “special release overlays” by IPsoft to ensure lack of disruption during product and service upgrades. This type of embedded solution partnership is now increasingly likely to expand to the major CX services vendors as these contact center outsourcers look to assist their clients in their automation strategies.

So, while direct sales still dominate partner sales, IPsoft is now recruiting a partner/channel sales team with a view to reversing this pattern over the next few years. IPsoft has now established a partner program targeting alliance and advisory (where early partners included major consultancies such as Deloitte and PwC), implementation, solution, OEM, and education partners.

1Desk-based End-to-End Automation is the Future for IPsoft

IPsoft has about 600 clients, including approx. 160 standalone Amelia clients, and about a dozen deployments of 1Desk. However, 1Desk is the fastest-growing part of the IPsoft business with 176 enterprises in the pipeline for 1Desk implementations, and IPsoft increasingly regards the various 1Desk solutions as its future.

IPsoft is positioning 1Desk by increasingly talking about ROAI (the return on AI) and suggesting that organizations can achieve 35% ROAI (rather than the current 6%) if they adopt integrated end-to-end automation and bypass intermediary systems such as ticketing systems.

Accordingly, IPsoft is now offering end-to-end intelligent automation capability by combining the Amelia cognitive agent with “an autonomic backbone” courtesy of IPsoft’s IPcenter heritage and with its own RPA technology (1RPA) to form 1Desk.

1Desk, in its initial form, is largely aimed at internal SSC functions including ITSM, HR, and F&A. However, over the next year, it will increasingly be tailored to provide solutions for specific industries. The intent is to enable about 70% of the solution to be implemented “out of the box”, with vanilla implementations taking weeks rather than many months and with completely new skills taking approx. three months to deploy.

The initial industry solution from IPsoft is 1Bank. As the name implies, 1Bank has been developed as a conversational banking agent for retail banking and contains preformed solutions/skills covering the account representative, e.g. for support with payments & bills; the mortgage processor; the credit card processor; and the personal banker, to answer questions about products, services, and accounts.

1Bank will be followed during 2019 by solutions for healthcare, telecoms, and travel.

IPSoft's Amelia

NelsonHall recently attended the IPSoft analyst event in New York, with a view to understanding the extent to which the company’s shift into customer service has succeeded. It immediately became clear that the company is accelerating its major shift in focus of recent years from autonomics to cognitive agents. While IPSoft began in autonomics in support of IT infrastructure management, and many Amelia implementations are still in support of IT service activities, IPSoft now clearly has its sights on the major prize in the customer service (and sales) world, positioning its Amelia cognitive agent as “The Most Human AI” with a much greater range of emotional, contextual, and process “intelligence” than the perceived competition in the form of chatbots.

Key Role for AI is Human Augmentation Not Human Replacement

IPSoft was at pains to point out that AI was the future and that human augmentation was a major trend that would separate the winners from the losers in the corporate world. In demonstrating the point that AI was the future, Nick Bostrom from the Future of Humanity Institute at Oxford University discussed the result of a survey of ~300 AI experts to identify the point at which high-level machine intelligence (the point at which unaided machines can accomplish any task better and more cheaply than human workers) would be achieved. This survey concluded that there was a 50% probability that this would be achieved within 50 years and a 25% probability that it would happen within 20-25 years.

On a more conciliatory basis, Dr. Michael Chui suggested that AI was essential to maintaining living standards and that the key role for AI for the foreseeable future was human augmentation rather than human replacement.

According to McKinsey Global Institute (MGI), “about half the activities people are paid almost $15tn in wages to do in the global economy have the potential to be automated by adapting currently demonstrated technology. While less than 5% of all occupations can be automated entirely, about 60% of all occupations have at least 30% of constituent activities that could be automated. More occupations will change than can be automated away.”

McKinsey argues that automation is essential to maintain GDP growth and standards of living, estimating that of the 3.5% per annum GDP growth achieved on average over the past 50 years, half was derived from productivity growth and half from growth in employment. Assuming that growth in employment will largely cease as populations age over the next 50 years, then an increase/approximate doubling in automation-driven productivity growth will be required to maintain the historical levels of GDP growth.
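The "approximate doubling" follows from simple arithmetic on the figures quoted above. The sketch below works it through under one simplifying assumption that is not stated in the source: that GDP growth is treated as the plain sum of productivity growth and employment growth.

```python
def required_productivity_multiple(
    gdp_growth_pct: float,
    productivity_share: float,
    future_employment_growth_pct: float = 0.0,
) -> float:
    """How many times historical productivity growth must rise to hold
    GDP growth constant, assuming GDP growth = productivity + employment."""
    historical_productivity = gdp_growth_pct * productivity_share
    required_productivity = gdp_growth_pct - future_employment_growth_pct
    return required_productivity / historical_productivity


# Figures quoted from McKinsey: 3.5% per annum, split half and half.
multiple = required_productivity_multiple(3.5, 0.5)
print(f"Productivity growth must rise {multiple:.1f}x")
```

With employment growth at zero, productivity growth has to rise from 1.75% to 3.5% per annum, which is the doubling the article refers to.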

Providing Empathetic Conversations Rather than Transactions

The guiding principles behind Amelia are to provide conversations rather than transactions, to understand customer intent, and to deliver a to-the-point and empathetic response. Overall, IPSoft is looking to position Amelia as a cognitive agent at the intersection of systems of engagement, systems of record, and data platforms, incorporating:

- Conversational intelligence, encompassing intelligent understanding, empathetic response, & multi-channel handling. IPSoft has recently added additional machine learning and deep learning

- Advanced analytics, encompassing performance analytics, decision intelligence, and data visualization

- Smart workflow, encompassing dynamic process execution and integration hub, with UI integration (planned)

- Experience management, to ensure contextual awareness

- Supervised automated learning, encompassing automated training, observational learning, and industry solutions.

For example, it is possible to upload documents and SOPs in support of automated training and Amelia will advise on the best machine learning algorithms to be used. Using supervised learning, Amelia submits what it has learned to the SME for approval but only uses this new knowledge once approved by the SME to ensure high levels of compliance. Amelia also learns from escalations to agents and automated consolidation of these new learnings will be built into the next Amelia release.

IPSoft is continuing to develop an even greater range of algorithms by partnering with universities. These algorithms remain usable across all organizations with the introduction of customer data to these algorithms leading to the development of client-specific customer service models.

Easier to Teach Amelia Banking Processes than a New Language

An excellent example of the use of Amelia was discussed by a Nordic bank. The bank initially applied Amelia to its internal service desk, starting with a pilot in support of 600 employees in 2016 covering activities such as unlocking accounts and password guidance, before rolling out to 15,000 employees in Spring 2017. This was followed by the application of Amelia to customer service with a silent launch taking place in December 2016 and Amelia being rolled out in support of branch office information, booking meetings, banking terms, products and services, mobile bank IDs, and account opening. The bank had considered using offshore personnel but chose Amelia based on its potential ability to roll out in a new country in a month and its 24x7 availability. Amelia is currently used by ~300 customers per day over chat.

The bank was open about its use of AI with its customers on its website, indicating that its new chat stream was based on the use of “digital employees with artificial intelligence”. The bank found that while customers, in general, seemed pleased to interact via chat, less expectedly, use of AI led to totally new customer behaviors, both good and bad, with some people who hated the idea of use of robots acting much more aggressively. On the other hand, Amelia was highly successful with individuals who were reluctant to phone the bank or visit a bank branch.

Key lessons learnt by the bank included:

- The high level of acceptance of Amelia by customer service personnel who regarded Amelia as taking away boring “Monday-morning” tasks allowing them to focus on more meaningful conversations with customers rather than threatening their livelihoods

- It was easier than expected to teach Amelia the banking processes, but harder than expected to convert to a new language such as Swedish, with the bank perceiving that each language is essentially a different way of thinking. Amelia was perceived to be optimized for English and converting Amelia to Swedish took three months, while training Amelia on the simple banking processes took a matter of days.

Amelia is now successfully handling ~90% of requests, though ~30% of these are intentionally routed to a live agent, for example for deeper mortgage discussions.

Amelia Avatar Remains Key to IPSoft Branding

While the blonde, blue-eyed nature of the Amelia avatar is likely to be highly acceptable in Sweden, this stereotype could potentially be less acceptable elsewhere and the tradition within contact centers is to try to match the nature of the agent with that of the customer. While Amelia is clearly designed to be highly empathetic in terms of language, it may be more discordant in terms of appearance.

However, the appearance of the Amelia avatar remains key to IPSoft’s branding. While IPSoft is redesigning the Amelia avatar to capture greater hand and arm movements for greater empathy, and some adaptation of clothing and hairstyle is permitted to reflect brand value, IPSoft is not currently prepared to allow fundamental changes to gender or skin color, or to allow multiple avatars to be used to develop empathy with individual customers. This might need to change as IPSoft becomes more confident of its brand and the market for cognitive agents matures.

Partnering with Consultancies to Develop Horizontal & Vertical IP

At present, Amelia is largely vanilla in flavor and the bulk of implementations are being conducted by IPSoft itself. IPSoft estimates that Amelia has been used in 50 instances, covering ~60% of customer requests with ~90% accuracy and, overall, IPSoft estimates that it takes 6 months to assist an organization to build an Amelia competence in-house, 9 days to go-live, and 6-9 months to scale up from an initial implementation.

Accordingly, it is key to the future of IPSoft that Amelia can develop a wide range of semi-productized horizontal and vertical use cases and that partners can be trained and leveraged to handle the bulk of implementations.

At present, IPSoft estimates that its revenues are 70:30 services:product, with product revenues growing faster than services revenues. While IPSoft is currently carrying out the majority (~60%) of Amelia implementations itself, it is increasingly looking to partner with the major consultancies such as Accenture, Deloitte, PwC, and KPMG to build baseline Amelia products around horizontals and industry-specific processes, for example, working with Deloitte in HR. In addition, IPSoft has partnered with NTT in Japan, with NTT offering a Japanese-language, cloud-based virtual assistant, COTOHA.

IPSoft’s pricing mechanisms consist of:

- A fixed price per PoC development

- Production environments: charge for implementation followed by a price per transaction.

While Amelia is available in both cloud and onsite, IPSoft perceives that the major opportunities for its partners lie in highly integrated implementations behind the client firewall.

In conclusion, IPSoft is now making considerable investments in developing Amelia with the aim of becoming the leading cognitive agent for customer service and the high emphasis on “conversations and empathic responses” differentiates the software from more transactionally-focused cognitive software.

Nonetheless, it is early days for Amelia. The company is beginning to increase its emphasis on third-party partnerships, which will be key to scaling adoption of the software. However, these are currently focused around the major consultancies. This is fine while cognitive agents are in the first throes of adoption, but downstream IPSoft is likely to need the support of, and partnerships with, the major contact center outsourcers who currently control around a third of customer service spend and who are influential in assisting organizations in their digital customer service transformations.

As well as conducting extensive research into RPA and AI, NelsonHall is also chairing international conferences on the subject. In July, we chaired SSON’s second RPA in Shared Services Summit in Chicago, and we will also be chairing SSON’s third RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December. In the build-up to the December event we thought we would share some of our insights into rolling out RPA. These topics were the subject of much discussion in Chicago earlier this year and are likely to be the subject of further in-depth discussion in Atlanta (Braselton).

This is the third and final blog in a series presenting key guidelines for organizations embarking on an RPA project, covering project preparation, implementation, support, and management. Here I take a look at the stages of deployment, from pilot development, through design & build, to production, maintenance, and support.

Piloting & deployment – it’s all about the business

When developing pilots, it’s important to recognize that the organization is addressing a business problem and not just applying a technology. Accordingly, organizations should consider how they can make a process better and achieve service delivery innovation, and not just service delivery automation, before they proceed. One framework that can be used in analyzing business processes is the ‘eliminate/simplify/standardize/automate’ approach.

While organizations will probably want to start with some simple and relatively modest RPA pilots to gain quick wins and acceptance of RPA within the organization (and we would recommend that they do so), it is important as the use of RPA matures to consider redesigning and standardizing processes to achieve maximum benefit. So begin with simple manual processes for quick wins, followed by more extensive mapping and reengineering of processes. Indeed, one approach often taken by organizations is to insert robotics and then use the metrics available from robotics to better understand how to reengineer processes downstream.

For early pilots, pick processes where the business unit is willing to take a ‘test & learn’ approach, and live with any need to refine the initial application of RPA. Some level of experimentation and calculated risk taking is OK – it helps the developers to improve their understanding of what can and cannot be achieved from the application of RPA. Also, quality increases over time, so in the medium term, organizations should increasingly consider batch automation rather than in-line automation, and think about tool suites and not just RPA.

Communication remains important throughout, and the organization should be extremely transparent about any pilots taking place. RPA does require a strong emphasis on, and appetite for, management of change. In terms of effectiveness of communication and clarifying the nature of RPA pilots and deployments, proof-of-concept videos generally work a lot better than the written or spoken word.

Bot testing is also important, and organizations have found that bot testing is different from waterfall UAT. Ideally, bots should be tested using a copy of the production environment.

Access to applications is potentially a major hurdle, with organizations needing to establish virtual employees as a new category of employee and give the appropriate virtual user ID access to all applications that require a user ID. The IT function must be extensively involved at this stage to agree access to applications and data. In particular, they may be concerned about the manner of storage of passwords. What’s more, IT personnel are likely to know about the vagaries of the IT landscape that are unknown to operations personnel!

Reporting, contingency & change management key to RPA production

At the production stage, it is important to implement an RPA reporting tool to:

- Monitor how the bots are performing

- Provide an executive dashboard with one version of the truth

- Ensure high license utilization.

There is also a need for contingency planning to cover situations where something goes wrong and work is not allocated to bots. Contingency plans may include co-locating a bot support person or team with operations personnel.

The organization also needs to decide which part of the organization will be responsible for bot scheduling. This can either be overseen by the IT department or, more likely, the operations team can take responsibility for scheduling both personnel and bots. Overall bot monitoring, on the other hand, will probably be carried out centrally.

It remains common practice, though not universal, for RPA software vendors to charge on the basis of the number of bot licenses. Accordingly, since an individual bot license can be used in support of any of the processes automated by the organization, organizations may wish to centralize an element of their bot scheduling to optimize bot license utilization.
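The effect of pooling licenses can be shown with a rough calculation. The sketch below is a simplification under stated assumptions: the process names and workloads are made up, each license is assumed to be usable 24 hours a day, and scheduling windows, peaks, and failures are ignored.

```python
import math
from typing import Dict


def licenses_needed(hours_by_process: Dict[str, float],
                    hours_per_license: float = 24.0,
                    pooled: bool = True) -> int:
    """Bot licenses required per day, pooled centrally or dedicated per process."""
    if pooled:
        # Any license can serve any process, so only total workload matters.
        return math.ceil(sum(hours_by_process.values()) / hours_per_license)
    # Dedicated: each process rounds up to whole licenses on its own.
    return sum(math.ceil(h / hours_per_license) for h in hours_by_process.values())


def utilization(hours_by_process: Dict[str, float], licenses: int,
                hours_per_license: float = 24.0) -> float:
    """Share of licensed bot hours actually used."""
    if licenses == 0:
        return 0.0
    return sum(hours_by_process.values()) / (licenses * hours_per_license)


# Hypothetical daily bot workload in hours for three automated processes.
workload = {"invoice_matching": 10.0, "journal_entries": 6.0, "vendor_setup": 5.0}

pooled = licenses_needed(workload, pooled=True)
dedicated = licenses_needed(workload, pooled=False)
```

In this made-up case, one pooled license covers 21 hours of work that would otherwise occupy three dedicated licenses, which is the utilization argument for centralizing at least part of the scheduling.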

At the production stage, liaison with application owners is very important to proactively identify changes in functionality that may impact bot operation, so that these can be addressed in advance. Maintenance is often centralized as part of the automation CoE.

Find out more at the SSON RPA in Shared Services Summit, 1st to 2nd December

NelsonHall will be chairing the third SSON RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December, and will share further insights into RPA, including hand-outs of our RPA Operating Model Guidelines. You can register for the summit here.

Also, if you would like to find out more about NelsonHall’s extensive program of RPA & AI research, and get involved, please contact Guy Saunders.

Plus, buy-side organizations can get involved with NelsonHall’s Buyer Intelligence Group (BIG), a buy-side only community which runs regular webinars on RPA, with your buy-side peers sharing their RPA experiences. To find out more, contact Matthaus Davies.

This is the final blog in a three-part series. See also:

Part 1: How to Lay the Foundations for a Successful RPA Project

This is the second in a series of blogs presenting key guidelines for organizations embarking on an RPA project, covering project preparation, implementation, support, and management. Here I take a look at how to assess and prioritize RPA opportunities prior to project deployment.

Prioritize opportunities for quick wins

An enterprise-level governance committee should be involved in the assessment and prioritization of RPA opportunities, and this committee needs to establish a formal framework for project/opportunity selection. For example, a simple but effective framework is to evaluate opportunities based on their:

- Potential business impact, including RoI and FTE savings

- Level of difficulty (preferably low)

- Sponsorship level (preferably high).

The business units should be involved in the generation of ideas for the application of RPA, and these ideas can be compiled in a collaboration system such as SharePoint prior to their review by global process owners and subsequent evaluation by the assessment committee. The aim is to select projects that have a high business impact and high sponsorship level but are relatively easy to implement. As is usual when undertaking new initiatives or using new technologies, aim to get some quick wins and start at the easy end of the project spectrum.
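The selection framework above can be sketched as a simple scoring exercise. This is a minimal illustration, not a prescribed method: the 1 to 5 scales, the equal weighting, and the example opportunities are all assumptions, and a governance committee would set its own.

```python
from dataclasses import dataclass

@dataclass
class Opportunity:
    name: str
    impact: int       # business impact, 1 (low) to 5 (high); assumed scale
    difficulty: int   # level of difficulty, 1 (easy) to 5 (hard); assumed scale
    sponsorship: int  # sponsorship level, 1 (weak) to 5 (strong); assumed scale

def priority_score(opp: Opportunity) -> int:
    # Reward impact and sponsorship; invert difficulty so easier scores higher.
    return opp.impact + opp.sponsorship + (6 - opp.difficulty)

def rank(opps):
    """Return opportunities ordered from highest to lowest priority."""
    return sorted(opps, key=priority_score, reverse=True)

# Hypothetical candidate ideas submitted by business units.
candidates = [
    Opportunity("Invoice data entry", impact=4, difficulty=2, sponsorship=5),
    Opportunity("Contract review", impact=5, difficulty=5, sponsorship=3),
    Opportunity("Address changes", impact=2, difficulty=1, sponsorship=3),
]

for opp in rank(candidates):
    print(opp.name, priority_score(opp))
```

With these illustrative inputs, the high-impact but difficult "Contract review" ranks last, which reflects the advice to start at the easy end of the project spectrum.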

However, organizations also recognize that even those ideas and suggestions that have been rejected for RPA are useful in identifying process pain points, and one suggestion is to pass these ideas to the wider business improvement or reengineering group to investigate alternative approaches to process improvement.

Target stable processes

Other considerations that need to be taken into account include the level of stability of processes and their underlying applications. Clearly, basic RPA does not readily adapt to significant process change, and so, to avoid excessive levels of maintenance, organizations should only choose relatively stable processes based on a stable application infrastructure. Processes that are subject to high levels of change are not appropriate candidates for the application of RPA.

Equally, it is important that the RPA implementers have permission to access the required applications from the application owners, who can initially have major concerns about security, and that the RPA implementers understand any peculiarities of the applications and know about any upgrades or modifications planned.

The importance of IT involvement

It is important that the IT organization is involved, as their knowledge of the application operating infrastructure and any forthcoming changes to applications and infrastructure need to be taken into account at this stage. In particular, it is important to involve identity and access management teams in assessments.

Also, the IT department may well take the lead in establishing RPA security and infrastructure operations. Other key decisions that require strong involvement of the IT organization include:

- Identity security

- Ownership of bots

- Ticketing & support

- Selection of RPA reporting tool.

Find out more at the SSON RPA in Shared Services Summit, 1st to 2nd December

NelsonHall will be chairing the third SSON RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December, and will share further insights into RPA, including hand-outs of our RPA Operating Model Guidelines. You can register for the summit here.

Also, if you would like to find out more about NelsonHall’s extensive program of RPA & AI research, and get involved, please contact Guy Saunders.

Plus, buy-side organizations can get involved with NelsonHall’s Buyer Intelligence Group (BIG), a buy-side only community which runs regular webinars on sourcing topics, including the impact of RPA. The next RPA webinar will be held later this month: to find out more, contact Guy Saunders.

In the third blog in the series, I will look at deploying an RPA project, from developing pilots, through design & build, to production, maintenance, and support.


As well as conducting extensive research into RPA and AI, NelsonHall is also chairing international conferences on the subject. In July, we chaired SSON’s second RPA in Shared Services Summit in Chicago, and we will also be chairing SSON’s third RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December. In the build-up to the December event we thought we would share some of our insights into rolling out RPA. These topics were the subject of much discussion in Chicago earlier this year and are likely to be the subject of further in-depth discussion in Atlanta (Braselton).

This is the first in a series of blogs presenting key guidelines for organizations embarking on RPA, covering establishing the RPA framework, RPA implementation, support, and management. First up, I take a look at how to prepare for an RPA initiative, including establishing the plans and frameworks needed to lay the foundations for a successful project.

Getting started – communication is key

Essential action items for organizations prior to embarking on their first RPA project are:

- Preparing a communication plan

- Establishing a governance framework

- Establishing an RPA center of excellence

- Establishing a framework for allocation of IDs to bots.

Communication is key to ensuring that use of RPA is accepted by both executives and staff alike, with stakeholder management critical. At the enterprise level, the RPA/automation steering committee may involve:

- COOs of the businesses

- Enterprise CIO.

Start with awareness training to get support from departments and C-level executives. Senior leader support is key to adoption. Videos demonstrating RPA are potentially much more effective than written papers at this stage. Important considerations to address with executives include:

- How much control am I going to lose?

- How will use of RPA impact my staff?

- How/how much will my department be charged?

When communicating to staff, remember to:

- Differentiate between value-added and non value-added activity

- Communicate the intention to use RPA as a development opportunity for personnel. Stress that RPA will be used to facilitate growth, to do more with the same number of people, and give people developmental opportunities

- Use the same group of people to prepare all communications, to ensure consistency of messaging.

Establish a central governance process

It is important to establish a strong central governance process to ensure standardization across the enterprise, and to ensure that the enterprise is prioritizing the right opportunities. It is also important that IT is informed of, and represented within, the governance process.

An example of a robotics and automation governance framework established by one organization was to form:

- An enterprise robotics council, responsible for the scope and direction of the program, together with setting targets for efficiency and outcomes

- A business unit governance council, responsible for prioritizing RPA projects across departments and business units

- An RPA technical council, responsible for RPA design standards, best practice guidelines, and principles.

Avoid RPA silos – create a center of excellence

RPA is a key strategic enabler, so use of RPA needs to be embedded in the organization rather than siloed. Accordingly, the organization should consider establishing an RPA center of excellence, encompassing:

- A centralized RPA & tool technology evaluation group. It is important not to assume that a single RPA tool will be suitable for all purposes and also to recognize that ultimately a wider toolset will be required, encompassing not only RPA technology but also technologies in areas such as OCR, NLP, machine learning, etc.

- A best practice group for establishing standards, such as naming standards, to be applied in RPA across processes and business units

- An automation lead for each tower, to manage the RPA project pipeline and priorities for that tower

- IT liaison personnel.

Establish a bot ID framework

While establishing a framework for allocation of IDs to bots may seem trivial, it has proven not to be so for many organizations where, for example, including ‘virtual workers’ in the HR system has proved insurmountable. In some instances, organizations have resorted to basing bot IDs on the IDs of the bot developer as a short-term fix, but this approach is far from ideal in the long-term.

Organizations should also make centralized decisions about bot license procurement, and here the IT department, which has experience in software selection and purchasing, should be involved. In particular, the IT department may be able to play a substantial role in RPA software procurement/negotiation.

Find out more at the SSON RPA in Shared Services Summit, 1st to 2nd December

NelsonHall will be chairing the third SSON RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December, and will share further insights into RPA, including hand-outs of our RPA Operating Model Guidelines. You can register for the summit here.

Also, if you would like to find out more about NelsonHall’s extensive program of RPA & AI research, and get involved, please contact Guy Saunders.

Plus, buy-side organizations can get involved with NelsonHall’s Buyer Intelligence Group (BIG), a buy-side only community which runs regular webinars on sourcing topics, including the impact of RPA. The next RPA webinar will be held in November: to find out more, contact Matthaus Davies.

In the second blog in this series, I will look at RPA need assessment and opportunity identification prior to project deployment.


In its early days, BPO was a linear and lengthy process, with knowledge transfer followed by labor arbitrage, followed by process improvement and standardization, followed by application of tools and automation. This process typically took years, often the full lifetime of the initial contract. More recently, BPO has sped up, with standard global process models, supported by elements of automation, being implemented in conjunction with the initial transition and deployment of global delivery. This “time to value” is now being accelerated further to enable a full range of transformation to be applied in months rather than years. Overall, BPO is moving from a slow-moving mechanism for transformation to High Velocity BPO. Why take years when months will do?

Some of the key characteristics of High Velocity BPO are shown in the table below:

| Attribute | Traditional BPO | High-Velocity BPO |
|---|---|---|
| Objective | Help the purchaser fix their processes | Help the purchaser contribute to wider business goals |
| Measure of success | Process excellence | Business success, faster |
| Importance of cost reduction | High | Greater, faster |
| Geographic coverage | Key countries | Global, now |
| Process enablers & technologies | High dependence on third-parties | Own software components supercharged with RPA |
| Process roadmaps | On paper | Built into the components |
| Compliance | Reactive compliance | Predictive GRC management |
| Analytics | Reactive process improvement | Predictive & driving the business |
| Digital | A front-office “nice-to-have” | Multi-channel and sensors fundamental |
| Governance | Process-dependent | GBS, end-to-end KPIs |

As a starting point, High Velocity BPO no longer focuses on process excellence targeted at a narrow process scope. Its ambitions are much greater, namely to help the client achieve business success faster, and to help the purchaser contribute not just to their own department but to the wider business goals of the organization, driven by monitoring against end-to-end KPIs, increasingly within a GBS operating framework.

However, this doesn’t mean that the need for cost reduction has gone away. It hasn’t. In fact, the need for cost reduction is now greater and faster than ever. And in terms of delivery frameworks, the mish-mash of third-party tools and enablers is increasingly likely to be replaced by an integrated combination of proprietary software components, probably built on Open Source software, with built-in process roadmaps, real-time reporting and analytics, and supercharged with RPA.

Furthermore, the role of analytics will no longer be reactive process improvement but predictive and driving real business actions, while compliance will also become even more important.

But let’s get back to the disruptive forces impacting BPO. What forms will the resulting disruption take in both the short-term and the long-term?

| Disruption | Short-term impact | Long-term impact |
|---|---|---|
| Robotics | Gives buyers 35% cost reduction fast | No significant impact on process models or technology |
| Analytics | Already drives process enhancement | Becomes much more instrumental in driving business decisions; potentially makes BPO vendors more strategic |
| Labor arbitrage on labor arbitrage | Ongoing reductions in service costs and employee attrition | “Domestic BPO markets” within emerging economies become major growth opportunity |
| Digital | Improved service at reduced cost | Big opportunity to combine voice, process, technology, & analytics in a high-value end-to-end service |
| BPO “platform components” | Improved process coherence | BPaaS service delivery without the third-party SaaS |
| The Internet of Things | Slow build into areas like maintenance | Huge potential to expand the BPO market in areas such as healthcare |
| GBS | Help organizations deploy GBS | Improved end-to-end management and increased opportunity; reduced friction of service transfer |

Well, robotics is here now, moving at speed, and giving a short-term impact of around 35% cost reduction where applied. It is also fundamentally changing the underlying commercial models away from FTE-based pricing. However, robotics does not involve change in process models or underlying systems and technology, and so is largely short-term in its impact and is a cost play.

Digital and analytics are much more strategic and longer lasting in their impact, enabling vendors to become more strategic partners by delivering higher value services and driving next best actions and operational business decisions with very high levels of revenue impact.

BPO services around the Internet of Things will be a relatively slow burn in comparison, but with the potential to multiply the market for industry-specific BPO services many times over and to enable BPO to move into critical services with real life-or-death implications.

So what is the overall impact of these disruptive forces on BPO? Well, while two of the seven listed above have the potential to reduce BPO revenues in the short-term, the other five have the potential to make BPO more strategic in the eyes of buyers and significantly increase the size and scope of the global BPO market.

Part 1 The Robots are Coming - Is this the end of BPO?

Part 2 Analytics is becoming all-pervasive and increasingly predictive

Part 3 Labor arbitrage is dead - long live labor arbitrage

Part 4 Digital renews opportunities in customer management services

Part 6 The Internet of Things: Is this a New Beginning for Industry-Specific BPO?

However, BPO vendors have often tended to use their own proprietary tools in key areas such as workflow, which has had the advantage of enabling them to offer a lower price point than via COTS workflow and also enabled them to achieve more integrated real-time reporting and analytics. This approach of developing pre-assembled components is being further accelerated in conjunction with cloud-based provisioning.

Now, BPO vendors are starting to take this logic a step further and pre-assemble large numbers of BPO platform components as an alternative to COTS software. This approach potentially enables them to retain the IP in-house, an important factor in areas like robotics and AI, reduce their cost to serve by eliminating the cost of third-party licences, and achieve a much more tightly integrated and coherent combination of pre-built processes, dashboards and analytics supported by underlying best practice process models.

It also potentially enables them to offer true BPaaS for the first time and to begin to move to a wider range of utility offerings - the Nirvana for vendors.

Coming next: The Internet of Things – Is this a new beginning for industry-specific BPO?

Previous blogs in this series:

Part 1 The Robots are Coming - Is this the end of BPO?

Part 2 Analytics is becoming all-pervasive and increasingly predictive

Part 3 Labor arbitrage is dead - long live labor arbitrage

Part 4 Digital renews opportunities in customer management services

However, the impact of digital is such that it increases the need for voice and data convergence. A common misconception in customer service is that the number of transactions is going down. It isn’t; it is increasing. So while the majority of interactions will be handled by digital rather than voice within three years, voice will not disappear. Indeed, one disruptive impact of digital is that it increases the importance of voice calls, and with voice calls now more complex and emotional, voice agents need to be up-skilled to meet this challenge. So the voice aspect of customer service is no longer focused on cost reduction. It is now focused on adding value to complex and high value transactions, with digital largely responsible for delivering the cost reduction element.

So what are the implications for BPO vendors supporting the front-office? Essentially, vendors now need a strong combination of digital, consulting, automation, and voice capability and:

- Need to be able to provide a single view of the customer & linked multi-channel delivery

- Need to be able to analyze and optimize customer journeys

- Need the analytics to be able to recommend next best actions both to agents and through digital channels.

On the people side, agent recruiting, training, and motivation become more important than ever before, now complicated by the fact that differing channels need different agent skills and characteristics. For example, the recruiting criteria for web chat agents are very different from those for voice agents. In addition, the web site is now a critical part of customer service delivery, and self-serve and web forms, traditionally outside the mandate of customer management services vendors, are a disruptive force that now needs to be integrated with both voice and other digital channels to provide a seamless end-to-end customer journey. This remains an organizational challenge for both organizations and their suppliers.

Coming next – the impact of the move to platform components

Disruptive Forces and Their Impact on BPO - previous articles in series

Part 1 The Robots are Coming - Is this the end of BPO?

Part 2 Analytics is becoming all-pervasive and increasingly predictive

Part 3 Labor arbitrage is dead - long live labor arbitrage


One side of labor arbitrage within labor arbitrage is relatively defensive, but in spite of automation and robotics, mature “International BPO” services are now being transferred to tier-n cities. Here, labor arbitrage within labor arbitrage offers lower price points, reduced attrition, and business continuity. The downside is that travel to these locations might be slightly more challenging than some clients are used to. Nonetheless, tier-n locations are an increasingly important part of the global delivery mix, even for major outsourcers centered around mature geographies such as North America and Europe, and they become even more important as doing business in emerging markets becomes ever more business-critical to these multinationals.

However, there’s also a non-defensive side to use of tier-n cities, which is to support growth in the domestic markets of emerging economies, which will be an increasingly important part of the BPO market over the coming years. Lots of activity of this type is already underway in India, but let’s take South Africa as an example, where cities such as Port Elizabeth and Jo’berg are emerging as highly appropriate for supporting local markets cost-effectively in local languages. So, just when you thought all BPO activity had centralized in a couple of major hubs, the spokes are fighting back and becoming more strategic.

But let’s get back to a sexier topic than labor arbitrage. The next blog looks at the impact of Digital.

Part 1 The Robots are Coming - Is this the end of BPO?

Part 2 Analytics is becoming all-pervasive and increasingly predictive

However, analytics is now becoming much more pervasive, much more embedded in processes, and much more predictive & forward-looking in terms of recommending immediate business actions and not just process improvements, as shown below:

| BPO Activity | Areas where analytics is being applied |
|---|---|
| Contact center | Speech and text analytics; social media monitoring & lead generation |
| Marketing operations | Price & promotion analytics |
| Financial services | Model validation |
| Procurement | Spend analytics |

Indeed, it’s increasingly important that real-time, drill-down dashboards are built into all services, and what-if modelling is becoming increasingly common here in support of, for example, supply chain optimization.

Analytics is also helping BPO to move up the value chain and open up new areas of possibility such as marketing operations, where traditional areas like store performance reporting are now being supplemented by real-time predictive analytics in support of identifying the most appropriate campaign messaging and the most effective media, or mix of media, for issuing this messaging. So while analytics is still being used in traditional areas such as spend analytics to drive down costs, it is increasingly helping organizations take real-time decisions that have high impact on the top-line. Much more strategic and impactful.

The next disruptive force to be covered is less obvious - labor arbitrage within labor arbitrage.

In the current market, the need to reduce cost while maintaining or enhancing customer satisfaction is still the primary driver of transformational CMS contracts, but in future we expect to see pure cost take-out give way to revenue generation (currently present in 14% of transformational contracts) as the primary driver.

For now, however, leveraging CMS as a revenue generator may still be some way off, and vendors are looking at a variety of process improvement methods to deliver transformation, including use of lean methodologies, call deflection, hiring based on behavioral characteristics, and improved agent training.

One such example is Serco’s latest win, a large multi-channel transformational CMS award by fashion retailer JD Williams. Under the terms of the contract Serco will acquire control of JD Williams’s Manchester contact center which houses 550 agents (~80% of the company’s CMS resources) in January 2015 for a ten-year period. Services in scope include inbound sales support, billing and multi-channel customer care. Serco will use the client’s legacy systems, implementing its EpiCentre unified channel agent dashboard.

At present, the contact center is at 70% capacity. Serco aims to use the remaining space to house additional clients as well as handle expected further business from JD Williams over the next two years. The potential agent expansion could be ~300 FTEs.

The transformation delivered will focus on process improvements that can be made by leveraging Serco’s experience in managing an online retail catalog for Shop Direct. JD Williams’s online business currently accounts for between 55% and 60% of its revenues, but with process improvement the expectation is that this will reach a ceiling of ~70% over the next two years (with the company’s catalog division still actively supporting older demographics).

In common with most transformation plays in the current market, JD Williams is aiming primarily at removing cost through increased operational and process efficiencies, and a company the size of Serco looks well placed to deliver on high margin transformational CMS contracts such as this.

We expect vendors’ transformational CMS efforts will shift towards benchmark-led frameworks and roadmaps, supported by greater automation. However, the CMS market is still some way behind F&A and HRO, where established transformational frameworks are already in place. Broad-scope BPO vendors are beginning to transition models from these areas, but for now, traditional process improvement remains the gateway to transformation in CMS.

NelsonHall’s latest CMS market analysis report, ‘Targeting Transformational CMS’, is now available, along with a NEAT tool, which enables sourcing managers to assess and compare the performance of vendors offering transformational CMS services.
