WNS has a highly developed property & casualty (P&C) insurance practice handling 30 million claims transactions and ~$12 billion in claims spend annually. The company has extensive capability in property & casualty supporting motor, property, casualty, employer and public liability for insurers, fleet operators, MGAs, global corporates, and municipal authorities. WNS has strong capability in the Lloyd’s & London market supporting specialty lines products. The company has developed a range of proprietary solutions in support of its P&C and Speciality BPM business.

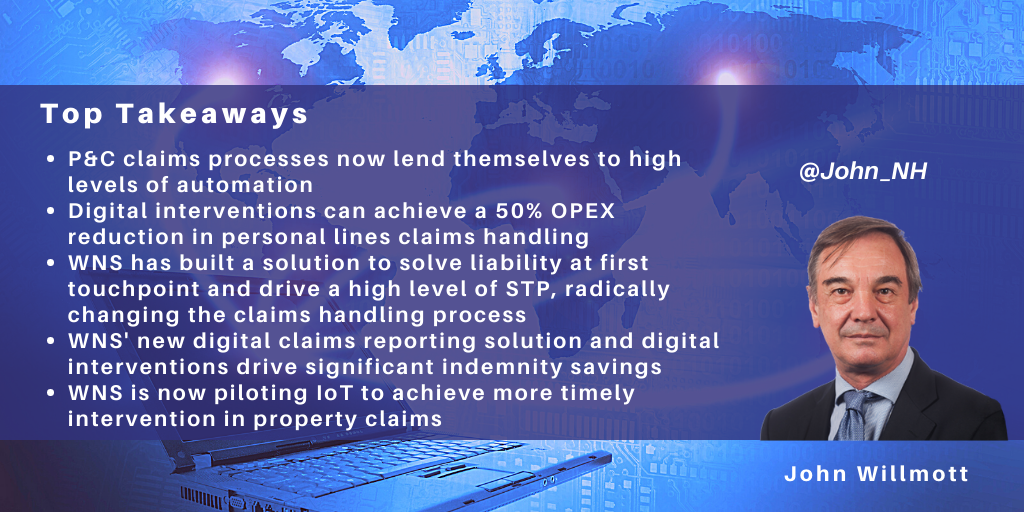

The company has now taken its proposition to the next level by reinventing, automating, and digitizing its claims handling capability to assist insurers in competing with the new generation of digital disrupters.

WNS Seeking to Reengineer P&C Claims Handling to Touchless Process: Proposition – ‘Simplified Claims’

WNS’ strategy is to assist its P&C insurance clients in moving to contactless, touchless claims, with WNS’ or the clients’ staff intervening only for the decreasing proportion of exceptions that need manual handling, typically for risk appetite, strategy, governance, or customer proposition reasons.

This has the potential to enable insurance companies to dramatically reduce their operating costs while simultaneously speeding up the claims process and increasing customer NPS.

For example, for motor claims, key elements in this strategy include:

- Establishing the liability for approximately 70% of claims at the first notice of loss (FNOL) stage, whether received via a portal, email, or voice, and then automating all downstream claims management in these cases

- Minimizing dispute timescales and costs by providing a self-serve option for the customer and third-parties to acknowledge an accident’s circumstances and share their versions of events in a timely fashion

- Automating the downstream processes if the policyholder is at fault, with third-party and subrogation interventions handled digitally

- Identifying and progressing fraud throughout its life cycle

- Handling third-party exposures via complex AI and ML models that assess unstructured data, complete valuations, and make offers.

Similar disruptive solutions to drive high levels of straight-through processing (STP)/one-touch claims are deployed for property (personal and commercial) and casualty products.

WNS is also looking to minimize the insurance supply chain cost. For example, in property insurance, this will involve using images and video calls to cash settle more property claims at one touchpoint, eliminating the necessity for visits by loss adjustors.

Using New Digital channels for More Timely and Effective Claim Notification

One of the pain points with commercial customers and fleet companies is the amount of time taken by end customers/drivers to report accidents. In these large corporate and municipal accounts, WNS has found the average notification time to be 33 days, which means losing the opportunity to intervene early and reduce the loss incurred.

Accordingly, WNS is piloting the use of new digital channels so that the driver of a vehicle can effortlessly report a claim in one touch and upload images, enabling WNS to receive details such as the third party’s registration number details within minutes of the incident. This has the potential to be particularly effective in the case of drivers whose lack of fluency in English may be inhibiting accident reporting.

Similarly, for property surge events, whenever there is a warning of a weather event, such as an Australian bushfire, WNS will identify the post/ZIP codes for the areas likely to be impacted and use this new digital approach to share a prepopulated claim form with customers a day or two in advance of the weather event.

This avoids the need for customers to phone potentially heavily loaded contact centers. Instead, they upload details of their losses, and WNS will respond within 30 minutes, providing details of coverage and dispatching services accordingly.

The new digital reporting channel pilots for motor and property claims are at the completion stage and have been successful from customer experience and indemnity spend standpoints.

Enhancing the Competitiveness of a Major P&C Insurer

WNS has applied its claims strategy components to assist a major global insurer in substantially improving its competitiveness through a fundamental reinvention of its claims processes.

The insurer had carried out a competitive benchmark with a major consultancy, which estimated its OPEX to be very high on a like-for-like basis, with contributory factors being the excessive fragmentation of the insurer’s onshore delivery across more than ten onshore sites and its comparatively low usage of automation and digitalization.

WNS initially addressed these issues by consolidating the insurer’s onshore sites to two centers. WNS also made operating model changes at one of these sites, introducing a collaborative team structure with client staff working alongside WNS staff on a co-branded production floor. A WNS site head runs the site, and in addition to agents, the WNS site personnel include process leads, continuous improvement consultants, and RPA and analytics specialists. The overall client operations delivered by WNS leverage a combination of onshore and offshore delivery.

Secondly, considerable automation and digital interventions have been introduced into the insurer’s motor, property, and casualty claims process. These digital interventions are funded through an innovation funding mechanism that ensures “continuous improvement is a way of life by design”. WNS initially provided resources to deliver productivity improvements, taking a share of the resulting savings, part of which is then used to self-fund further productivity initiatives to establish a continuous reengineering cycle.

Within the new digital model, each new claim reported via the digital channel is run through an automated liability solution, followed by an early settlements, total loss, recovery, and fraud identification model, all integrated via a simple process flow running seamlessly in the background. These interventions are followed by automation of the appropriate downstream activity, such as booking the vehicle for repairs, courtesy car allotment, etc. For suspected total losses, the claim details are run through a total loss predictive tool. These processes for both repairable and total loss motor claims are supported throughout by a self-serve E-FNOL app and fraud screening.
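The flow described above can be pictured as a simple routing function. The sketch below is purely illustrative and is not WNS' implementation: the `Claim` fields, the order of the checks, and the action strings are all assumptions, standing in for the outputs of the liability, fraud, and total loss models mentioned in the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Claim:
    claim_id: str
    liability_established: bool   # hypothetical output of the liability solution
    fraud_suspected: bool         # hypothetical output of the fraud model
    total_loss_suspected: bool    # hypothetical output of the total loss screen
    actions: List[str] = field(default_factory=list)


def route_claim(claim: Claim) -> Claim:
    """Append the next actions for a claim; exceptions go to a human handler."""
    if claim.fraud_suspected:
        claim.actions.append("refer to fraud handler")
    elif not claim.liability_established:
        claim.actions.append("refer to claims handler")
    elif claim.total_loss_suspected:
        claim.actions.append("run total loss predictive tool")
    else:
        # Repairable claim with liability established: automate downstream steps.
        claim.actions.extend(["book vehicle for repairs", "allot courtesy car"])
    return claim


repairable = route_claim(Claim("C-001", True, False, False))
suspected_total_loss = route_claim(Claim("C-002", True, False, True))
```

Only the claims that fall into the first two branches need manual handling, which is the "exceptions only" principle behind the touchless proposition.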

The liability predictive model used by WNS within this contract is based on partner insurtech technology. The system automates digital liability handling within motor claims, including:

- Prompting using image-based questions to establish the accident scenario, including testimonials from witnesses, using links to Google Maps and historical meteorological data to help pinpoint the accident's exact location and weather conditions at the time

- Sending allegations to the third party for self-serve input and responses

- Integration with case law to assist in decision-making

- Built-in AI to identify fraud and subrogation opportunities.

Overall, WNS uses various commercial models within the contract, ranging from FTE and transaction-based pricing to management consulting fees and gain share. Although personal lines claims within the contract are largely paid on transaction-based pricing, WNS is additionally committed to guaranteed percentage efficiency and indemnity improvements together with customer journey improvement.

The overall benefits achieved for this insurance company included:

- Improvement of 15-20 NPS base points

- Improvement of +30 employee NPS (ENPS) base points

- ~50% OPEX reduction

- ~2% motor indemnity reduction.

In 2016, Atos was awarded a 13-year life & pensions BPO contract by Aegon, taking over from the incumbent Serco and involving the transfer of ~300 people in a center in Lytham St Annes.

The services provided by Atos within this contract include managing end-to-end operations, from initial underwriting through to claims processing, for Aegon's individual protection offering, which comprises life assurance, critical illness, disability, and income protection products (and for which Aegon has 500k customers).

Alongside this deal, Aegon was separately evaluating the options for its closed book life & pensions activity and subsequently went to market to outsource its U.K. closed book business covering 1.4m customers across a range of group and individual policy types. The result was an additional 15-year deal with Atos, signed recently.

Three elements were important factors in the award of this new contract to Atos:

- Transfer of the existing Aegon personnel

- Ability to replatform the policies

- Implementation of customer-centric operational excellence.

Leveraging Edinburgh-Based Delivery to Offer Onshore L&P BPS Service

The transfer of the existing Aegon personnel and maintaining their presence in Edinburgh was of high importance to Aegon, the union, and the Scottish government. The circa 800 transferred personnel will continue to be housed at the existing site when transfer takes place in summer 2019, with Atos sub-leasing part of Aegon’s premises. This is possible for Atos since it is the company’s first life closed block contract and the company is looking to win additional deals in this space over the next few years (and will be going to market with an onshore rather than offshore-based proposition).

Partnering with Sapiens to Offer Platform-Based Service

While (unlike some other providers of L&P BPS services) Atos does not own its own life platform, the company does recognize that platform-based services are the future of closed book L&P BPS. Accordingly, the company has partnered with Sapiens, and the Sapiens insurance platform will be used as a common platform and integrated with Pega BPM across both Aegon’s protection and closed book policies.

Atos has undertaken to transfer all of the closed block policies from Aegon’s two existing insurance platforms to Sapiens, and these will be transferred over the 24-month period following service transfer. The new Sapiens-based system will be hosted and maintained by Atos.

Aiming for Customer-Centric Operational Excellence

The third consideration is a commitment by Atos to implement customer-centric operational excellence. While Aegon had already begun to measure customer NPS and assess ways of improving the service, Atos has now begun to make further use of the customer journey mapping techniques deployed in its Lytham center to identify customer effort and pain points. Use of the Sapiens platform will enable customer self-service and omni-channel service, while this and further automation will be used to facilitate the role of the agent and increase the number of policies handled per FTE.

The contract is priced using the fairly traditional pricing mechanisms of a transition and conversion charge (£130m over a 3-year period) followed by a price per policy, with Atos aiming for efficiency savings of up to £30m per annum across the policy book.

Atos perceives that this service will become the foundation for a growing closed block L&P BPS business, with Atos challenging the incumbents such as TCS Diligenta, Capita, and HCL. Edinburgh will become Atos’ center of excellence for closed book L&P BPS, with Atos looking to differentiate from existing service providers by offering an onshore-based alternative with the digital platform and infrastructure developed as part of the Aegon relationship, offered on a multi-client basis. Accordingly, Atos will be increasingly targeting life & pensions companies, both first-time outsourcers and those with contracts coming up for renewal, as it seeks to build its U.K. closed book L&P BPS business.


As well as conducting extensive research into RPA and AI, NelsonHall is also chairing international conferences on the subject. In July, we chaired SSON’s second RPA in Shared Services Summit in Chicago, and we will also be chairing SSON’s third RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December. In the build-up to the December event we thought we would share some of our insights into rolling out RPA. These topics were the subject of much discussion in Chicago earlier this year and are likely to be the subject of further in-depth discussion in Atlanta (Braselton).

This is the third and final blog in a series presenting key guidelines for organizations embarking on an RPA project, covering project preparation, implementation, support, and management. Here I take a look at the stages of deployment, from pilot development, through design & build, to production, maintenance, and support.

Piloting & deployment – it’s all about the business

When developing pilots, it’s important to recognize that the organization is addressing a business problem and not just applying a technology. Accordingly, organizations should consider how they can make a process better and achieve service delivery innovation, and not just service delivery automation, before they proceed. One framework that can be used in analyzing business processes is the ‘eliminate/simplify/standardize/automate’ approach.

While organizations will probably want to start with some simple and relatively modest RPA pilots to gain quick wins and acceptance of RPA within the organization (and we would recommend that they do so), it is important as the use of RPA matures to consider redesigning and standardizing processes to achieve maximum benefit. So begin with simple manual processes for quick wins, followed by more extensive mapping and reengineering of processes. Indeed, one approach often taken by organizations is to insert robotics and then use the metrics available from robotics to better understand how to reengineer processes downstream.

For early pilots, pick processes where the business unit is willing to take a ‘test & learn’ approach, and live with any need to refine the initial application of RPA. Some level of experimentation and calculated risk taking is OK – it helps the developers to improve their understanding of what can and cannot be achieved from the application of RPA. Also, quality increases over time, so in the medium term, organizations should increasingly consider batch automation rather than in-line automation, and think about tool suites and not just RPA.

Communication remains important throughout, and the organization should be extremely transparent about any pilots taking place. RPA does require a strong emphasis on, and appetite for, management of change. In terms of effectiveness of communication and clarifying the nature of RPA pilots and deployments, proof-of-concept videos generally work a lot better than the written or spoken word.

Bot testing is also important, and organizations have found that bot testing is different from waterfall UAT. Ideally, bots should be tested using a copy of the production environment.

Access to applications is potentially a major hurdle, with organizations needing to establish virtual employees as a new category of employee and give the appropriate virtual user ID access to all applications that require a user ID. The IT function must be extensively involved at this stage to agree access to applications and data. In particular, they may be concerned about the manner of storage of passwords. What’s more, IT personnel are likely to know about the vagaries of the IT landscape that are unknown to operations personnel!

Reporting, contingency & change management key to RPA production

At the production stage, it is important to implement an RPA reporting tool to:

- Monitor how the bots are performing

- Provide an executive dashboard with one version of the truth

- Ensure high license utilization.

There is also a need for contingency planning to cover situations where something goes wrong and work is not allocated to bots. Contingency plans may include co-locating a bot support person or team with operations personnel.

The organization also needs to decide which part of the organization will be responsible for bot scheduling. This can either be overseen by the IT department or, more likely, the operations team can take responsibility for scheduling both personnel and bots. Overall bot monitoring, on the other hand, will probably be carried out centrally.

It remains common practice, though not universal, for RPA software vendors to charge on the basis of the number of bot licenses. Accordingly, since an individual bot license can be used in support of any of the processes automated by the organization, organizations may wish to centralize an element of their bot scheduling to optimize bot license utilization.
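The license utilization argument can be made concrete with a little arithmetic. The sketch below is a simplified illustration only: the function names, the 24-hour availability per license, and the example workloads are assumptions, and it ignores timing constraints such as several processes needing to run at the same moment.

```python
import math
from typing import Sequence


def license_utilization(busy_hours: Sequence[float],
                        hours_per_license: float = 24.0) -> float:
    """Fraction of licensed bot-hours actually used, one license per entry."""
    if not busy_hours:
        raise ValueError("at least one license is required")
    return sum(busy_hours) / (len(busy_hours) * hours_per_license)


def licenses_needed_if_pooled(busy_hours: Sequence[float],
                              hours_per_license: float = 24.0) -> int:
    """Minimum licenses if all work could be scheduled onto shared bots."""
    return max(1, math.ceil(sum(busy_hours) / hours_per_license))


# Hypothetical daily workloads for three teams, each holding its own license.
siloed = [6.0, 9.0, 3.0]
siloed_utilization = license_utilization(siloed)    # 18 / 72
pooled_licenses = licenses_needed_if_pooled(siloed)  # ceil(18 / 24)
pooled_utilization = sum(siloed) / (pooled_licenses * 24.0)
```

In this made-up case, three separately scheduled licenses run at 25% utilization, whereas the same workload scheduled centrally would fit on a single license at 75%.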

At the production stage, liaison with application owners is very important to proactively identify changes in functionality that may impact bot operation, so that these can be addressed in advance. Maintenance is often centralized as part of the automation CoE.

Find out more at the SSON RPA in Shared Services Summit, 1st to 2nd December

NelsonHall will be chairing the third SSON RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December, and will share further insights into RPA, including hand-outs of our RPA Operating Model Guidelines. You can register for the summit here.

Also, if you would like to find out more about NelsonHall’s extensive program of RPA & AI research, and get involved, please contact Guy Saunders.

Plus, buy-side organizations can get involved with NelsonHall’s Buyer Intelligence Group (BIG), a buy-side only community which runs regular webinars on RPA, with your buy-side peers sharing their RPA experiences. To find out more, contact Matthaus Davies.

This is the final blog in a three-part series. See also:

Part 1: How to Lay the Foundations for a Successful RPA Project



This is the second in a series of blogs presenting key guidelines for organizations embarking on an RPA project, covering project preparation, implementation, support, and management. Here I take a look at how to assess and prioritize RPA opportunities prior to project deployment.

Prioritize opportunities for quick wins

An enterprise level governance committee should be involved in the assessment and prioritization of RPA opportunities, and this committee needs to establish a formal framework for project/opportunity selection. For example, a simple but effective framework is to evaluate opportunities based on their:

- Potential business impact, including RoI and FTE savings

- Level of difficulty (preferably low)

- Sponsorship level (preferably high).

The business units should be involved in the generation of ideas for the application of RPA, and these ideas can be compiled in a collaboration system such as SharePoint prior to their review by global process owners and subsequent evaluation by the assessment committee. The aim is to select projects that have a high business impact and high sponsorship level but are relatively easy to implement. As is usual when undertaking new initiatives or using new technologies, aim to get some quick wins and start at the easy end of the project spectrum.
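As a rough illustration of how such a framework might be applied, the sketch below scores each candidate on the three criteria and ranks them. The 1 to 5 scales, the equal weighting, and the example opportunities are assumptions made for the example, not part of any prescribed method.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Opportunity:
    name: str
    impact: int       # 1 (low) to 5 (high): business impact, e.g. RoI and FTE savings
    difficulty: int   # 1 (easy) to 5 (hard) to implement
    sponsorship: int  # 1 (weak) to 5 (strong) sponsorship level


def priority_score(opp: Opportunity) -> int:
    """Higher is better: reward impact and sponsorship, penalize difficulty."""
    return opp.impact + opp.sponsorship + (6 - opp.difficulty)


def rank(opportunities: List[Opportunity]) -> List[Opportunity]:
    """Return opportunities ordered from highest to lowest priority."""
    return sorted(opportunities, key=priority_score, reverse=True)


# Hypothetical candidates submitted by business units.
candidates = [
    Opportunity("invoice matching", impact=4, difficulty=2, sponsorship=5),
    Opportunity("contract review", impact=5, difficulty=5, sponsorship=3),
    Opportunity("address changes", impact=2, difficulty=1, sponsorship=3),
]
ranked = rank(candidates)
```

Here the high-impact but difficult "contract review" candidate ranks last, reflecting the advice to start at the easy end of the project spectrum. A committee could equally weight the criteria differently.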

However, organizations also recognize that even those ideas and suggestions that have been rejected for RPA are useful in identifying process pain points, and one suggestion is to pass these ideas to the wider business improvement or reengineering group to investigate alternative approaches to process improvement.

Target stable processes

Other considerations that need to be taken into account include the level of stability of processes and their underlying applications. Clearly, basic RPA does not readily adapt to significant process change, and so, to avoid excessive levels of maintenance, organizations should only choose relatively stable processes based on a stable application infrastructure. Processes that are subject to high levels of change are not appropriate candidates for the application of RPA.

Equally, it is important that the RPA implementers have permission to access the required applications from the application owners, who can initially have major concerns about security, and that the RPA implementers understand any peculiarities of the applications and know about any upgrades or modifications planned.

The importance of IT involvement

It is important that the IT organization is involved, as their knowledge of the application operating infrastructure and any forthcoming changes to applications and infrastructure need to be taken into account at this stage. In particular, it is important to involve identity and access management teams in assessments.

Also, the IT department may well take the lead in establishing RPA security and infrastructure operations. Other key decisions that require strong involvement of the IT organization include:

- Identity security

- Ownership of bots

- Ticketing & support

- Selection of RPA reporting tool.

Find out more at the SSON RPA in Shared Services Summit, 1st to 2nd December

NelsonHall will be chairing the third SSON RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December, and will share further insights into RPA, including hand-outs of our RPA Operating Model Guidelines. You can register for the summit here.

Also, if you would like to find out more about NelsonHall’s extensive program of RPA & AI research, and get involved, please contact Guy Saunders.

Plus, buy-side organizations can get involved with NelsonHall’s Buyer Intelligence Group (BIG), a buy-side only community which runs regular webinars on sourcing topics, including the impact of RPA. The next RPA webinar will be held later this month: to find out more, contact Guy Saunders.

In the third blog in the series, I will look at deploying an RPA project, from developing pilots, through design & build, to production, maintenance, and support.



This is the first in a series of blogs presenting key guidelines for organizations embarking on RPA, covering establishing the RPA framework, RPA implementation, support, and management. First up, I take a look at how to prepare for an RPA initiative, including establishing the plans and frameworks needed to lay the foundations for a successful project.

Getting started – communication is key

Essential action items for organizations prior to embarking on their first RPA project are:

- Preparing a communication plan

- Establishing a governance framework

- Establishing an RPA center of excellence

- Establishing a framework for allocation of IDs to bots.

Communication is key to ensuring that use of RPA is accepted by both executives and staff alike, with stakeholder management critical. At the enterprise level, the RPA/automation steering committee may involve:

- COOs of the businesses

- Enterprise CIO.

Start with awareness training to get support from departments and C-level executives. Senior leader support is key to adoption. Videos demonstrating RPA are potentially much more effective than written papers at this stage. Important considerations to address with executives include:

- How much control am I going to lose?

- How will use of RPA impact my staff?

- How/how much will my department be charged?

When communicating to staff, remember to:

- Differentiate between value-added and non value-added activity

- Communicate the intention to use RPA as a development opportunity for personnel. Stress that RPA will be used to facilitate growth, to do more with the same number of people, and give people developmental opportunities

- Use the same group of people to prepare all communications, to ensure consistency of messaging.

Establish a central governance process

It is important to establish a strong central governance process to ensure standardization across the enterprise, and to ensure that the enterprise is prioritizing the right opportunities. It is also important that IT is informed of, and represented within, the governance process.

An example of a robotics and automation governance framework established by one organization was to form:

- An enterprise robotics council, responsible for the scope and direction of the program, together with setting targets for efficiency and outcomes

- A business unit governance council, responsible for prioritizing RPA projects across departments and business units

- An RPA technical council, responsible for RPA design standards, best practice guidelines, and principles.

Avoid RPA silos – create a center of excellence

RPA is a key strategic enabler, so use of RPA needs to be embedded in the organization rather than siloed. Accordingly, the organization should consider establishing an RPA center of excellence, encompassing:

- A centralized RPA & tool technology evaluation group. It is important not to assume that a single RPA tool will be suitable for all purposes and also to recognize that ultimately a wider toolset will be required, encompassing not only RPA technology but also technologies in areas such as OCR, NLP, machine learning, etc.

- A best practice function for establishing standards, such as naming standards, to be applied in RPA across processes and business units

- An automation lead for each tower, to manage the RPA project pipeline and priorities for that tower

- IT liaison personnel.

Establish a bot ID framework

While establishing a framework for allocation of IDs to bots may seem trivial, it has proven not to be so for many organizations where, for example, including ‘virtual workers’ in the HR system has proved insurmountable. In some instances, organizations have resorted to basing bot IDs on the IDs of the bot developer as a short-term fix, but this approach is far from ideal in the long-term.

Organizations should also make centralized decisions about bot license procurement, and here the IT department which has experience in software selection and purchasing should be involved. In particular, the IT department may be able to play a substantial role in RPA software procurement/negotiation.

Find out more at the SSON RPA in Shared Services Summit, 1st to 2nd December

NelsonHall will be chairing the third SSON RPA in Shared Services Summit in Braselton, Georgia on 1st to 2nd December, and will share further insights into RPA, including hand-outs of our RPA Operating Model Guidelines. You can register for the summit here.

Also, if you would like to find out more about NelsonHall’s extensive program of RPA & AI research, and get involved, please contact Guy Saunders.

Plus, buy-side organizations can get involved with NelsonHall’s Buyer Intelligence Group (BIG), a buy-side only community which runs regular webinars on sourcing topics, including the impact of RPA. The next RPA webinar will be held in November: to find out more, contact Matthaus Davies.

In the second blog in this series, I will look at RPA need assessment and opportunity identification prior to project deployment.


In its early days, BPO was a linear and lengthy process, with knowledge transfer followed by labor arbitrage, followed by process improvement and standardization, followed by application of tools and automation. This process typically took years, often the full lifetime of the initial contract. More recently, BPO has sped up, with standard global process models, supported by elements of automation, being implemented in conjunction with the initial transition and deployment of global delivery. This “time to value” is now being accelerated further to enable a full range of transformation to be applied in months rather than years. Overall, BPO is moving from a slow-moving mechanism for transformation to High Velocity BPO. Why take years when months will do?

Some of the key characteristics of High Velocity BPO are shown in the chart below:

| Attribute | Traditional BPO | High-Velocity BPO |
|---|---|---|
| Objective | Help the purchaser fix their processes | Help the purchaser contribute to wider business goals |
| Measure of success | Process excellence | Business success, faster |
| Importance of cost reduction | High | Greater, faster |
| Geographic coverage | Key countries | Global, now |
| Process enablers & technologies | High dependence on third-parties | Own software components supercharged with RPA |
| Process roadmaps | On paper | Built into the components |
| Compliance | Reactive compliance | Predictive GRC management |
| Analytics | Reactive process improvement | Predictive & driving the business |
| Digital | A front-office “nice-to-have” | Multi-channel and sensors fundamental |
| Governance | Process-dependent | GBS, end-to-end KPIs |

As a start point, High Velocity BPO no longer focuses on process excellence targeted at a narrow process scope. Its ambitions are much greater, namely to help the client achieve business success faster, and to help the purchaser contribute not just to their own department but to the wider business goals of the organization, driven by monitoring against end-to-end KPIs, increasingly within a GBS operating framework.

However, this doesn’t mean that the need for cost reduction has gone away. It hasn’t. In fact, the need for cost reduction is now greater and faster than ever. And in terms of delivery frameworks, the mish-mash of third-party tools and enablers is increasingly likely to be replaced by an integrated combination of proprietary software components, probably built on Open Source software, with built-in process roadmaps, real-time reporting and analytics, and supercharged with RPA.

Furthermore, the role of analytics will no longer be reactive process improvement but predictive and driving real business actions, while compliance will also become even more important.

But let’s get back to the disruptive forces impacting BPO. What forms will the resulting disruption take in both the short-term and the long-term?

| Disruption | Short-term impact | Long-term impact |
|---|---|---|
| Robotics | Gives buyers 35% cost reduction fast | No significant impact on process models or technology |
| Analytics | Already drives process enhancement | Becomes much more instrumental in driving business decisions; potentially makes BPO vendors more strategic |
| Labor arbitrage on labor arbitrage | Ongoing reductions in service costs and employee attrition | “Domestic BPO markets” within emerging economies become major growth opportunity |
| Digital | Improved service at reduced cost | Big opportunity to combine voice, process, technology, & analytics in a high-value end-to-end service |
| BPO “platform components” | Improved process coherence | BPaaS service delivery without the third-party SaaS |
| The Internet of Things | Slow build into areas like maintenance | Huge potential to expand the BPO market in areas such as healthcare |
| GBS | Help organizations deploy GBS | Improved end-to-end management and increased opportunity; reduced friction of service transfer |

Well, robotics is here now, moving at speed, and delivering a short-term impact of around 35% cost reduction where applied. It is also fundamentally changing the underlying commercial models away from FTE-based pricing. However, robotics does not involve change in process models or in the underlying systems and technology, so its impact is largely short-term and it is essentially a cost play.

Digital and analytics are much more strategic and longer lasting in their impact, enabling vendors to become more strategic partners by delivering higher-value services and by driving next best actions and operational business decisions with very high levels of revenue impact.

BPO services around the Internet of Things will be a relatively slow burn in comparison, but they have the potential to multiply the market for industry-specific BPO services many times over and to enable BPO to move into critical services with real life-or-death implications.

So what is the overall impact of these disruptive forces on BPO? Well, while two of the seven listed above have the potential to reduce BPO revenues in the short term, the other five have the potential to make BPO more strategic in the eyes of buyers and to significantly increase the size and scope of the global BPO market.

Part 1 The Robots are Coming - Is this the end of BPO?

Part 2 Analytics is becoming all-pervasive and increasingly predictive

Part 3 Labor arbitrage is dead - long live labor arbitrage

Part 4 Digital renews opportunities in customer management services

Part 6 The Internet of Things: Is this a New Beginning for Industry-Specific BPO?

| Sector | Examples |
| --- | --- |
| Telemedicine | Monitoring heart operation patients post-op |
| Insurance | Monitoring driver behavior for policy charging |
| Energy & utilities | Identifying pipeline leakages |
| Telecoms | Home monitoring/management - the “next big thing” for the telecoms sector |
| Plant & equipment | Predictive maintenance |
| Manufacturing | Everything-as-a-service |

So, for example, sensors are already being used to monitor U.S. heart operation patients post-op from India to detect warning signs in their pulses, while a number of insurance companies are using telematics to monitor driver behavior in support of policy charging. Elsewhere, sensors are increasingly being linked to analytics to provide predictive maintenance for machinery ranging from aircraft to mining equipment, and home monitoring seems likely to be the next “big thing” for the telecoms sector. And in the manufacturing sector, there is an increasing trend to sell “everything as a service” as an alternative to selling products in their raw form.

This is a major opportunity that has the potential to massively increase the market for industry-specific or middle-office BPO well beyond its traditional, more administrative role.

However, it has a number of implications for BPO vendors: the buyers for these sensor-dependent services are often not the traditional BPO buyer, the services are often real-time in nature with a strong requirement for 24x7 delivery, and strong analytics capability is likely to be a prerequisite. In addition, these services arising out of the Internet of Things potentially take the meaning of risk/reward to a whole new level, as many of them have real life-or-death implications. Some work for the lawyers on both sides here.

Coming next: High-Velocity BPO – What the client always wanted!

Previous blogs in this series:

Part 1 The Robots are Coming - Is this the end of BPO?

Part 2 Analytics is becoming all-pervasive and increasingly predictive

Part 3 Labor arbitrage is dead - long live labor arbitrage

Part 4 Digital renews opportunities in customer management services

HCL’s original contract with Chesnara dates back to 2005. However, HCL also had a separate L&P BPO contract with Save & Prosper, which Chesnara purchased from JPMorgan Asset Management in December 2010. More recently, Chesnara also purchased Direct Line Life, whose L&P operations are currently in the process of being transitioned to HCL. This latest acquisition by Chesnara adds a further 150,000 policyholders to the portfolio currently administered by HCL.

Accordingly, these three historically separate contracts are now being consolidated by Chesnara and HCL into a single contract to provide a consistent suite of services and SLAs across policy administration services, fund accounting, investment administration, and certain actuarial valuation and reporting services.

At the service delivery level, this involves handling all policies within a similar operating model, with workflow for all policies handled through HCL’s OpEX (Operational Excellence) work management and quality assurance tools. In addition, policies are being migrated, where feasible, onto HCL’s ALPS insurance platform. For example, the policies transitioned from Direct Line Life are currently being migrated onto ALPS. However, as usual, it is not feasible to handle all policies on a single platform, and a small number of legacy systems will remain in place across the various books, handling approx. 50,000 policies.

In addition, within the new contract, HCL is working to ensure that service levels and service metrics are consistent across all Chesnara books of business, and HCL will be enhancing the SLAs and service metrics in place over the next 18 months to ensure consistency with regulatory conduct risk expectations. This involves developing an increased focus on customer experience, e.g. introducing proactive calling where there may have been a customer service issue, before the issue turns into a formal complaint. The benefits of this type of approach include operations cost reduction, through a lower level of formal complaints handling, as well as improved customer experience.

The pricing mechanisms used across the various books are also being standardized and moved from pure per-policy charging to a combination of per-policy and activity-based pricing. For example, the pricing of core policy administration services will still tend to be per-policy driven but will be rationalized by policy type across books. However, the costs of many accounting-based activities are not sensitive to the number of policies managed, and so these will be priced differently to achieve better alignment between service pricing and the underlying cost drivers.

Elsewhere in the industry, changes in the fiscal treatment of retirement income are forcing companies to re-evaluate their retirement product strategies and their approaches to the administration of annuity and retirement books. The new legislation coming into place in the U.K. means that companies with small annuity books may no longer be adding significantly to these and so may need to treat them differently in future. This potentially creates opportunities both for consolidators and for companies such as HCL that support their operations.
