NelsonHall recently visited Accenture at its Cyber Fusion Center in Washington D.C. to discuss innovations in its cyber resiliency offerings and the recent launch of its new digital identity tool, Zoran.

Failings of existing role-based access (RBA)

Typical identity and access management (IAM) systems control users’ access to data based on their role, i.e. position, competency, authority and responsibility within the enterprise. It’s a standard best practice to keep access to systems/information at a minimum, segmenting access to prevent one user, even a C-level user, from having carte blanche to traverse the organization's operations. Not only does this reduce the risk from a single user being compromised, it also reduces the potential insider threat posed by that user.

While these IAM solutions can match user provisioning requests to a directory of employee job titles to automate a lot of these processes, there can be a breakdown in the setup of these RBA IAM tools, with roles defined too widely as a catch-all, which in turn reduces the segmentation of the access. For example, if a member of your team works in the R&D department developing widget A, should they receive access to data related to widget B?

Likewise, another issue with these solutions is privilege creep, which is where an employee who has had several roles or responsibilities has retained previous permission sets when they have moved role. These and many more issues result in RBA systems being ineffective, as they are implemented as a static picture of the organization’s employees at a single point in time. In addition, recertification is a time-consuming and wasteful exercise.
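To make privilege creep concrete, here is a minimal sketch of how retained permissions might be flagged. It is not any vendor's implementation: the role names, entitlement names, and the `ROLE_ENTITLEMENTS` mapping are all hypothetical, and a real IAM system would pull this data from its directory.

```python
# Hypothetical role-to-entitlement mapping; names are illustrative only.
ROLE_ENTITLEMENTS = {
    "rd_widget_a": {"widget_a_designs", "rd_wiki"},
    "rd_widget_b": {"widget_b_designs", "rd_wiki"},
}


def find_privilege_creep(current_role, granted):
    """Return entitlements the user holds that the current role does not require."""
    expected = ROLE_ENTITLEMENTS.get(current_role, set())
    return set(granted) - expected


# An engineer who moved from widget B to widget A but kept the old permissions.
granted = {"widget_a_designs", "widget_b_designs", "rd_wiki"}
excess = find_privilege_creep("rd_widget_a", granted)
print(sorted(excess))
```

A check like this only works if the role definitions themselves are narrow enough, which is the first failing described above.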

Enter Zoran

Accenture developed Zoran in The Dock in Dublin, a multidisciplinary research and incubation hub. It brought in five companies to discuss the problem of identity management, two of which stayed on for the full development, handing over data to Accenture to be used in the development of Zoran.

Zoran analyses user access and privileges across the organization and performs data analytics to look for patterns in their access, entitlements, and assignments. The patterns found by this patent-pending analytics algorithm are used to generate confidence scores that indicate whether users should have those privileges. These confidence scores can then be used to perform automatic operations such as recertification, for example, if a user’s details change after a specified period of time.
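Accenture has not disclosed how the patent-pending algorithm works, so the following is only an illustrative sketch of one simple way a confidence score could be derived: the share of a user's peers who hold the same entitlement. The peer grouping, the scoring rule, the function name, and the 0.5 threshold are all assumptions for illustration.

```python
from collections import defaultdict


def confidence_scores(users):
    """Score each (user, entitlement) pair by the share of peers holding it.

    `users` maps a user id to a dict with "group" (peer group key) and
    "entitlements" (a set). Both the grouping and the scoring rule are
    assumptions for illustration, not Zoran's actual algorithm.
    """
    members = defaultdict(list)
    for uid, info in users.items():
        members[info["group"]].append(uid)

    scores = {}
    for uid, info in users.items():
        peers = members[info["group"]]
        for ent in info["entitlements"]:
            holders = sum(1 for p in peers if ent in users[p]["entitlements"])
            scores[(uid, ent)] = holders / len(peers)
    return scores


users = {
    "ann": {"group": "rd", "entitlements": {"rd_wiki", "widget_a"}},
    "bob": {"group": "rd", "entitlements": {"rd_wiki", "widget_a"}},
    "cat": {"group": "rd", "entitlements": {"rd_wiki", "payroll"}},
    "dan": {"group": "rd", "entitlements": {"rd_wiki", "widget_a"}},
}
scores = confidence_scores(users)
THRESHOLD = 0.5  # hypothetical cut-off below which an analyst reviews the access
needs_review = sorted(pair for pair, s in scores.items() if s < THRESHOLD)
print(needs_review)
```

In this toy data set, only the R&D user holding a payroll entitlement falls below the threshold; everything else would be a candidate for automatic recertification.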

Zoran is not using machine learning to continuously improve confidence scores – i.e. if, for a group of users, an entitlement is always recertified, the confidence scoring algorithm is not updated to increase the confidence score. Accenture’s reason for this is that it runs the risk of being self-perpetuating, with digital identity analysts being more likely to recertify users because the confidence score has risen.

Currently, Zoran does not store which security analyst approved which certification for which user, although Accenture is in the process of adding this feature.

Will Zoran be the silver bullet for IAM?

IAM tools have been relatively slow to develop from simple automation to an ML/AI state, and this is certainly a step in the right direction. However, there will have to be some reskilling and change management around the recertification process.

While Zoran aims to reduce the uncertainty in recertifying permissions for a user, there is still a very limited risk of ‘false positive’ confidence scores being given which could automatically recertify a user, or that a security analyst could certify a user in something akin to a box-ticking exercise due to trust in the confidence score provided.

Accenture also needs to improve on integrating Zoran with its other technologies; for example, its work with Ripjar’s Labyrinth security data analytics platform could yield some interesting results.

NelsonHall believes tools such as Zoran, combined with more traditional IAM solutions, are likely to be the current trajectory of the IAM market, with ML further segmenting groups/roles and providing increased trust in recertification processes.


Mike Smart presents summary highlights from IBM and The Weather Company at Red Bull Racing.


NelsonHall recently attended Atos’ Technology Day in Paris, where we heard updates on its digital technology initiatives, including key partnerships with Siemens and Google, and new AI product developments.

Extending partnership with Siemens

Atos gave an update on its ongoing investment with Siemens, a seven-year-old partnership that was recently extended to 2020 with the addition of an extra €100m in funding (pushing the total amount of funding to €330m). The partnership focuses on data analytics and AI, IoT and connectivity services using the MindSphere platform, cybersecurity, and digital services.

Also, we were encouraged by what appeared to be a renewed interest in integrating the platform by Siemens, with Siemens now partnering with Infosys to develop applications and services for MindSphere. Developing the MindSphere platform and growing the business beyond Siemens will be a critical factor for growth in Atos’ IoT business.

Google partnership update

Atos presented on its partnership with Google, announced in April 2018, framing the partnership around AI. Within the partnership scope, Atos’ initiatives are to:

- Develop its Canopy Orchestrated Hybrid Cloud to integrate Google Cloud platform (which will become Atos’ preferred cloud computing choice)

- Create a ML practice using Google Cloud’s ML APIs, and develop vertical-specific algorithms

- Use G Suite as part of its Digital Workplace offerings.

The first Atos/Google co-lab has now been opened, with two more set to follow: in Boulogne, Paris (opening in fall 2018) and Dallas, TX. Each of these labs has 50 specialists: 25 from Atos and 25 from Google.

AI product developments

Atos announced the release of its Codex AI product suite. The suite aims to act as a workbench for client development on AI and the management of AI applications. The advantage of Atos’ suite is its ability to push and manage the AI algorithms across a number of architectures in an infrastructure-agnostic manner, which we found particularly interesting with regard to edge computing.

Edge computing has a multitude of applications for IoT services. Even now the amount of data sent by IoT devices can strain the ability to communicate data back for analytic model development, and in some cases data just cannot be communicated in a timely manner. Edge computing aims to reduce the amount of data that needs to be sent ‘home’ by providing some computing power for running algorithms at the device end. Linking back to the Codex AI suite, a key selling point of the edge computing box will be the ability to develop models on HPC stacks, then use the suite to push the resulting algorithms out to run at the edge.

Other future-looking announcements included the release of an updated version of Atos’ Quantum Learning Machine (QLM) and a prototype of hardware for an edge computing box.

Comparing these announcements to 2016’s ‘2019’ ambition plan, it is clear that Atos has the potential to overperform on Codex expectations. The Codex business, which encompasses business-driven analytics, IoT services, and solutions including MindSphere and now the Codex AI suite, had a revenue target of €1bn by 2019, up from ~€500m in 2016. Codex experienced very significant growth in 2017, delivering revenues of ~€760m, in part due to its acquisition of zData in the U.S. We expect to see further acquisitions in the Codex business, as projects from MindSphere and the releases announced at this event ramp up.
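As a rough check on the Codex figures quoted above, the short calculation below compares the growth rate implied by the original plan with what is still needed after 2017. It uses only the approximate revenues given in this post, and the growth includes acquired revenue, so treat it as back-of-the-envelope arithmetic rather than a forecast.

```python
def cagr(start, end, years):
    """Compound annual growth rate between two revenue figures."""
    return (end / start) ** (1 / years) - 1


# Approximate Codex revenues in EUR millions, as quoted in the text.
rev_2016, rev_2017, target_2019 = 500.0, 760.0, 1000.0

growth_2017 = rev_2017 / rev_2016 - 1
planned = cagr(rev_2016, target_2019, 3)
remaining = cagr(rev_2017, target_2019, 2)

print(f"2017 growth: {growth_2017:.1%}")
print(f"CAGR implied by the 2016 plan: {planned:.1%}")
print(f"CAGR still needed from 2017: {remaining:.1%}")
```

The 2017 growth is roughly double the annual rate the plan required, which leaves a much lower hurdle for 2018 and 2019.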

In sum, the strengthening of key partnerships, AI product developments, and the slow build of the Quantum business establish a foundation for the long-term growth of Atos’ digital and data-orientated business.


Mike Smart with a quick report from DXC's 'Delivering on Digital 2018' client event.


Mike Smart reports directly from IBM’s European Analyst & Advisory Exchange 2017 with some quick take-aways regarding IBM’s transition from systems integrator to services integrator and its business resiliency services.


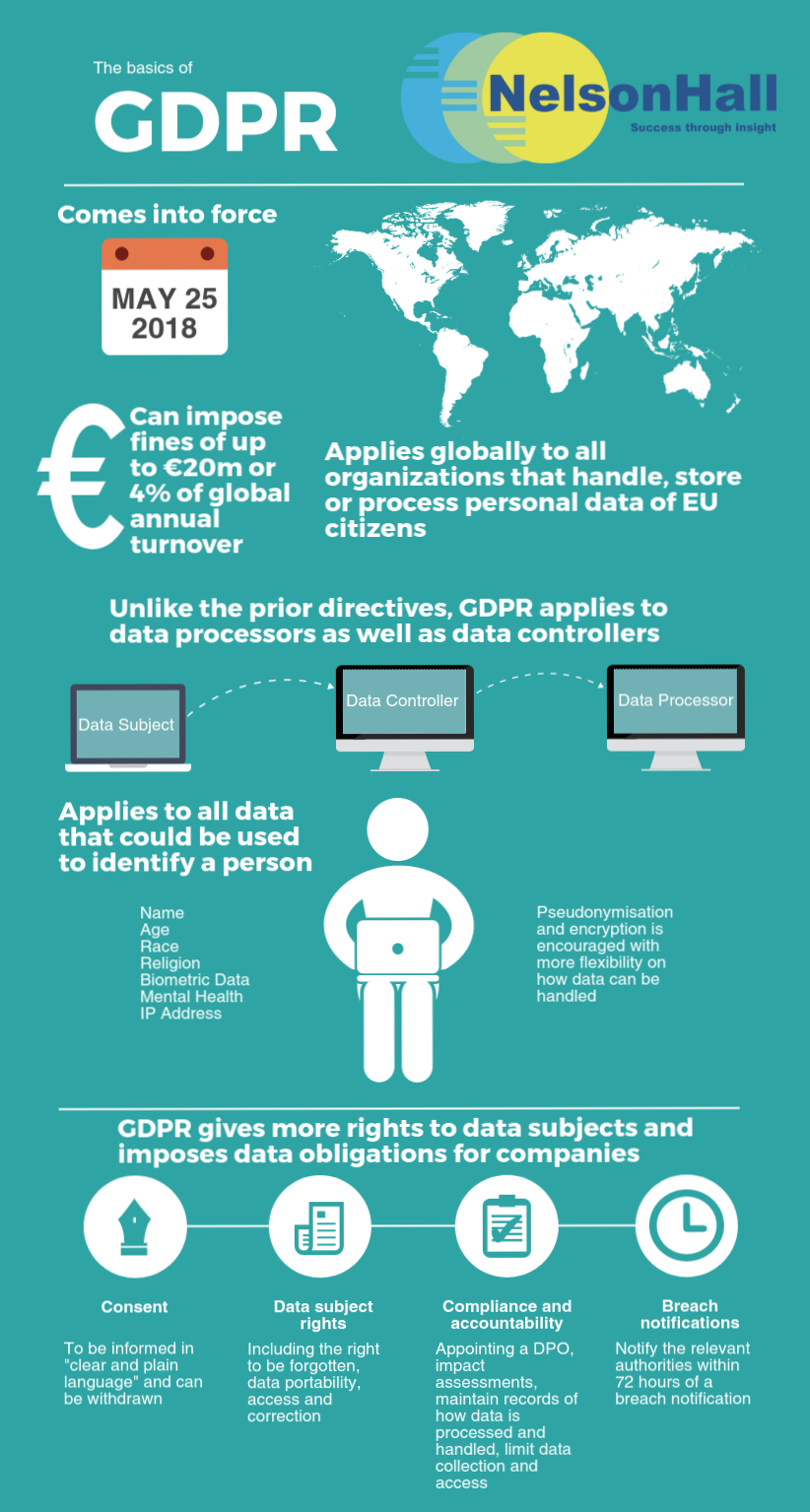

In this, the second of two articles on GDPR, I look at how IT services vendors can help companies meet GDPR compliance in several areas. You can read the first article, ‘The Impact & Benefits of GDPR for Organizations’, here.

Application services

Application services can help organizations in ensuring that new and legacy applications meet the GDPR articles pertaining to applications: namely Article 25, which aims to make sure that applications have ‘data protection by design and default’.

In short, application providers should be providing:

- Security by design in the early stages of the SDLC

- Gap analyses on what personal data is required, how it is collected, processed and handled

- Ensuring a level of security appropriate to the risk with:

  - Encryption and/or pseudonymisation of data

  - The ability to restore personal data in case of a breach or technical issue

  - Regular security testing of practices and solutions to ensure confidentiality, integrity, availability, and resilience

- Data minimisation efforts, using the prior gap analysis so that only the required data is collected, stored, and accessed (for example, does the organization really need to know users’ age to provide a non-age restricted service?)

- Ensuring that the principle of least privilege is used for internal users so that they may only access required data (for example, in a telecoms provider, a customer service agent providing technical assistance need not know clients’ full payment details and history).
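Two of the measures listed above, data minimisation and pseudonymisation, can be sketched in a few lines. This is a simplified illustration, not a compliance recipe: GDPR does not mandate a particular technique, the keyed hash (HMAC-SHA256) is just one common approach, and the key, field names, and sample record are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would be held separately from the data.
SECRET_KEY = b"example-key-stored-elsewhere"
# Assumed outcome of the gap analysis: the only fields the service needs.
REQUIRED_FIELDS = {"customer_id", "plan"}


def pseudonymise(value: str) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimise(record: dict) -> dict:
    """Keep only required fields and pseudonymise the customer identifier."""
    kept = {k: v for k, v in record.items() if k in REQUIRED_FIELDS}
    kept["customer_id"] = pseudonymise(kept["customer_id"])
    return kept


record = {"customer_id": "C-1001", "plan": "fibre", "age": 42, "card_number": "4111..."}
print(minimise(record))
```

Because the hash is keyed, the same customer always maps to the same pseudonym, so records can still be joined, while anyone without the key cannot easily recover the original identifier.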

The difficulty arises with articles of the GDPR that require organizations to be able to provide data portability and the right to be forgotten. For data portability (i.e. the right of the user to take their data from one vendor to another), the regulation encourages data controllers to develop formats for the data to enable portability. However, in legacy systems, this data may be structured in a way that makes portability difficult.

Also, GDPR’s ‘right to be forgotten’ allows users to have their data deleted without a trace, but this has the potential to disrupt how organizations back up data, due to technological limitations and existing regulations. There are concerns that the right to be forgotten is not achievable while meeting existing regulations that require organizations to hold data for an extended period of time. One example is MiFID II, under which financial institutions must record all conversations related to a financial deal for five years. GDPR’s right to be forgotten does not apply when other legal justifications are in place, and the regulation is superseded by the other legal requirement. Organizations in this position will need to consider carefully which data is required and which data can be safely erased.

Organizations that use data backup services also have to ensure that their backups meet GDPR requirements. Data that is restored from backups must also be free of data that the user has requested to be erased. However, in some implementations, it is technically impossible to delete individual pieces of data from backups without destroying the entire backup.

Cybersecurity

Cybersecurity vendors can help organizations meet GDPR articles that impose more stringent data security. Most of the cybersecurity services providers’ frameworks divide the act of becoming compliant into five standard operations:

- Assessment – the vendor conducts privacy, governance, process, and data security assessments and gap analyses to identify personal data and how it is processed within the organization, and constructs roadmaps to make the organization GDPR compliant

- Design – the vendor designs an implementation plan of the standards, controls, policies, and architecture to support the roadmap

- Transformation – the embedding of tools, technologies, and processes

- Operation – the execution of business processes and the management of data subject rights

- Conform – monitoring and auditing the organization's compliance to GDPR.

Cybersecurity vendors’ incident response (IR) services will be well placed to handle cybersecurity breaches that require notification to the in-country supervisory authority. The change to incident response protocols once GDPR is enforced is the requirement to notify the authority within 72 hours. Currently, typical IR SLAs can provide off-site services in one hour, and onsite support within ~24 hours. In situations where no existing agreement is in place, remediation vendors are less able to commit to the 72-hour deadline and less able to guide their clients in contacting authorities. As GDPR comes into force, we can expect the number of organizations choosing IR services retainers to grow.
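To show how the 72-hour window interacts with typical IR SLAs, here is a small sketch that works out the notification deadline and the time left once onsite support arrives. The timestamps are hypothetical, and the clock is assumed to start when the organization becomes aware of the breach.

```python
from datetime import datetime, timedelta, timezone

NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(aware_at: datetime) -> datetime:
    """Latest time to notify the supervisory authority."""
    return aware_at + NOTIFICATION_WINDOW


def hours_remaining(aware_at: datetime, now: datetime) -> float:
    """Hours left before the notification deadline (negative if missed)."""
    return (notification_deadline(aware_at) - now) / timedelta(hours=1)


# Hypothetical incident: breach discovered at 09:00 UTC, onsite team ~24 hours later.
aware_at = datetime(2018, 6, 1, 9, 0, tzinfo=timezone.utc)
onsite_at = aware_at + timedelta(hours=24)

print(notification_deadline(aware_at).isoformat())
print(hours_remaining(aware_at, onsite_at))
```

With a retainer in place, an onsite team arriving after ~24 hours still has two days to help prepare the notification; without one, contract negotiation eats into that time.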

Other vendor initiatives

An organization need not choose a single vendor to complete all these operations. Indeed, in a number of cases, vendors are being approached after the organization has conducted assessments of their current level of compliance independently or with the help of another vendor, and managing GDPR tools and auditing the compliance is expected to be rolled into existing Managed Security Services GRC operations.

Other service providers are working to ensure that their services are GDPR compliant. Initiatives to become compliant include:

- Cloud services providers that were previously exempt from the 1995 directive are now regulated and have been working to meet the May 2018 GDPR deadline. As most of the GDPR requirements on cloud providers are covered by ISO 27001, meeting 27001 standards will certainly help the provider demonstrate that it is working towards ‘appropriate technical and organizational measures’, as specified by GDPR

- SaaS vendors have been mapping incoming and outgoing data flows, and how data is processed and stored, and demonstrating that they can meet users’ requirements for the right to erasure, data portability, etc.

- ADM vendors have been performing application design services as part of an SDLC as a matter of principle for years, and will not require drastic changes beyond possibly expanding the use of pseudonymization

- Application security vendors have been performing vulnerability and compliance testing as a core service, and have added provisions to perform GDPR gap analysis.

DPO services

A service that NelsonHall expects to grow fast is Data Protection Officer (DPO) outsourcing. The DPO role (required for data controllers and processors alike) can either be internal or outsourced (provided that the DPO can perform their duties in an independent manner and not cause a conflict of interest).

Of the vendors we have spoken to about GDPR services over the past year, none had a defined DPO outsourcing service in place, and only one (LTI) has been working towards a defined service. LTI is currently in the process of training DPOs, and is investigating exactly how the service should be offered. NelsonHall expects to see a number of distinct offers around DPO emerge from IT services and law firms very soon.

Not long now…

With the impending enforcement of GDPR less than 200 days away, and services from vendors solidifying, organizations would do well to start considering services now emerging to help them work towards compliance.


The EU's General Data Protection Regulation (GDPR) was adopted in April 2016 and will be put into force on 25 May 2018. The unified and enforceable laws contained in the regulation replace the outdated rules (that could be interpreted differently by each member state) contained in the 1995 EU Data Protection Directive.

The regulation is of critical importance to organizations because of the steep fines that can be levied for failing to meet the requirements – up to €20m or 4% of global annual turnover for the preceding financial year (whichever is greater) for serious breaches, and €10m or 2% of turnover in less serious cases such as procedural failures.
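The two fine tiers can be expressed as a simple calculation. The sketch below uses the caps quoted above and applies the ‘whichever is greater’ rule to both tiers, as Article 83 of the regulation does; the function name and the sample turnover figures are illustrative.

```python
def max_fine_eur(global_turnover_eur: int, serious: bool = True) -> int:
    """Upper limit of a GDPR fine for a given global annual turnover.

    Serious breaches: the greater of EUR 20m or 4% of turnover.
    Less serious cases: the greater of EUR 10m or 2% of turnover.
    """
    fixed_cap, percent = (20_000_000, 4) if serious else (10_000_000, 2)
    return max(fixed_cap, global_turnover_eur * percent // 100)


# Illustrative turnovers of EUR 100m and EUR 2bn.
for turnover in (100_000_000, 2_000_000_000):
    print(turnover, max_fine_eur(turnover), max_fine_eur(turnover, serious=False))
```

For smaller organizations the fixed cap is the binding limit; the percentage only takes over once turnover exceeds €500m.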

It is worth noting, however, that these are maximum levels that can be imposed by the supervisory bodies within countries, and in reality they may be much lower. The U.K. information commissioner, Elizabeth Denham, who will be leading the enforcement of GDPR in the U.K., has stated that early talk of fines at such high levels amounts to scaremongering, and that ‘issuing fines has always been, and will continue to be, a last resort’. As a proof point, in the last financial year the U.K. ICO conducted 17k investigations, of which just 16 resulted in fines.

Additionally, authorities may be even less able to handle the number of cases related to GDPR after the May 2018 enforcement period begins due to the level of staffing. The U.K. ICO is particularly strong, with 500 personnel, and plans to add 200 new positions over the next two years to help cope with the increasing number of cases related to GDPR. Other member states have lower headcount levels.

Hence, the indications are that strict enforcement may not happen from the outset when the regulation comes into force, and that organizations shown to be working towards meeting the regulation may be given some leeway. Nevertheless, organizations should be looking to start on the road to compliance as soon as possible.

The GDPR exercise should not be seen as one of solely checking boxes to avoid being fined, as there are a number of benefits to organizations in being compliant:

- GDPR can be seen as a chance to review the company’s data handling processes, restructuring them not only to meet compliance, but also to identify potential efficiency gains or new business opportunities/revenue streams

- Increasing the level of security of user data through encryption or pseudonymization will build trust with users, as breaches in the organization's cybersecurity are less likely to impact them

- By performing a review of IT processes, organizations will be able to identify and eliminate ‘shadow IT’ and build proper processes that are known to the organization

- It is a chance to improve IT systems and processes behind the scenes, e.g. through the implementation of customer identity and access management (CIAM) and backup systems.

In the second blog on GDPR, I will look at how IT services vendors can help companies meet GDPR compliance.


When NelsonHall spoke to Atos earlier in the year about its managed security services, there was a clear push to move clients away from reactive security to a predictive and prescriptive security environment, so not only monitoring the end-to-end security of a client but also performing analytics on how the business and its customers would be affected by threats. Atos’ “Security at Heart” event two weeks ago provided more information on this.

I recently blogged about IBM’s progress in applying Watson to cybersecurity; Watson ingests additional sources such as security blogs into its data lake and applies machine learning to speed up threat detection and investigation. At face value, the prescriptive SOC offering from Atos isn’t very different in that it starts with a similar goal: use a wider set of security data sources and apply machine learning to better support clients.

With Atos’ prescriptive security approach, it has increased the amount of security data in the data lake that it analyzes. This information can come from threat intelligence feeds, contextual identity information, audit trails, full packet and DNS capture, social media, and information from the deep and dark web.

Atos highlights its ability to leverage the analytics and big data capabilities of its bullion high-end x86 servers to apply prescriptive analytics to the data in its data lake, then use the information, through McAfee’s DXL data exchange layer and threat defense life cycle, to automate responses to security events.

Using this capability, Atos can reduce the number of manual actions that analysts are required to perform from 19 to 3. The benefits are clear: cyber analysts have more time to focus on applying their knowledge to secure the client, and the speed and completeness of the service offered increase. Atos claims its Prescriptive SOC analyzes 210 indicators of compromise compared to 6 in the previous service, reducing the time to respond to a threat from 24 hours to under seven minutes, and time to protect against a threat from 4.2 hours to around one minute.

Atos has been beta’ing its prescriptive managed security offering with several clients, mainly in the financial services sector.

Another highlight of the event was Atos’ Quantum computing capabilities, with the release of its Quantum Learning Machine (QLM) quantum computing emulator. These investments in quantum computing in effect future proof some of its cybersecurity capabilities.

The general consensus currently is that scale use of quantum computing by enterprises is still around a decade away. When this happens, quantum computing will add a powerful weapon to the threat actors’ arsenal: the ability to break current encryption methods. Atos' current investment in quantum computing, and specifically its quantum computing emulator, will help organizations develop and test today the quantum applications and algorithms of tomorrow.


WannaCry ransom message

Each time this ransomware infected a new computer it tried to connect to a domain; if it could not reach the domain, WannaCry continued to spread. To aid its spread, WannaCry utilized a tool known as EternalBlue to identify and exploit file sharing protocols on the infected systems. EternalBlue is a hacking tool developed by the NSA, then stolen by a group called Shadow Brokers and dumped online in April.

With the online dump of the vulnerability, known as MS17-010, Microsoft went about producing and releasing a security patch to fix the vulnerability, quickly pushing the update live to its current operating systems. Unfortunately for some, operating systems that had been EoL’d before the attack, namely Windows XP, did not have a security patch released initially, as Microsoft usually charges to provide custom support agreements for old versions of Windows.

Organizations hit that have in part remained on XP include Telefónica, the NHS, FedEx, Renault, and police and petrol stations in China.

Advice for ransomware is to:

- Isolate the system

- Power off

- Secure any data backups

- Contact law enforcement

- Change account passwords.

The FBI had previously released a controversial statement saying it often advises people to pay the ransom, though it does state that paying is no guarantee of getting data back.

The trouble with any advice to pay WannaCry is the mechanism it has to release infected systems. WannaCry has no process to uniquely identify which infected machines have paid the ransom, and therefore the likelihood that any infected machines will be released by the attacker is low. Nevertheless, the hackers’ bitcoin wallets have received more than 230 payments totaling more than $65k.

So what can be done about WannaCry and other similar ransomware?

After two days, Microsoft released a patch for Windows XP to fix the vulnerability.

Before this, the attack had been slowed by a security researcher who analyzed the code of WannaCry and detected the kill switch domain it had been attempting to connect to. By registering this domain (for less than $11), the researcher ensured that newly infected systems reached the kill switch and did not go on to spread the ransomware. However, a second version of WannaCry has since been released without this kill switch.

Organizations should be looking towards their IT service providers to mitigate the threat.

In the immediate timeframe, clients should look towards their service providers to download and apply all applicable OS security patches and antivirus updates and look at what data DR systems can restore.

Moving forwards, organizations should be looking with their IT service providers at:

- Performing a cybersecurity vulnerability analysis to assess the current state of affairs, discover the organization's crown jewels, and close vulnerabilities

- Developing business continuity plans to ensure even if/when a cyberattack occurs, the organization knows how to react and reduce the impact

- Developing cybersecurity training programs to reduce the chance staff will download malware/ransomware.

As part of a wider conversation, if an enterprise has business critical infrastructure that remains on outdated OS’s, it should be looking at how these systems can be secured. These systems could be upgraded to more current OS’s, or if legacy processes or applications prevent this move, perhaps look at other methods of protecting these systems such as air gapping the infrastructure or even paying for Microsoft’s extended service agreement.

In the case of the NHS, at the end of 2016 an incredible 90% of NHS Trusts were still using Windows XP in some capacity, yet last year the U.K. Government Digital Service decided not to extend a £5.5m one-year support deal that would have included security patches. We imagine there are some red faces at GDS. The decision not to extend this support deal has now had a huge impact in some areas of the NHS, in some cases causing delays in the delivery of life-saving services. There are clearly lessons to be learned in both the public and private sector about managing old estates.

NIIT Technologies has a clear focus on a few target industries, with Travel & Transportation accounting for nearly a third of its global revenues, Insurance nearly 25% and Banking & Financial Services another 20%. From a service line perspective, ADM represents the bulk of activity (around two thirds of global revenues) and infrastructure management services another 17%.

An emphasis of the half-day event was how NIIT Technologies is moving away from its traditional model, one that was essentially ‘lift and shift’ with expertise and software IP in a few select sectors, to one that is more clearly focused on customer satisfaction and digital outcomes.

CEO Arvind Thakur outlined three strategic priorities for the company:

- ‘Smart IT’ (automation/AI)

- Superior Experience

- Scale Digital

Smart IT: Enter TRON

Not the 1982 original, nor the 2010 sequel (both starring Jeff Bridges), TRON is the moniker that NIIT Technologies has recently given to its growing portfolio of smart automation tools for use across infrastructure, applications and business process services. It has partnerships with the likes of UiPath for BPO and with Nanoheal for its IT helpdesk operations. Expect to hear more partnership announcements and additions to TRON.

Superior Experience: Hi 5 for a millennial workforce

Thakur reminded us how NIIT Technologies has sought for some time to engender an organizational culture of “Up Your Service”, with its ‘Hi 5’ approach.

Hi-5

The approach is geared toward a younger workforce and explicitly encourages employees to be customer-centric, to question everything, and to have the confidence to unleash new ideas. We imagine Thakur’s personal pleasure whenever a young employee approaches him with a high five.

Current initiatives include widespread training on design thinking and building design studios. Again, expect to hear more about the latter.

Scale Digital

NIIT Technologies has joined the ranks of service providers who are revealing what proportion of their revenues come from “digital services” (though none specify what these cover). In its most recent (Oct-Dec 2016) quarter, management claims that digital services accounted for 19% of its total revenue, up from 15% in the prior year quarter. More important than the percentage itself is the level of topline growth this indicates: by our estimates, this represents a y/y growth of nearly 30% from “digital services”. For a company whose overall topline grew by just 2% last quarter, ‘Scale Digital’ is clearly a priority.
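The growth estimate for digital services follows from the two revenue shares and the overall topline growth. The sketch below reproduces the arithmetic, assuming the 2% topline figure is a year-on-year rate for the same quarter; the function name is ours.

```python
def implied_segment_growth(share_now, share_prior, total_growth):
    """Growth of a segment, given its revenue share in two periods
    and the growth of total revenue between those periods."""
    return share_now * (1 + total_growth) / share_prior - 1


# 19% share now vs 15% a year earlier, with total revenue up 2%.
growth = implied_segment_growth(0.19, 0.15, 0.02)
print(f"{growth:.1%}")
```

The result is a little over 29%, consistent with the ‘nearly 30%’ estimate, and implies that the non-digital business shrank slightly over the same period.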

New Global Head of Digital Services

The importance of the Scale Digital strategic pillar is reflected in the decision not to replace the COO position but instead to create a new EVP role of Head of Digital Services, to which Joel Lindsey (ex. HPE digital transformation program lead) was appointed in January. It is still early days; we will be looking with interest to see where and how he chooses to focus.

Digital Experience

NIIT Technologies’ clients are primarily in B2C sectors (T&T, BFSI, media), where digital transformation strategies have centered around the customer experience. Accordingly, NIIT Technologies describes its approach to digital as using a deep understanding of customers’ pain points and moments of truth to help clients develop sector-specific ‘Digital E3’ (Emotionally Empathetic Experiences):

- Travel and transport, being made to "feel special"

- Investment banking, "feeling secure"

- Insurance, "feeling cared for".

Digital Analytics

We also heard briefly about ‘Digital Foresight’, a proprietary framework and platform which integrates internal company data, external public data (from commercial sources and from social media), and applies predictive analytics. The focus is on BFS and transportation sectors, and organizations handling large volumes of transactions. See an earlier blog about Digital Foresight here. Again, we expect to hear more about ‘Digital Foresight’ and its application in other sectors.

Platforms: new head of NITL

Another element of NIIT Technologies’ positioning on its abilities to support clients on their digital transformation journeys is its platforms business, in particular, its London market software arm NIIT Insurance Technologies Ltd (NITL). Examples of investments in recent years include the Navigator, Acumen and Exact components for multi-market analytics and risk aggregation, and the Advantage multi-market microservices platform, which also supports technologies such as IoT.

The NITL business is not a major revenue generator in itself and growth is currently flat (a consequence of Brexit), but it is a high margin (~20%) business that provides NIIT Technologies with a clear presence in the London Market.

And, again, there is a recent EVP-level hire to head NITL: Adrian Morgan (formerly at London Market competitor Xchanging where he established Xuber, then at CSC where he was also UK Head of Digital for Insurance). His appointment indicates the importance being attached to growing NITL (which has had around $25m investment in platform development in recent years) – perhaps Morgan will try to take Advantage into other regions such as Singapore? Certainly, we should expect to hear more about NIIT Technologies platforms business.

NIIT Technologies has had several quarters of low single-digit growth, impacted most recently by short-term headwinds such as client-specific weakness in the U.S. travel sector and an Indian government sector contract, and by current uncertainties in the London market due to Brexit. A return to double-digit growth is not likely in the next couple of quarters.

However, there is a very evident emphasis from the top on driving automation and on shifting its business to digital services and to growing the platforms business. In these regards, NIIT Technologies is holding its own with much larger IT services players. The company also benefits from its strong focus on a few target sectors, in several of which it has a significant presence.

The EVP-level appointments of Joel Lindsey and Adrian Morgan (and the naming of TRON!) are clear indicators of investment priorities. We expect to see some interesting announcements coming out of NIIT Technologies in the next few years.

Mike Smart and Rachael Stormonth

Innovation Hubs

Crédit Agricole (CA), one of BearingPoint’s largest clients in France, set up Le Village by CA in 2014 as an innovation hub to nurture startups, and not just in fintech. BearingPoint has contributed to the establishment and operation of the innovation village since CA Group’s initial reflections on the project. Using a network of partners including the likes of BearingPoint, Microsoft, HPE, IBM, Orange and Philips, the village hosts ~100 startups with solutions inside and outside of the financial services space.

The center is in prime real-estate in Paris, surrounded by the headquarters of many of France’s largest enterprises. This proximity and the partnerships allow the start-ups to gain unprecedented access to these organizations. In some cases, through the coaching provided by the partners and this access, start-ups have saved six months in sales development.

Each month, start-ups pitch to the partners for the ability to enter the village, with pitches lasting a tightly defined 13 minutes: 5 minutes of pitch time, 5 for Q&A and 3 for decision making. Successful start-ups receive the ability to rent space in the village at half price, and support & mentoring through the expertise of CA and the partners for up to two years. BearingPoint highlights the importance of this mentoring: ‘incubating is not enough, now you need coaching’. BearingPoint’s contribution includes start-up mentoring and support programs and offering participation in think tanks and other events. Through this coaching the center aims for ~50-60% start-up success, with BearingPoint and other partners offering co-creation of solutions.

Since its inception CA has established 8 such villages, supporting 237 startups across France, and plans to open 30 more villages in 2017.

Global Alliance Network

BearingPoint’s global alliance network includes ABeam Consulting, Grupo ASSA, and West Monroe Partners. Each alliance partner adds geographic coverage: ABeam Consulting in APAC, primarily Japan; BearingPoint in EMEA; Grupo ASSA in Latin America; and West Monroe Partners in North America. The global alliance network has 10.2k FTEs across 35 countries with revenues of ~$1.5bn (of which BearingPoint accounts for $655m).

The global alliance network promotes ‘working as one group’, and is co-developing centers as a group effort. It intends to open a mobile innovation center, starting in Vienna hosting ~10 start-ups, and thereafter in APAC.

Each alliance member owns the relationship of clients headquartered in their geography, leveraging the global alliance capabilities. The first cross-alliance contract is Continental, where BearingPoint owns the relationship, with resources from West Monroe, Grupo ASSA and ABeam providing SAP integration services across their respective geographies.

The global alliance network will help BearingPoint serve its clients with global operations – its target 2020 revenue for RoW is €65m.

Operating Model

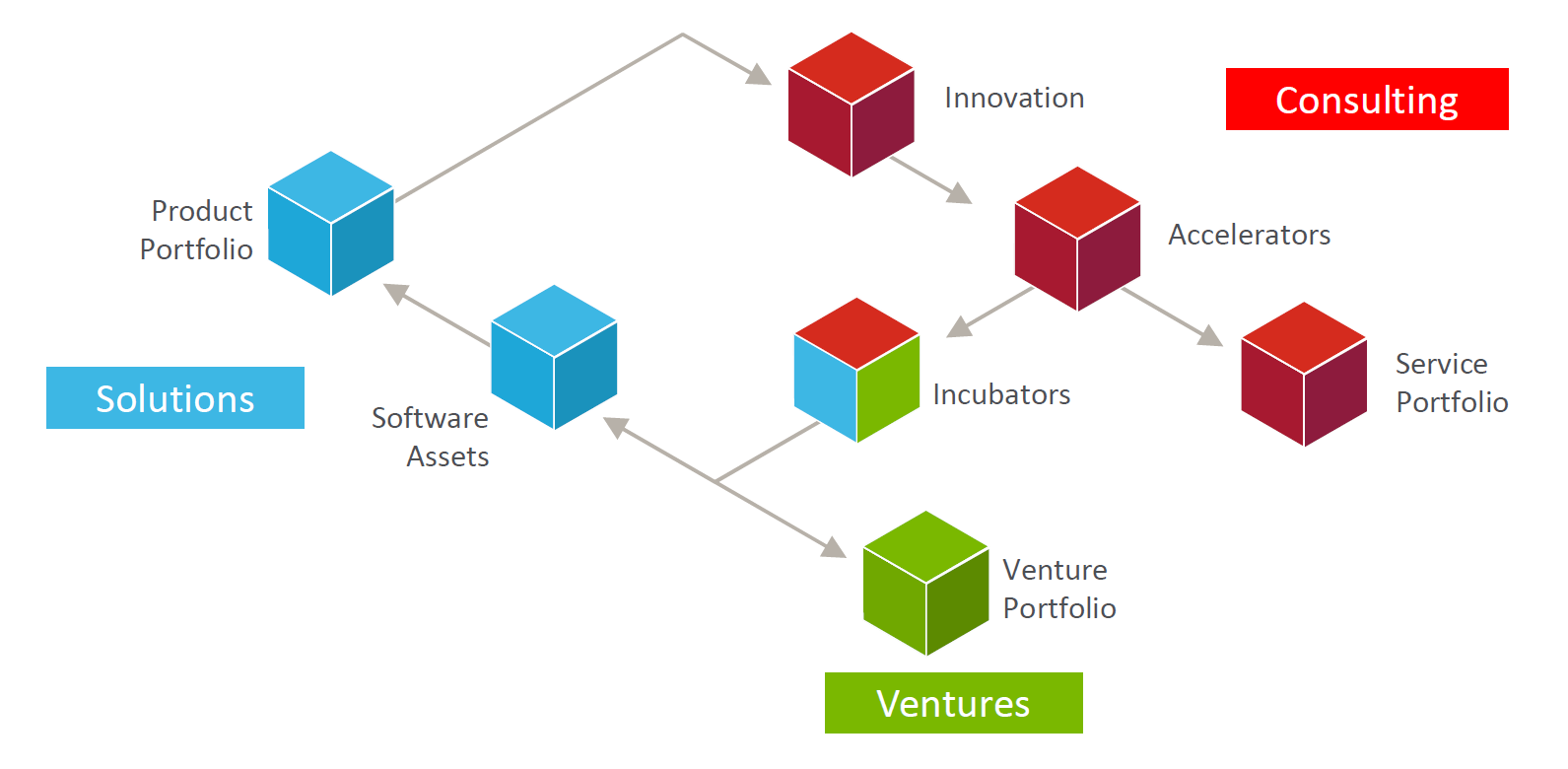

BearingPoint’s operating model is designed to support innovation across its Consulting, Solutions and Ventures organizational structure.

BearingPoint runs shark-tanks twice yearly out of innovation hubs such as Le Village by CA to choose innovative companies to invest in for its Ventures unit.

With this operating model, in 2016 BearingPoint generated 420 new ideas from its consultants, drawing on their experiences in client engagements; created 11 accelerators that have generated €47m in new sales; and spun off its first venture, a blockchain technology for financial institutions, which was acquired by Digital Asset Holding.

This model has been a success; global alliance partner ABeam is working on implementing a similar incubator platform in 2017/18.

BearingPoint’s 2020 ambitions include €1bn in revenues and a 15% EBIT margin. Three key elements of BearingPoint’s growth strategy are:

- Demonstrating its global reach through its global alliance network

- The solution business adding €100m to its current €139m revenue

- M&A to fill gaps in the portfolio and to support growth in strategic areas. This week, for example, BearingPoint announced the acquisition of LCP Consulting to strengthen its presence in the retail sector.

NelsonHall estimates the Smart Energy IoT outsourcing market to be worth ~$260m in 2016, sitting third behind the Connected Car and Smart Manufacturing IoT markets. Smart Energy is of major importance, with government policy around the world strongly influencing its widespread adoption, and here I take a brief look at the background of the Smart Energy IoT market, and at some of the work currently being carried out by outsourcing vendors in this space.

The emergence of Smart Energy IoT

Energy generation is underpinned by relatively large and isolated fuel burning power plants (around 60% through the burning of natural gas and coal). However, through the use of the Internet of Things (IoT) and other related technologies this will be replaced by distributed grids.

These distributed grids enable a more flexible network topology, with distributed energy generation relying heavily on the use of renewable energy sources such as solar. These smaller energy generators can be used to form microgrids rather than relying on a costly nationwide grid to transmit the entire energy load over large distances from source to distribution end point. IoT is used to monitor the performance of each individual source, e.g. down to the level of each individual solar panel.

As well as being used at the source, IoT is being used heavily at the end point, and in particular for smart meters. Smart meters can send usage readings to the utility provider at much greater frequency than traditional meters, which in turn gives the utility better forecasting of power consumption needs and the ability to perform remote management.

The early stages of these smart grids are already in progress. Government initiatives are helping the spread of smart meters, in particular in China, where current estimates show that by 2017, 95% of households will have a smart meter installed. And a 2012 EU Energy Directive has the target that by 2020 smart meters will be rolled out to 80% of EU households.

Smart Energy IoT outsourcing initiatives

IT outsourcing vendors are working on a variety of Smart Energy IoT initiatives, including:

- Atos’ work with ERDF to build an automated meter management system

- Capgemini supporting BC Hydro to implement a smart meter program

- Capgemini, with its SES platform to provide end-to-end smart metering services, currently in use by E.ON Elnät in Sweden and BC Hydro

- CGI, supported by Telefonica, providing smart meter rollout and the building of the U.K.’s smart metering shared infrastructure for utility services

- Dell’s use of Dell Edge Gateways to build a reliable IoT solution for microgrids for ELM

- HCL using predictive analytics for an oil and gas client to reduce the number of failures and the required time to fix hardware

- IBM working with a North American energy company to develop smart grid and meter technology which allowed consumers to save ~15% on normal energy usage

- TCS providing pipeline monitoring with image analytics

- Tech Mahindra developing an IoT gateway and cloud portal to monitor PV farms for an EU solar equipment manufacturer

- VirtusaPolaris testing the uses of IoT for smart water utility management as part of a mini-smart city with the International Institute of Information Technology, Bangalore

- Wipro’s work with a U.S. smart meter manufacturer at Wipro’s smart energy lab.

Outlook

With current utilities aging badly and lacking the investment that would enable them to cope with increasing demand loads and move towards distributed energy generation from renewables, the need to cut down on leakage, maximize production capability and capacity, and make the most of energy sources will become ever more important. And in turn, the use of IoT in the Smart Energy sector will become increasingly important in the coming years. NelsonHall forecasts the Smart Energy IoT outsourcing market to grow from its 2016 level of ~$260m to approaching $1bn by 2020.


Smart Cities is one of the fastest growing segments within the burgeoning internet of things (IoT) outsourcing market. The global market for Smart Cities IoT outsourcing is currently worth just ~$140m; NelsonHall forecasts it to grow at 75% CAGR over the next five years, taking it to ~$2.15bn by 2020.

IoT is being applied in commercial buildings and homes, and in urban infrastructures such as utilities and traffic management. IoT integrated into urban infrastructures will enable cities to gather, store and share data about how citizens interact with the city, with the aim of increasing efficiency through better control of the environment. This can take many forms: for example, in the case of Smart Streets, IoT can be used to monitor vehicles, gathering data such as speed, direction, license details, and number of passengers. Using cognitive computing and big data analysis, traffic flow can be improved and streets can be made safer and be better designed.

When combined with the use of connected vehicles, the entire road network can be transformed. Research conducted by MIT’s Senseable City Lab looked at the use of intelligent slot-based road intersections which automatically adjust vehicle speeds to coordinate arrivals at intersections and remove the need to stop and wait at traffic lights. While this reduces average journey time per car, secondary benefits include reduced pollution and less vehicle wear and tear.

Similar thinking can be applied to other city infrastructures such as sewage and water treatment, parking, and street lighting.

In buildings, efficiencies can be made by better understanding energy usage within the building and relaying this information to building control systems. In one case, TCS implemented an IoT-based solution into large industrial cooling units for an Indian building owner. By monitoring the coolers’ flow rates, inlet and output temperatures, and equipment status (in addition to temperature throughout the building and the local weather conditions) TCS was able to achieve energy savings of around 4%, in addition to reducing the chiller downtime by 10%. TCS has enabled the solution to be reusable and to work for building heaters as well as coolers.

Additionally, safety and standard of living can benefit from IoT through the use of connected smoke alarms, door locks, and smart lighting, windows and blinds.

While the consumer market has been relatively slow to integrate these technologies into buildings, the commercial market is growing faster, with capital investment in the integration of IoT solutions into existing building management systems starting to deliver returns.

Inhibitors to applying IoT much more widely across cities include the difficulty of coordinating across government agencies, and the constraints of outdated regulations. Hence, extensive Smart City development is for now limited to specific, focused initiatives such as:

- India’s Smart City Mission project to build 100 smart cities

- Dubai 2021, a smart city strategy including over 100 initiatives and a plan to transform 1,000 government services into smart services

- Barcelona’s smart metropolis strategy

- Australia’s Commonwealth Smart Cities Plan.

IT outsourcing vendors active in the Smart Cities space include:

- Accenture – working with Chicago CityWorks and Seattle for smart buildings

- CSS Corp – working to improve airport efficiencies and thermostats in retail buildings

- IBM – with its Smarter Cities challenge

- Dell – working with Fujian Province, China and an unnamed hotel to improve boiler efficiencies

- NIIT Technologies – working with an insurance provider to discover what electricals are in use in buildings

- PTC – working with All Traffic Solutions for connected traffic safety equipment

- Tech Mahindra – working on smart cities in Dubai, Milton Keynes (England), and a number of Indian cities

- TCS – providing building chiller/heater management and airport customer tracking

- Virtusa – working with an airport for customer tracking.

Smart City initiatives are emerging fast, and the related IoT outsourcing market is growing alongside. After E-Healthcare and Smart Retail, Smart Cities is the third fastest growing area of IoT adoption globally.

For example, in a series of pilots run in collaboration with Dell, Cloudera, Mitsubishi Electric, and Revolution Analytics, Intel investigated how the use of IoT and data analytics could be applied to factory equipment and sensors to deliver operational and cost efficiencies. By integrating sensors on the testing interface units (TIUs) of its automated test equipment (ATE), Intel was able to identify defective TIUs that were wrongly categorizing good units as bad. The approach predicted up to 90% of potential failures before they were triggered by the factory’s existing process control system and, by replacing the TIUs early, Intel was able to reduce yield costs by up to 25% and reduce spare parts costs by 20%.

Another Intel pilot applied image analytics on microchips with the result that defective chips were identified ~10 times faster than using manual methods. And in another case, Airbus embedded electronics into its assembly line tools and used PTC’s IoT platform to enable engineers to rely less on user manuals for determining, for example, the level of torque required for the assembly of specific parts.

So, is IoT being used purely as an efficiency play by manufacturers? In short, no! The advantage of IoT is that it isn't restricted to the manufacturing machines or tools – manufacturers can attach sensors to everything. There have been numerous cases of using wearables to monitor workers’ locations to increase safety, for example.

Manufacturers are also looking to IoT to build relationships with their clients. For example, a printer manufacturer contacted HCL to use its printers’ existing sensors together with an IoT platform in order to monitor the use of its products after sale. Whereas before the deployment of the IoT solution the manufacturer interacted with the client once during the sale, by monitoring the products’ usage the manufacturer was able to offer aftermarket sales and service which it estimates to be a $2bn revenue stream. Similar projects have been able to ‘aaS’ify other types of products.

Generally, every vendor building an IoT offering is targeting the manufacturing industry due to the large opportunities it presents. Just a few examples of vendor activity in this space are:

- Atos’ work with John Deere

- CGI’s elevator maintenance with ThyssenKrupp

- Dell’s work with Scapa Group to use IoT to automate manual processes

- PTC’s remote diagnosis of Diebold ATMs

- Tech Mahindra increasing the efficiency of a ready-mix concrete manufacturer

- Wipro’s contract with ESAB Welding & Cutting Products to track, monitor and optimize welding equipment.

So, while increased efficiency is the leading driver of IoT projects within the manufacturing industry, this is not the only game in town. The influence of IoT on building new services and improving the manufacturing experience for workers is also significant.

NelsonHall estimates that the manufacturing IoT market is currently worth $220m and forecasts 46% growth (CAGR) over the next five years, taking it to $1.45bn by 2020.
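The arithmetic behind a forecast of this kind can be checked with a simple compounding calculation. The sketch below is illustrative only: the function name `project` is an assumption, and the inputs are the rounded figures quoted above rather than NelsonHall's underlying model.

```python
def project(current_value, annual_growth, years):
    """Compound a starting value forward at a constant annual growth rate."""
    return current_value * (1 + annual_growth) ** years


# Figures quoted in the text: $220m today, 46% CAGR, five years.
forecast_musd = project(220, 0.46, 5)
print(f"Projected market size: ${forecast_musd:,.0f}m")
```

Compounding $220m at 46% for five years gives a little under $1.46bn, in line with the ~$1.45bn quoted once rounding of the inputs is allowed for.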

IoT can be used to build connected vehicle systems to enable:

- Vehicle-to-vehicle communication so that vehicles can update each other on road conditions, allow vehicles to properly maintain safe distances, etc.

- Vehicle-to-OEM communication so that vehicle manufacturers can build stronger relations with drivers. Using data on the use of a vehicle, the manufacturer can perform remote diagnostics and predictive and preventative maintenance

- Vehicle-to-third party communication facilitating new business models; e.g. telematics for car insurance, smart parking, navigation systems, entertainment streaming services, smart fueling stations

- Vehicle-to-infrastructure communication, with connections to traffic lights, toll stations, and dynamic speed limits.

The use of these systems will change how vehicles are bought/sold, managed, driven and maintained, with the primary aims of increasing safety, adding revenue for OEMs, improving the driver experience, and delivering efficiencies for third parties.

The consumer IoT market for connected car systems can be subdivided into categories including driver assistance, safety, and in-car entertainment. Currently the largest segment of the connected car market is dedicated to increasing the safety of motoring, either through the use of telematics systems or for accident reporting.

An easy entry point into the market is in the development of driver scores through the use of telematics. For a relatively small investment, black boxes can be installed to measure acceleration, speed, position, and cornering. Vendors can use ADM capabilities to develop driver scores which can be used by insurance providers or for fleet tracking. The majority of vendors operating in the IoT space have some form of telematics or fleet management offering, including relatively new entrants such as NIIT Technologies.
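To make the idea of a telematics-based driver score concrete, here is a minimal sketch. It is not any vendor's scoring method: the `Sample` structure, the thresholds, and the penalty weight are all hypothetical values chosen for illustration, and position data is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    speed_kmh: float    # vehicle speed reported by the black box
    accel_ms2: float    # longitudinal acceleration (negative = braking)
    lateral_ms2: float  # lateral acceleration while cornering


# Hypothetical thresholds and weight, for illustration only.
SPEED_LIMIT_KMH = 110.0
HARSH_ACCEL_MS2 = 3.0
HARSH_BRAKE_MS2 = -3.5
HARSH_CORNER_MS2 = 4.0
PENALTY_PER_EVENT = 5.0


def driver_score(samples):
    """Score a trip from 0 (worst) to 100 (best) by counting harsh events."""
    events = 0
    for s in samples:
        events += s.speed_kmh > SPEED_LIMIT_KMH
        events += s.accel_ms2 > HARSH_ACCEL_MS2
        events += s.accel_ms2 < HARSH_BRAKE_MS2
        events += abs(s.lateral_ms2) > HARSH_CORNER_MS2
    return max(0.0, 100.0 - PENALTY_PER_EVENT * events)


trip = [
    Sample(50.0, 1.0, 0.5),   # normal driving
    Sample(120.0, 0.2, 0.1),  # speeding
    Sample(60.0, -4.2, 0.3),  # harsh braking
    Sample(40.0, 0.5, 4.5),   # harsh cornering
]
print(driver_score(trip))
```

A production score would typically normalize by distance driven and weight event types differently; the flat penalty here simply keeps the example short.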

While the use of telematics, predictive maintenance, and fleet management may currently be the largest application of IoT, the biggest opportunities are in building driver assistance and in-vehicle entertainment. In the self-driving space, fully self-driving vehicles have the chance to change multiple industries dramatically in years to come. If vendors can support manufacturers in developing near 100% reliable onboard real-time analytics (provided that regulation supports their use), the potential market for the autonomous vehicle is enormous. Apart from consulting and systems integration of these systems, the building of connected applications that require real-time analytics, and platforms and infrastructure to support the influx of data is a huge opportunity. The obvious caveat to this market becoming established is the issue of security, following recent revelations of system vulnerabilities enabling attackers to take control of a vehicle’s acceleration and braking, for example.

However, on the assumption that before long the self-driving vehicle market will take off, vendors have been working with vehicle OEMs and banks to extend the use of in-car entertainment systems – e.g. to provide shopping facilities. From a car’s infotainment system, passengers will be able to secure tickets to an event, or pay for a drive-through, automatically using beacon technology. Vendors working in this space include:

- Atos’ work with Renault to use Renault’s R-Link tablet for in-car shopping

- Accenture’s PoC with Visa for ordering fast food (pizzas).

In the wider connected vehicle space, vendors are primarily supporting clients in maintaining transport infrastructure and providing asset management. Examples of this include:

- Capgemini’s Linear Asset Decision Support solution used by Network Rail, which uses data from a number of sensors, including carriages fitted with rail fault detectors, for preventative maintenance

- CGI supporting ProRail by developing the Train Observation and Tracking System (TROTS).

The connected vehicle space has had some of the largest adoption rates of IoT, mainly due to the easily demonstrable benefits to end-clients and third parties, and the numerous potential applications. NelsonHall estimates that the connected vehicle IoT market is currently worth $250m and forecasts 43% growth (CAGR) over the next five years, taking it to $1.5bn by 2020.

A major market analysis report on IoT will be published very soon as part of NelsonHall's IT Services program. To find out more, contact Guy Saunders.

A typical large organization receives millions of security messages per year that require filtering from system logs to identify genuine security threats. One case example that demonstrates the effectiveness of security event filters is the work done by Atos for the 2012 London Olympics. Atos monitored 255 million syslogs, from which 4.5m significant events were identified, of which 5k were passed into the security operations center (SOC). Atos was able to reduce the number of security incidents to a manageable number and prevent any security incidents impacting live competition.
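The scale of this filtering is easier to appreciate as ratios. The short sketch below uses only the three counts quoted above; the stage labels and the helper name `stage_retention` are assumptions made for illustration.

```python
# Event counts reported for Atos at the 2012 London Olympics (from the text).
funnel = [
    ("syslog messages", 255_000_000),
    ("significant events", 4_500_000),
    ("events passed to the SOC", 5_000),
]


def stage_retention(stages):
    """Return (stage name, % kept from previous stage, % of raw input) per stage."""
    raw = stages[0][1]
    rows = []
    for (_, prev_count), (name, count) in zip(stages, stages[1:]):
        rows.append((name, 100 * count / prev_count, 100 * count / raw))
    return rows


for name, pct_prev, pct_raw in stage_retention(funnel):
    print(f"{name}: {pct_prev:.3f}% of previous stage, {pct_raw:.4f}% of raw logs")
```

On these figures, fewer than 2% of log messages were flagged as significant, and only around one in 50,000 raw messages reached SOC analysts.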

NelsonHall’s latest IT Services report, Managed Security Services, examines the state of the global Managed Security Services (MSS) market, which we forecast to grow from $6.8bn in 2014 to $14.6bn by 2019 (16.5% CAAGR).
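The quoted growth rate can be recovered from the two endpoint figures. This is a quick consistency check rather than a reproduction of the report's methodology; the function name `implied_growth_rate` is an assumption.

```python
def implied_growth_rate(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# $6.8bn in 2014 growing to $14.6bn by 2019, as quoted in the text.
rate = implied_growth_rate(6.8, 14.6, 2019 - 2014)
print(f"Implied annual growth: {rate:.1%}")
```

The result rounds to the 16.5% stated in the forecast.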

The report reveals that the largest challenge for organizations in securing their operations is the rising cost of cybersecurity. Other key challenges include access to cybersecurity skills, the ability to respond quickly to threats, and the ability to gain a holistic view of cybersecurity (i.e. across the entire attack surface area).

The war for cybersecurity talent is particularly fierce, with organizations finding it difficult to staff their own SOCs. An example is specialty pharmaceutical company ProStrakan, whose team of six cybersecurity FTEs had difficulty in maintaining its security profile against ever increasing legislation, and which outsourced its IT infrastructure security management and vulnerability management to Dell.

The advantages of choosing an experienced security partner include:

- Increased speed of response

- Assistance in building proactive incident response plans

- Adding complementary services such as legal support/cyber insurance.

Currently, the top three MSS vendors by market share in the major regions are:

- Global – HP, Dell, IBM

- N. America – SAIC, AT&T, HP

- EMEA – Atos, HP, BT Global Services.

Critical success factors for MSS vendors include:

- A strong understanding of the entire IT security landscape, typically through a high level of security research

- An understanding of IT security in the context of the organization's security needs and specific industry sector

- Access to expertise for best of breed tools that the client organization lacks, and a willingness to work with existing security tools while offering best of breed tools.

The report Managed Security Services examines the changing shape of the MSS market, reveals customer requirements, market size & growth and vendor market shares by geography, and provides an analysis of critical success factors in this burgeoning market. To find out more, contact Guy Saunders.

Highlighted at the event were the benefits achieved from Dimension Data’s 2010 acquisition by NTT. This has not only enabled topline growth but has also helped Dimension Data shift from being a hardware-centric to a services-centric business, and one that is able to offer complementary services through the group; for example, Dimension Data utilizes NTT Data in applications services and its research arm NTT i3 in cloud, security, infrastructure, and IP around machine learning.

Currently, around $1bn of Dimension Data’s overall revenues are generated from data center management services: its ambition is to quadruple this figure within the next five years. Although this would appear a lofty target, Dimension Data has a solid track record: in November 2010 Dawson shared a target of $7bn in revenue within five years. To achieve its $4bn data center services revenue target, Dimension Data will have to continue at its current rate of growth, which is partly acquisitive. Dimension Data’s data center business is currently stealing market share, both from larger competitors where the client relationship is a traditional IT infrastructure outsource, and from smaller competitors that cannot offer the same level of IP.

As part of its growth strategy Dimension Data is looking to:

- Continue its ‘Simplify to Accelerate’ program across its global operations, with a common service catalog, a single set of reporting and consistent processing across the client base. The program was completed in Europe in 2014, the MEA region is being completed in H1 2015, with the Australian, Asia Pacific and U.S. operations to follow

- Further invest in areas such as cloud data centers and automation of components of its managed services, to bring more activity into an aaS model. Last year Dimension Data invested ~$250m in its portfolio. And in the last three years it has invested a total of ~$750m in acquisitions such as NextiraOne, Nexus, Teliris and Oakton, for geographic and service expansion: a third of its recent growth has been inorganic. Looking ahead, Dimension Data intends to invest ~$100m each year for the next three years in cloud-based offerings. Inorganic growth will also continue to play an important part in future growth, with Continental Europe and the Americas being the obvious target regions

- Reduce the size of the tail of smaller accounts, particularly in its legacy regions such as South Africa, Australia and APAC. Smaller accounts in other regions are felt to offer greater opportunities for growth.

The focus on transforming Dimension Data’s offerings is illustrated by the fact that the top 100 people in the company have 45% of their bonuses based on transformation goals, which include growth in some key services areas. Revenue growth in the last two years in some of these areas is impressive: 87% in data center services, 62% in other IT outsourcing and 50% in ITaaS activities.

While increased collaboration across the NTT group has benefited Dimension Data, the journey is not complete; Dawson mentioned ‘hundreds of millions of dollars’ that Dimension Data could still potentially sell into NTT.

Dimension Data is winning contracts that are helping it gain mindshare. Recent examples in EMEA include a win with Amaury Sport Organization (A.S.O.), the owners of the Tour de France, to become its technology partner, and a partnership with Deloitte Consulting to provide and manage an IaaS layer with its Managed Cloud Platform (MCP) for Deloitte’s offerings around enterprise application and analytic workloads. It also has established relationships with the likes of Airbus and Unilever.

The ‘Simplify to Accelerate’ initiative is critical for Dimension Data to be able to shift from offering essentially regional services to being a global services provider that is a top of mind vendor outside Australia and South Africa.

Dimension Data, itself an acquisitive organization, was acquired by a larger acquisitive group (NTT Group’s other subsidiaries also include NTT Data Inc. and NTT Communications, the latter only partly owned). As a postscript, will we see in the mid-term:

- An increasing focus on integrating various parts of the NTT Group into selected target large MNC accounts

- A new Security services organization that leverages assets and resources from across the NTT Group?

Concerns about security issues are expanding beyond CSOs/CISOs to the rest of the C suite, even commanding the attention of CEOs. HP highlighted that:

- Conversations with clients now focus primarily on the business issues of security, questioning the increasing cost of security versus the level of protection delivered

- Resolving threats is becoming increasingly complex, difficult, and costly.

The increased importance of IT security is a consequence of:

- Attacks on organizations becoming more deadly (recent examples include Target’s CEO being removed after malware was found to have stolen details for 40m customer credit cards, and eBay, where personal information was stolen for 233m customers)

- The transformation of IT infrastructures to cloud and mobile devices

- Needing to comply with increasing regulations (SOX, Basel III, GLBA, PCI etc.).

To illustrate the increasing attention being paid to cyber security: after the recent attack in which customer contact information was taken from 76m households and 7m small businesses, JP Morgan’s CEO stated that JP Morgan will likely double its level of cyber security spend within the next five years.

HP highlighted some innovation it is looking to apply to security operations centers (SOCs). HP described three levels of SOC:

- SOC 1.0, ‘Secure the Perimeter’: base level of security analytics currently employed today by most MSSP vendors

- SOC 2.0, ‘Secure the Application’. HP detailed the monitoring of DNS records within security information and event management (SIEM). Monitoring the DNS gives a much higher number of events than the classic model (21bn vs 4.5bn within HP alone); it also gives a deeper insight into application security. The approach is currently in beta phase internally at HP, and 25% of the malware found so far is new and had not been detected by traditional methods. HP also detailed a case in which this style of DNS records search was used for an external client, using historic logs to capture a number of previously unknown vulnerabilities.

- SOC 3.0, ‘Secure the Business’. The aspirational SOC level 3.0 uses predictive analytics and HP’s threat database to identify the types of threat that a client experiences and then proactively work to reduce the number of threats.

HP describes its internal SOC as currently at level 1.5; the monitoring of DNS records has not yet been rolled out across the company. Reaching level 3.0 – which is about proactive security management – will be a multi-year journey (around five years?) requiring a more sizeable threat database and a large set of use cases. HP will roll out its central threat database to more partners and receive information from as many clients as possible, then utilize big data analytics to discover trends in the billions of events monitored. And of course, the imminent breakup of HP Group into HP Enterprise and HP Inc. will add to the complexity of servicing both new HP companies.

(NelsonHall will be publishing a market assessment in managed security services in Q4, along with detailed vendor profiles on selected key vendors, including HP)

Although the bill has passed through the Duma, it still needs to be passed by the Federation Council of Russia and signed by President Vladimir Putin before it comes into effect. If put into law, all internet companies operating in Russia will be required to store the personal data of Russian citizens within Russia’s borders by September 2016.

This decision by the Russian parliament to put pressure on internet companies follows recent stories and whistleblowing about NSA spying and data seizures. This is not the first time Russia’s isolationist stance with regard to the internet has affected users within the country; earlier examples include blocking sites that offer news labelled as extremist and requiring internet writers with more than 3,000 daily visitors to register with the government. In the latter case, only those writers whose pieces are hosted within Russia are subject to the law. The new law would require blogs and social media to be hosted within the country; an open internet would not be able to operate without strict enforcement.

The data center outsourcing market in Russia is immature. NelsonHall estimates the data center outsourcing market size in Eastern Europe is less than 20% of that of the U.K. despite having almost 50% more internet users.

Clients looking to remain with their current data center supplier will put pressure on vendors to expand their operations within Russia if the cost of expansion is viable. Vendors slow to take up operations in Russia will lose out to those that can support operations, i.e. the larger IT infrastructure vendors that already have Russian operations/partners, or Russian-centric suppliers.

This is not the first time regulations have forced a number of vendors to expand their data center operations. For example, the German Federal Data Protection Act (FDPA) forced some cloud providers to operate German data centers for storage of some personal information. The subtle difference between the German and Russian attempts to force center location is built on cause: in the case of Germany the law was conceived to help quash data privacy concerns; with Russia it can be seen as data control.
