Last week, NelsonHall attended ANTENNA2020, AntWorks’ yearly analyst retreat. AntWorks has made considerable progress since its last analyst retreat, with growth estimated at ~260% in the three quarters ending January 2020 and headcount reaching 604 at the end of this period.

By geography, AntWorks’ most successful region remains APAC, closely followed by the Americas, and its presence across APAC, the Americas, and EMEA is becoming increasingly balanced. By sector, AntWorks’ client base remains largely centered on BFSI and healthcare, which together account for ~70% of revenues.

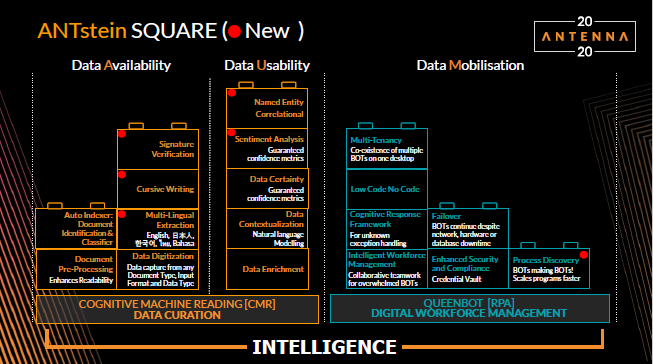

The company’s success continues to be based on its ability to curate unstructured data: all its clients use its Cognitive Machine Reading (CMR) platform, while only 20% use its wider “RPA” functionality. Accordingly, AntWorks continues to strengthen its document curation functionality while also starting to build point solutions and deepening its partnerships and marketing.

Ongoing Strengthening of Document Curation Functionality

The company is aiming to “go deep” rather than “shallow and wide” with its customers, and cites the example of one client that started with a single unstructured document use case and, over the past year, has introduced ten additional unstructured document use cases, resulting in revenues of $2.5m.

Accordingly, the company continues to strengthen its document curation capability; recent CMR enhancements include signature verification, cursive handwriting, language extension, sentiment analysis, and hybrid processing. The signature verification functionality can detect the presence of a signature in a document and verify it against signatures held centrally or on other documents. It is particularly applicable to KYC and fraud avoidance where, for example, a signature on a passport or driving license can be matched with those on submitted applications.

This focus on depth of document curation functionality resonated strongly with the clients speaking at the event. In one case, it was the platform's ability to analyze cursive and printed text together that led a number of competitors, tasked with building a POC that could extract cursive writing, to drop out early.

AntWorks also continues to extend the range of languages in which it can curate documents; currently, 17 languages are supported. The company has changed its learning process to allow quicker training on new languages, with support for Mandarin and Arabic available soon.

Hybrid processing enables multi-format documents containing, for example, text, cursive handwriting, and signatures to be processed in a single step.

Elsewhere, AntWorks has addressed a number of hygiene factors in QueenBOT, enhancing its business continuity management, auto-scaling, and security. Auto-scaling in QueenBOT allows bots to switch between processes if one process requires extra assistance to meet SLAs, effectively allowing bots to be “carpenters in the morning and electricians in the evening” and increasing both SLA adherence and bot utilization.
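AntWorks did not describe how QueenBOT decides when to move bots between processes. As a purely illustrative sketch, assuming a shared pool of bots, a known per-bot throughput for each process, and a time-to-SLA for each queue (all hypothetical inputs, not QueenBOT parameters), one simple policy is to serve the tightest SLAs first and then spread any spare bots across queues that still have work:

```python
from __future__ import annotations
import math
from dataclasses import dataclass

@dataclass
class ProcessQueue:
    name: str
    backlog: int               # items waiting to be processed
    items_per_bot_hour: float  # throughput of one bot on this process
    hours_to_sla: float        # time left before the SLA deadline (must be > 0)

def bots_needed(q: ProcessQueue) -> int:
    """Minimum number of bots that can clear the backlog before the SLA deadline."""
    if q.backlog == 0:
        return 0
    return math.ceil(q.backlog / (q.items_per_bot_hour * q.hours_to_sla))

def allocate(queues: list[ProcessQueue], pool: int) -> dict[str, int]:
    """Share a pool of bots across processes, tightest SLA first.

    Any bots left over are spread round-robin over queues that still have
    work, so that spare capacity is not left idle.
    """
    allocation = {q.name: 0 for q in queues}
    remaining = pool
    for q in sorted(queues, key=lambda q: q.hours_to_sla):
        take = min(bots_needed(q), remaining)
        allocation[q.name] += take
        remaining -= take
    busy = [q.name for q in queues if q.backlog > 0]
    i = 0
    while remaining > 0 and busy:
        allocation[busy[i % len(busy)]] += 1
        remaining -= 1
        i += 1
    return allocation

queues = [
    ProcessQueue("claims", backlog=400, items_per_bot_hour=50, hours_to_sla=2),
    ProcessQueue("invoices", backlog=300, items_per_bot_hour=30, hours_to_sla=5),
]
print(allocate(queues, pool=8))  # {'claims': 5, 'invoices': 3}
```

In this toy example, a pool of eight bots gives claims five bots and invoices three; with only four bots, invoices would wait until the claims queue is under control. A real scheduler would re-run such a policy continuously as backlogs change.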

Another key hygiene factor addressed in the past year has been training material. AntWorks began 2019 with a thin training architecture, with just two FTEs supporting the rapidly expanding company; over the past year, the number of FTEs supporting training has grown to 25, supporting the creation of thousands of hours of training material. AntWorks also launched an internship program, starting in India, which added 43 FTEs in 2019. The ambition this year is to take this program global.

Announcement of Process Discovery, Email Agent & APaaS Offerings

Process discovery is an increasingly important element in intelligent automation, helping to remove the up-front cost involved in scaling automation by identifying and mapping potential use cases.

AntWorks’ process discovery module enables organizations either to record the keystrokes taken by one or more users across multiple transactions or to import keystroke data from third-party process discovery tools. From these recordings, it uses AI to identify the cycles of the process, i.e. the individual transactions, and presents the user with the details of the workflow, which can then be grouped into process steps for ease of use. The process discovery module can also help identify the business rules of the process and assist in the semi-automatic creation of the identified automations (aka AutoBOT).

The process discovery module aims to be easier to use than competing products and, besides identifying transaction steps, can help organizations calculate the RoI of business cases and estimate the proportion of processes that can be automated, though AntWorks is understandably reluctant to underwrite these estimates.

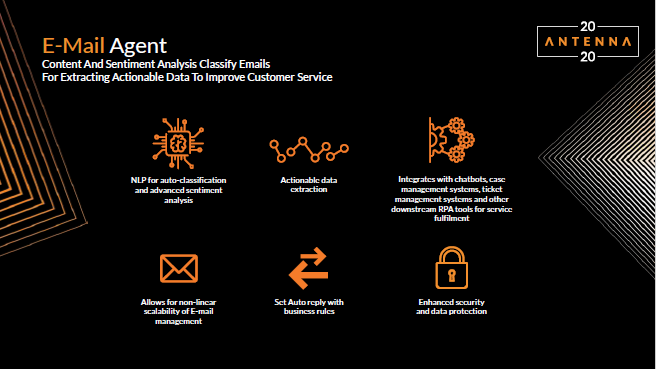

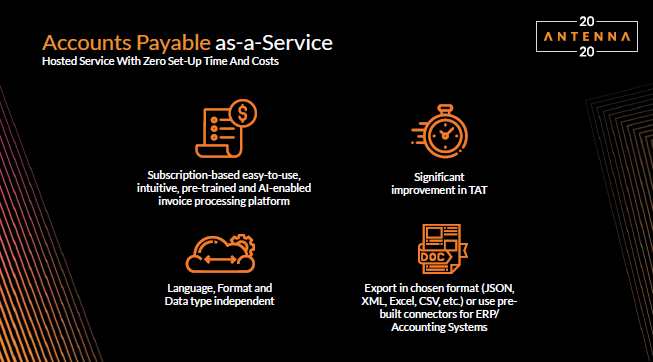

One of the challenges for AntWorks over the coming year is to develop standardized use cases/point solutions based on its technology components, initially in horizontal form, and ultimately verticalized. Two of these just announced are Email Agent and Accounts Payable as-a-Service (APaaS).

Email Agent is a natural progression for AntWorks given its differentiation in curating unstructured documents; it is built on components from the ANTstein full stack and packaged for ease of consumption. It is a point solution designed solely to automate email traffic, and encompasses ML-based email classification, sentiment analysis to support email prioritization, and extraction of actionable data. Email Agent can also respond contextually using templated static or dynamic content. AntWorks estimates that 40-50 emails are sufficient to train each use case, such as HR-related email.

The next step in the development of Email Agent is the production of verticalized solutions by training the model on specific verticals to understand the front office relations organizations (such as those in the travel industry) have with their clients.

APaaS is a point solution consisting of a pre-trained configuration of CMR that extracts relevant information from invoices, which can then be passed via API into accounting systems such as QuickBooks. Through these cloud-based point solutions, AntWorks hopes to open up the SME market.

Focusing on Quality of Partnerships, Not Quantity

Growth in AntWorks’ partner ecosystem (now ~66 partners) has been slower than expected, with only a handful of partners added since last year's ANTENNA event, despite its expansion being a priority. Instead, AntWorks has focused on ensuring that the partnerships it has and signs are deep and constructive. Examples of these deep partnerships include Bizhub and Accenture, two recently added partners that are helping train CMR in Korean and Thai respectively in exchange for timed exclusivity in those countries.

AntWorks is also partnering with SBI Group to penetrate the Southeast Asian marketplace, with SBI assisting AntWorks in adding the ability to carry out data extraction in Japanese. Elsewhere, AntWorks has partnered with Dubai-based SEED Group, chaired by Sheikh Saeed Bin Ahmed Al Maktoum, to access the MENA (Middle East & North Africa) region.

Hugo Walkinshaw was very recently hired to lead the partner ecosystem, and he has his work cut out for him: CEO Ash Mehra targets a ratio of direct sales to partner sales of between 60:40 and 50:50, an ambitious target given the current ratio of 90:10. The aim is to achieve this through the current strategy of working very closely with partners, signing exclusive partnerships where appropriate, and targeting less mature geographies and emerging use cases, such as IoT, where AntWorks can establish a major presence.

In the coming year, expect AntWorks to add more deep partnerships focused on specific geographic presence in less mature markets and targeted verticals, and possibly with technology players to support future plans for running bots on embedded devices, such as those on ships.

Continuing to Ramp Up Marketing Investment

AntWorks was relatively unknown 18 months ago but has made a major investment in marketing since then. AntWorks attended ~50 major events in 2019, and possibly 90 in total including minor events. However, AntWorks’ approach to events is arguably even more important than the number attended, with the company keen to establish a major presence at each event it attends. Rather than being merely another small booth in the crowd, AntWorks opts for larger spaces in which it can run demos to support interest from clients and partners.

This appears to have had the desired impact. Overall, AntWorks states that in the past year it has gone from being invited to RFIs/RFPs in 20% of cases to 80% and that it intends to continue to ramp up its marketing budget.

A series B round of funding, currently underway, is targeted at expanding its marketing investments as well as its platform capabilities. Should AntWorks use this second round of funding as effectively as its first, with SBI Investments two years ago, we expect it to act as a springboard for exponential growth and for deepening these relationships, allowing AntWorks to continue to lead in middle- and back-office intelligent automation use cases with high volumes of complex or hybrid unstructured documents.

‘Reboot work’ was the slogan for UiPath’s recent Forward III partner event, a reference to rethinking the way we work. UiPath’s vision is to elevate employees above repetitive and tedious tasks to a world of creative, fulfilling work. This vision is driven by an automation-first mindset, along with the concept of a bot for everyone and human-automation collaboration.

During the event, which attracted ~3K attendees, UiPath referenced ~50 examples of clients at scale, and pointed to a sales pipeline of more than $100m.

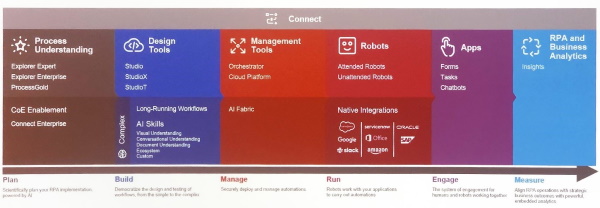

Previously, UiPath’s automation process had three phases: Build, Manage, and Run, using Studio, Orchestrator, and Attended and Unattended bots respectively. Its new products extend this process to six phases: Plan, Build, Manage, Run, Engage, and Measure. In this blog, I look at the six phases of the UiPath automation process and at the key automation products at each stage, including new and enhanced products announced at the event.

Plan phase (with Explorer Enterprise, Explorer Expert, ProcessGold, and Connected Enterprise)

By introducing the product lines Explorer and Connected Enterprise, UiPath aims to allow RPA developers to have a greater understanding of the processes to be automated when planning RPA development.

Explorer consists of three components, Explorer Enterprise, Explorer Expert, and ProcessGold. Explorer provides new process mapping and mining functionality building on two UiPath acquisitions: the previously announced SnapShot, which now comes under the Explorer Enterprise brand, and the newly announced ProcessGold whose existing clients include Porsche and EY. Both products construct visual process maps in data-driven ways; Explorer Enterprise (SnapShot) does this by observing the steps performed by a user for the process, and ProcessGold does this by mining transaction logs from various systems.

Explorer Enterprise performs task mining, with an agent sitting in the background of a user's machine (or a set of users’ machines) for 1-2 weeks. Explorer Enterprise then collects details of the user activities, the effort required, the frequency of each activity, etc.

ProcessGold, on the other hand, monitors transaction logs and, following batch updates and 2 to 3 hours of construction, builds a process flow diagram. These workflow diagrams show the major activities of the process and the time/effort required for each step, which can then be expanded to an individual task level. Additionally, at the activity level, the user has access to activity and edge sliders. The activity slider expands the detail of the activities, and the edge slider expands the number of paths that the logged users take, which can identify users possibly straying from a golden path.
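The activity and edge sliders resemble the frequency filtering commonly applied to directly-follows graphs in process mining. The sketch below is a generic illustration of that technique, not ProcessGold's implementation; the event log format (a case ID mapped to an ordered list of activity names), the sample data, and the function names are all assumptions:

```python
from __future__ import annotations
from collections import Counter

def directly_follows(log: dict[str, list[str]]) -> Counter:
    """Count how often activity a is immediately followed by activity b across all cases."""
    edges: Counter = Counter()
    for trace in log.values():
        for a, b in zip(trace, trace[1:]):
            edges[(a, b)] += 1
    return edges

def edge_slider(edges: Counter, fraction: float) -> set[tuple[str, str]]:
    """Keep only the most frequent edges; fraction=1.0 shows every observed path."""
    keep = max(1, round(len(edges) * fraction))
    return {edge for edge, _ in edges.most_common(keep)}

def cases_off_path(log: dict[str, list[str]], allowed: set[tuple[str, str]]) -> list[str]:
    """Cases that use at least one transition hidden by the slider (possible deviations)."""
    return sorted(
        case for case, trace in log.items()
        if any((a, b) not in allowed for a, b in zip(trace, trace[1:]))
    )

# Hypothetical event log: case ID -> ordered activities taken by the logged user.
log = {
    "c1": ["Receive", "Check", "Approve", "Pay"],
    "c2": ["Receive", "Check", "Approve", "Pay"],
    "c3": ["Receive", "Check", "Approve", "Pay"],
    "c4": ["Receive", "Approve", "Pay"],  # skips the check step
}

golden = edge_slider(directly_follows(log), 0.75)
print(cases_off_path(log, golden))  # ['c4']
```

With the slider at 0.75, only the three most frequent transitions remain, and case c4, which skips the check step, surfaces as straying from the golden path.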

Administrators can then use the data from Explorer Enterprise and/or ProcessGold in Explorer Expert. Explorer Expert allows the admin users to enter deeper organizational insights, and either record a process to build or manually create a golden path workflow. These workflows act as a blueprint to build bots and can be exported to Word documents which can then be used by bot creators.

Connected Enterprise enables an organization to crowdsource ideas for which processes to automate, and aims to simplify the automation and decision-making pipelines for CoEs.

Automation ideas submitted to Connected Enterprise are accompanied by process information from the submitter, as well as from process owners, captured through nine standard questions, such as how rule-based the process is, how likely it is to change, and who its owner is. This information is crunched to produce automation potential and ease of implementation scores that help decide the priority of the automation idea. These ideas are then curated by admins, who can ask the end user for more information, including an upload of ProcessGold files.
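UiPath did not disclose how the nine answers are converted into scores. Purely as an illustration, assuming each answer is rated on a 1-5 scale and that the two scores are simple normalized averages of different question subsets (the scale, the grouping, and every question beyond those named above are hypothetical), the scoring and ranking could look like this:

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class IdeaAnswers:
    # Hypothetical 1-5 ratings, where 5 is most favorable to automation.
    rule_based: int      # how rule-based the process is
    stability: int       # how unlikely the process is to change (5 = very stable)
    digital_inputs: int  # hypothetical: how structured/digital the inputs are
    volume: int          # hypothetical: transaction volume
    few_apps: int        # hypothetical: 5 = touches very few applications

def _percent(parts: list[int]) -> float:
    """Express a set of 1-5 answers as a percentage of the maximum possible score."""
    return 100 * sum(parts) / (5 * len(parts))

def automation_potential(a: IdeaAnswers) -> float:
    return _percent([a.rule_based, a.digital_inputs, a.volume])

def ease_of_implementation(a: IdeaAnswers) -> float:
    return _percent([a.stability, a.few_apps])

def rank_ideas(ideas: dict[str, IdeaAnswers]) -> list[str]:
    """Order ideas by automation potential, breaking ties on ease (both descending)."""
    return sorted(
        ideas,
        key=lambda name: (automation_potential(ideas[name]),
                          ease_of_implementation(ideas[name])),
        reverse=True,
    )
```

Keeping the two scores separate, rather than collapsing them into one number, mirrors the way the text describes them: an idea can be highly automatable yet hard to implement, and a CoE may want to see both before prioritizing it.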

The additions of Explorer and Connected Enterprise allow developers to gain deeper insights into the processes to be automated, and business users to connect with RPA development.

Build phase (with enhanced Studio, plus new StudioX & StudioT)

New components to the build phase include StudioX and StudioT along with a number of enhancements to the existing Studio bot builder.

StudioX is a simplified version of the Studio component targeted at citizen developers and regular business users, pitched at what UiPath referred to as ‘Excel power user level’, to create simpler bots as part of a push for citizen developers and a bot for every person.

StudioX simplifies bot development by removing the need for variables, and reduces the number of tasks that can be selected. Bots produced with StudioX can be opened with Studio; however, the reverse may not necessarily be the case depending on the components used in Studio.

The build-a-bot demo session for StudioX focused on using Excel to copy data in and out of HR and finance systems and extracting and renaming files from an Outlook inbox to a folder. Using StudioX in the build-a-bot session was definitely an improvement over Studio for the creation of these simple bots.

StudioT, which is in beta and set for release in Q1 2020, will act as a version of Studio focused entirely on test automation. NelsonHall’s software testing research, including software testing automation, can be found here.

Further key characteristics of the existing build components include:

- Long-running workflows, which can suspend a process, send a query to a human while freeing up the bot, and resume the bot once the human has provided input

- Cloud, which offers a 1-minute signup for the Community version of the aaS platform and (as of September 2019) had 240k users, up from 167k in June 2019

- Queue triggers which can automatically take action when items are added to the queue

- More advanced debugging with breakpoint and watch panels

- Taxonomy management

- Validation stations.

With the introduction of StudioX, UiPath aims to democratize RPA development to the business users, at least in simple cases; and with long-running workflows, human-bot collaboration no longer requires bots to sit idle, hogging resources while waiting for responses.

Manage phase (with AI Fabric)

The Manage phase now allows users to manage machine learning (ML) models using AI Fabric, an add-on to Studio. It allows users to more easily select ML models, including models created outside of UiPath, and integrate them into a bot. AI Fabric, which was announced in April 2019, has now entered private preview.

Run phase (with enhancements to bots with native integrations)

Improvements to the run components leverage changes across the portfolio of Plan, Build, Manage, Run, Engage, and Measure, in particular for attended bots with Apps (see below). Other new features include:

- Expanding the number of native integrations: UiPath and its partners are building hundreds of connectors to business applications such as Salesforce and Google, providing functionality that includes launching bots from the business application. Newer native applications are available via the UiPath Go! Storefront

- A new tray will feature in the next release.

Engage phase (allowing users direct connection to bots with Apps)

Apps act as a direct connection for users to interact with attended bots through the use of forms, tasks, and chatbots. In Studio, developers can add a form with the new form designer to ask for inputs directly from the user. For example, combined with an OCR confidence score, a bot could trigger a form to be filled in should the confidence score of the OCR be substandard due to a low-quality image.

Bots that encounter a need for human intervention through Apps will automatically suspend, add a task to the centralized inbox, and move on to running another job. When a human has completed the required interaction, the job is flagged to be resumed by a bot.
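The suspend-and-resume pattern described above can be sketched as follows. This is a minimal, hypothetical model, not UiPath's Apps or Orchestrator API: the confidence threshold, the `Job` and `Orchestrator` classes, and the in-memory inbox are all assumptions made for illustration.

```python
from __future__ import annotations
from collections import deque
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.80  # hypothetical cut-off for "substandard" OCR confidence

@dataclass
class Job:
    job_id: str
    ocr_text: str
    confidence: float
    human_input: str | None = None  # filled in via a form when a human intervenes

@dataclass
class Orchestrator:
    pending: deque = field(default_factory=deque)  # jobs ready for any bot
    inbox: dict = field(default_factory=dict)      # suspended jobs awaiting a human
    completed: list = field(default_factory=list)

    def run_bot_once(self) -> None:
        """A bot takes the next job; low-confidence jobs are suspended, not waited on."""
        job = self.pending.popleft()
        if job.human_input is None and job.confidence < CONFIDENCE_THRESHOLD:
            self.inbox[job.job_id] = job  # task added to the central inbox; bot is freed
            return
        value = job.human_input if job.human_input is not None else job.ocr_text
        self.completed.append((job.job_id, value))

    def complete_task(self, job_id: str, value: str) -> None:
        """A human completes the form; the job is flagged for resumption by any bot."""
        job = self.inbox.pop(job_id)
        job.human_input = value
        self.pending.append(job)

orch = Orchestrator()
orch.pending.extend([Job("inv-1", "ACME Ltd", 0.97), Job("inv-2", "AGME Lt?", 0.41)])
orch.run_bot_once()                      # high confidence: completed straight through
orch.run_bot_once()                      # low confidence: parked in the inbox
orch.complete_task("inv-2", "ACME Ltd")  # human corrects the field via a form
orch.run_bot_once()                      # any available bot resumes the job
print(orch.completed)  # [('inv-1', 'ACME Ltd'), ('inv-2', 'ACME Ltd')]
```

The key design choice is that the bot never blocks on the human: a low-confidence job is parked in the inbox and the bot returns straight to the queue, while the completed task re-enters the queue so that any available bot can resume it.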

With the addition of Apps, the development required to capture inputs from business users is minimized, allowing a deeper human-bot connection, reducing development timelines, and helping to enable the goal of ‘a bot for every person’.

Measure phase (now with Insights to measure bot performance)

Insights expands UiPath’s reporting capabilities. Specifically, Insights features customizable dashboarding facilities for process and bot metrics. Insights also features the ability to send pulses, i.e. notifications, to users on metrics, such as if an SLA falls below a threshold. Dashboards can be filtered on processes and bots and can be shared through a URL or as a manually sent or scheduled PDF update.

What does this mean for the future of UiPath?

While UiPath and its competitors have long-standing partnerships with the likes of Celonis for process mining, the addition of native process mining through the acquisitions of SnapShot and ProcessGold, together with the expanded reporting capabilities, positions UiPath as more of an end-to-end RPA provider.

With ProcessGold, NelsonHall believes that UiPath will continue the development of Explorer, which could lead to a nirvana state in which a client deploys ProcessGold, ProcessGold maps the processes and identifies areas that are ideal for automation, and Explorer Expert helps the bot creator to design this process by linking directly with Studio. While NelsonHall has had conversations with niche process mining and automation providers that are focusing on developing bots through a combination of transaction logs and recording users, UiPath is currently the best positioned of the big 3 intelligent automation platform providers to invest in this space.

StudioX is a big step towards enabling citizen developers. During our build-a-bot session, it was clear that the simplified version of the platform is more user-friendly, resulting in the NelsonHall team powering ahead of the instructor at points. However, we were somewhat concerned that while StudioX opens up bot development to a wider range of personae, the slight disconnects between Studio and StudioX could leave users who learn StudioX frustrated when they want to leverage activities currently restricted to Studio (such as error handling). NelsonHall believes that the lines between Studio and StudioX will blur, with StudioX receiving simplified versions of functions currently restricted to Studio, which will enable more bots to be passed between the two personae.

Conclusion

With the announcements at the Forward III event, it is clear that UiPath is enabling organizations to connect the business users directly with automation; be that through citizen developers with StudioX, the Connected Enterprise Hub to forge stronger connections between business users and automation CoEs, Explorer to allow the CoE to have greater understanding of the processes, or Apps to provide direct access to the bot.

This multi-pronged approach to connecting the developer and automation to the business user will certainly reduce frustrations around bot development and reduce the feeling among business users that automation is something thrust upon them rather than part of their organization's journey to a more efficient way of working.

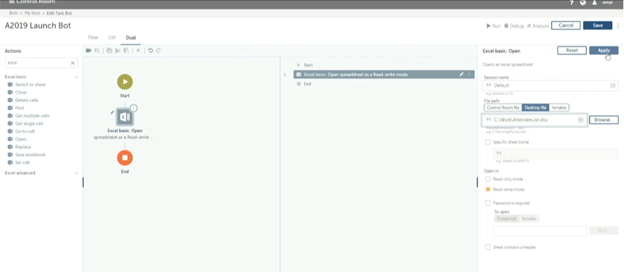

The event, the first under new CMO Riadh Dridi, showcased improvements in the new version of the Automation Anywhere platform around:

- Experience – the most immediate change is in the UI. While prior versions offered code, workflow, and mixed code/workflow views, the new version features a completely revamped workflow view that simplifies the UX and requires little coding

- Cloud – delivery now uses a completely web-based interface, allowing users to sign in and create bots in minutes with no installation required. This speed of development was demonstrated live on stage, with SVP of Products Abhijit Kakhandiki successfully racing to create a simple bot before the arrival of an Uber ordered by CEO Mihir Shukla. The bot used in this example was part of Automation Anywhere’s RPA-aaS offering, hosted on Azure through its partnership with Microsoft. Automation Anywhere was also keen to point out the ability to use the platform on-premise or in a private cloud, as deployed at JP Morgan Chase, the client speaking at the event

- Ecosystem – Automation Anywhere highlighted its strong and growing ecosystem. The Microsoft partnership, for example, has been operating for over a year and has so far featured the ability to embed Microsoft’s AI tools into bots, as well as the above-mentioned Azure hosting. The event featured a demonstration of Automation Anywhere integrated into Office: a user was able to select and use bots from within Excel as a single, joined-up experience

- Intelligent Automation – in addition to leveraging the ecosystem to drag and drop third-party AI components, A2019 integrates the capabilities gained through the Klevops acquisition earlier this year to improve assisted automation, providing greater bot-human collaboration across teams and workflows

The majority of these enhancements are already analyzed in NelsonHall’s profile of Automation Anywhere’s capabilities as part of the Intelligent Automation Platform NEAT assessment.

Using the above enhancements, Automation Anywhere estimates that whereas previously clients required 3 to 6 months to POC, and a further 6 to 24 months to scale, it now takes 1-4 months to POC and 4-12 months to scale.

Absent from the event were enhancements to bot governance, which becomes vitally important as access to bot building widens, and to the bot store, where curation could still be an issue.

While the messaging of the event was ‘Anything Else is Legacy’, there were some points at which the release looks unfinished: the partnership with Office currently extends only to Excel, with the rest of the suite to follow, and the Community version of Automation Anywhere, which is how a large proportion of users dip their toes in the water of automation, is set to be updated to match A2019 later in Q4 2019. Likewise, while the improved workflow view is much cleaner and easier to use than competitors’, leading to quicker bot development, competitor platforms more easily handle complex, branching operations. Therefore, while A2019 can be ideal for organizations looking to have citizen developers build simple bots, organizations looking to automate more complex workflows should include the competing platforms in their shortlists.

NelsonHall's profile on the Automation Anywhere platform can be found here.

The recent NEAT evaluation of Intelligent Automation Platforms can be found here.


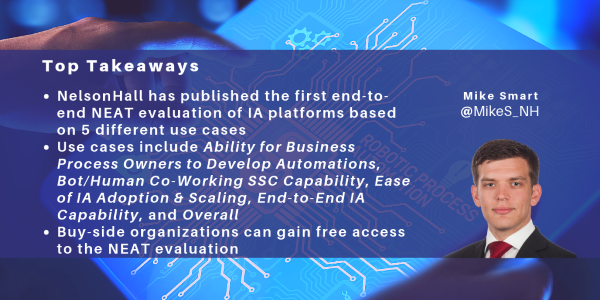

NelsonHall has just launched an industry-first evaluation of Intelligent Automation (IA) platforms, including platforms from AntWorks, Automation Anywhere, Blue Prism, Datamatics, IPsoft, Jacada, Kofax, Kryon, Redwood, Softomotive, and UiPath.

As RPA and artificial intelligence converge to address more sophisticated use cases, we at NelsonHall feel it is now time for an evaluation of IA platforms on an end-to-end basis and based on the use cases to which IA platforms will typically be applied. Accordingly, NelsonHall has evaluated IA platforms against five use cases:

- Ability for Business Process Owners to Develop Automations

- Bot/Human Co-Working SSC Capability

- Ease of IA Adoption & Scaling

- End-to-End IA Capability

- Overall.

Ability for Business Process Owners to Develop Automations – as organizations move to a ‘bot for every worker’, platforms must support the business process owners in developing automations rather than select individuals as part of an automation CoE. Capabilities that support business process owners in developing an automation include a strong bot development canvas, a well-populated app/bot store, and process discovery functionality, all in support of speed of implementation.

Bot/Human Co-Working SSC Capability – in addition to traditional unassisted back-office automation and assisted individual automations, bots are increasingly required to provide end-to-end support for large-scale SSC and contact center automation. This increasingly requires bot/human rather than human/bot co-working, with the bot taking the lead in processing SSC transactions, queries and requests. The key capabilities here include conversational intelligence, ability to handle unidentified exceptions, and seamless integration of RPA and machine learning.

End-to-End IA Capability – the ability for a platform to support an automation spanning an end-to-end process, leveraging ML and artificial intelligence, either through native technologies or through partnerships. While many IA implementations remain highly RPA-centric, it is critical for organizations to begin to leverage a wider range of IA technologies if they are to address unstructured document processing and begin to incorporate self-learning in support of exception handling. Key capabilities here include computer vision/NLP, ability to handle unidentified exceptions, and seamless integration of RPA and machine learning in support of accurate document/data capture, reduced error rates, and improved transparency & auditability of operations.

Ease of IA Adoption & Scaling – the ability for organizations to roll out automations at scale. Key criteria here include the ability to leverage the cloud delivery of the IA platform and the strength of the bot orchestration/management platform.

Overall – a composite perspective of the strength of the IA platforms across capabilities, delivery options, and the benefits provided to clients.

No single platform is the most appropriate across all these use cases, and the pattern of capability varies considerably by use case. This area is also poorly understood, even by the vendors operating in this market, with companies that NelsonHall has identified as leaders unknown to some of their peers. However, the NelsonHall Evaluation & Assessment Tool (NEAT) for IA platforms enables organizations to see the relative strengths and capabilities of platform vendors for all the use cases described above in a series of quadrant charts.

If you are a buy-side organization, you can view these charts, and even generate your own charts based on criteria that are important to you, FREE-OF-CHARGE at NelsonHall Intelligent Automation Platform evaluation.

The full project, including comprehensive profiles of each vendor and platform, is also available from NelsonHall by contacting either Guy Saunders or Simon Rodd.


Following on from the Blue Prism World Conference in London (see separate blog), NelsonHall recently attended the Blue Prism World conference in Orlando. Building on the major theme of positioning the ‘Connected Entrepreneur Enterprise’, the vendor provided further details on how this links to the ‘democratization’ of RPA across organizations.

In the past, Blue Prism has seen automation projects stall when led from the bottom up (due to an inability to scale and to apply strong governance or IT best practices) or from the top down (which has issues with buy-in and with speed of deployment). Its Connected Entrepreneur Enterprise story aims to overcome these issues by decentralizing automation. So how is Blue Prism enabling this?

Connected Entrepreneur Enterprise

The Connected RPA components, namely Blue Prism’s connected-RPA platform, Blue Prism Digital Exchange, Blue Prism Skills, and Blue Prism Communities, all aim to facilitate this. In particular, Blue Prism Communities acts as a knowledge-sharing platform through which Blue Prism envisions that clients will access forums for help in building digital workers (software robots), share best practices, and (with its new connection into Stack Overflow) collaborate on digital worker development.

Blue Prism Skills lightens the knowledge required for users to begin digital worker development, with the ability to drag and drop AI components, such as any number of computer vision AI solutions, into processes.

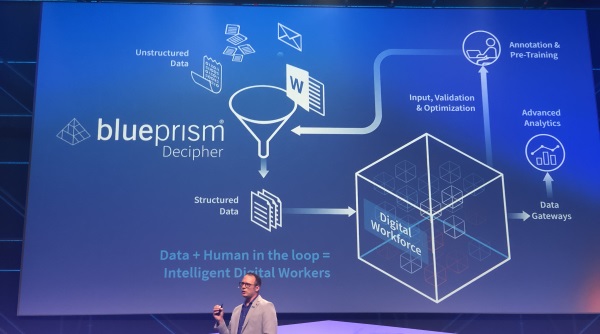

Decipher, for document processing, was developed by Blue Prism’s R&D lab and features ML that can be integrated into digital workers, which in turn can have skills such as language detection from Google dropped into the process. The ability to drag and drop these skills furthers the aim of allowing the business users who know the process best to quickly and easily build AI into digital workers. Additionally, Decipher introduces human-in-the-loop capability into Blue Prism to assist in cases where the OCR lacks confidence in its result. The beta version of Decipher is set to launch this summer with a focus on invoice processing.

Decipher will also feature in the notification area of the new cloud-based, mobile-enabled dashboard, which, in addition to providing SLA alerts, flags when queues for Decipher’s human-in-the-loop feature are backing up.

Client example

An example of Blue Prism being used to democratize RPA comes from marquee client EY. EY, Blue Prism’s fifth largest client, spoke during the conference about its automation journey. During the 4.5-year engagement, EY has deployed 2k digital workers, with 1.3k performing client work and 700 working internally on 500 processes. Through the deployment of the digital workforce, EY has saved 2 million man-hours.

In democratizing RPA, EY federated automation to the business while using a centralized governance model and IT pipeline. One benefit of the IT pipeline was that process automation did not become a stop-start development.

When surveying its employees, EY found that the employees who had been involved in the development of RPA had the highest engagement.

Likewise, market surveys conducted by Blue Prism with a partner found that in 87% of cases in the U.S., employees are willing to reskill to work alongside a digital workforce.

Summary

There is further work to be done in democratizing RPA as part of this Connected Entrepreneur Enterprise: Blue Prism is currently looking into upgrading the underlying architecture and is surveying its partners regarding UI changes, and it is moving aspects of the platform to the cloud, starting with the dashboarding capability. Also, while Blue Prism has university partnerships, these are often not heavily marketed and compete with other RPA vendors in the space that offer the likes of community editions to encourage learning.

AntWorks’ strategy is based on developing full-stack intelligent automation, built for modular consumption, and the company’s focus in 2019 is on:

- BOT productivity, defined as data harvesting plus intelligent RPA

- Verticalization.

In particular, AntWorks is trying to dispel the idea that Intelligent Automation needs to consist of three separate products from three separate vendors, covering machine vision/OCR, RPA, and AI in the form of ML/NLP, and to show that AntWorks can offer a single, though modular, automation offering that spans these areas end-to-end.

Overall, AntWorks positions Intelligent Automation 2.0 as consisting of:

- Multi-format data ingestion, incorporating both image and text-based object detection and pattern recognition

- Intelligent data association and contextualization, incorporating data reinforcement, natural language modelling using tokenization, and data classification. One advantage claimed for fractal analysis is that it facilitates the development of context from images such as company logos and not just from textual analysis and enables automatic recognition of differing document types within a single batch of input sheets

- Smarter RPA, incorporating low code/no code, self-healing, intelligent exception handling, and dynamic digital workforce management.

Cognitive Machine Reading (CMR) Remains Key to Major Deals

AntWorks’ latest release, ANTstein SQUARE, is aimed at delivering BOT productivity by combining intelligent data harvesting with cognitive responsiveness and intelligent real-time digital workforce management.

ANTstein data harvesting covers:

- Machine vision, including (to name a modest subset) fractal machine learning, fractal image classifier, format converter, knowledge mapper, document classifier, business rules engine, and workflow

- Pre-processing image inspector, where AntWorks demonstrated the ability of its pre-processor to sharpen text and images, invert white text on a black background, remove grey shapes, and adjust skewed and rotated inputs, typically giving an 8%-12% uplift

- Natural language modelling.

Clearly, one of the major issues in the industry over the last few years has been the difficulty organizations have experienced in introducing OCR to supplement their initial RPA implementations in handling unstructured data.

Here, AntWorks has for some time been positioning its “cognitive machine reading” technology strongly against traditional OCR (and traditional OCR plus neural network-based machine learning), stressing the “superior” capabilities of its pattern-based Content-based Object Retrieval (CBOR) to “lift and associate all the content” and achieve high accuracy of captured content, higher processing speeds, and the ability to train in production. AntWorks also takes a wide definition of unstructured data, covering not just typed text but also, for example, handwritten documents, signatures, and notary stamps.

AntWorks’ Cognitive Machine Reading encompasses multi-format data ingestion; fractal network-driven learning for natural language understanding, using combinations of supervised learning, deep learning, and adaptive learning; and accelerators, e.g., for inputting data into SAP.

Accuracy has so far typically been found to be around 75% for enterprise “back-office” processes, but the level of accuracy depends on the nature of the data, with fractal technology most appropriate where past data strongly correlates with future data and data variances are relatively modest. AntWorks regards fractal techniques as totally inappropriate in use cases where the data has a high variance, e.g., crack detection in aircraft or analysis of mining data. In such cases, where access to neural networks is required, AntWorks plans to open up APIs to, for example, Amazon and AWS.

Several examples of the use of AntWorks’ CMR were provided. In one of these, AntWorks’ CMR is used in support of sanction screening within trade finance for an Australian bank, to identify the names of the parties involved and look for banned entities. The bank estimates that 89% of entities could be identified with a high degree of confidence using CMR, with 11% having to be handled manually. This activity was previously handled by 50 FTEs.
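The confidence-based split the bank describes (automatic decisions for most entities, manual handling for the remainder) can be sketched in Python. This is not how CMR works internally: as a plainly swapped-in stand-in, the sketch scores party names against a banned list using `difflib.SequenceMatcher` string similarity, and the `screen_party` function and both thresholds are hypothetical.

```python
from difflib import SequenceMatcher
from typing import List


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio in [0, 1] computed by difflib."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def screen_party(name: str, banned: List[str],
                 hit: float = 0.9, clear: float = 0.6) -> str:
    """Classify one party name as 'hit', 'clear', or 'manual'.

    Scores at or above `hit` count as a confident match to a banned entity,
    scores below `clear` as a confident non-match; anything in between is
    routed to a human analyst. Both thresholds are illustrative assumptions.
    """
    best = max((similarity(name, b) for b in banned), default=0.0)
    if best >= hit:
        return "hit"
    if best < clear:
        return "clear"
    return "manual"


if __name__ == "__main__":
    banned = ["Acme Shipping Ltd"]
    print(screen_party("ACME SHIPPING LTD", banned))  # hit (identical after lowercasing)
    print(screen_party("Acme Shipping", banned))      # manual (ratio is about 0.87)
```

Widening the gap between the two thresholds sends more names to manual review; narrowing it automates more decisions at the cost of more potential misclassifications.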

Fractal analysis also makes its own contribution to one of ANTstein’s USPs: ease of use. The business user uses “document designer” to train ANTstein on a batch of documents for each document type, but fractal analysis requires fewer training cases than neural networks, and its datasets also have inherently lower memory requirements since the system uses data localization and does not extract unnecessary material.

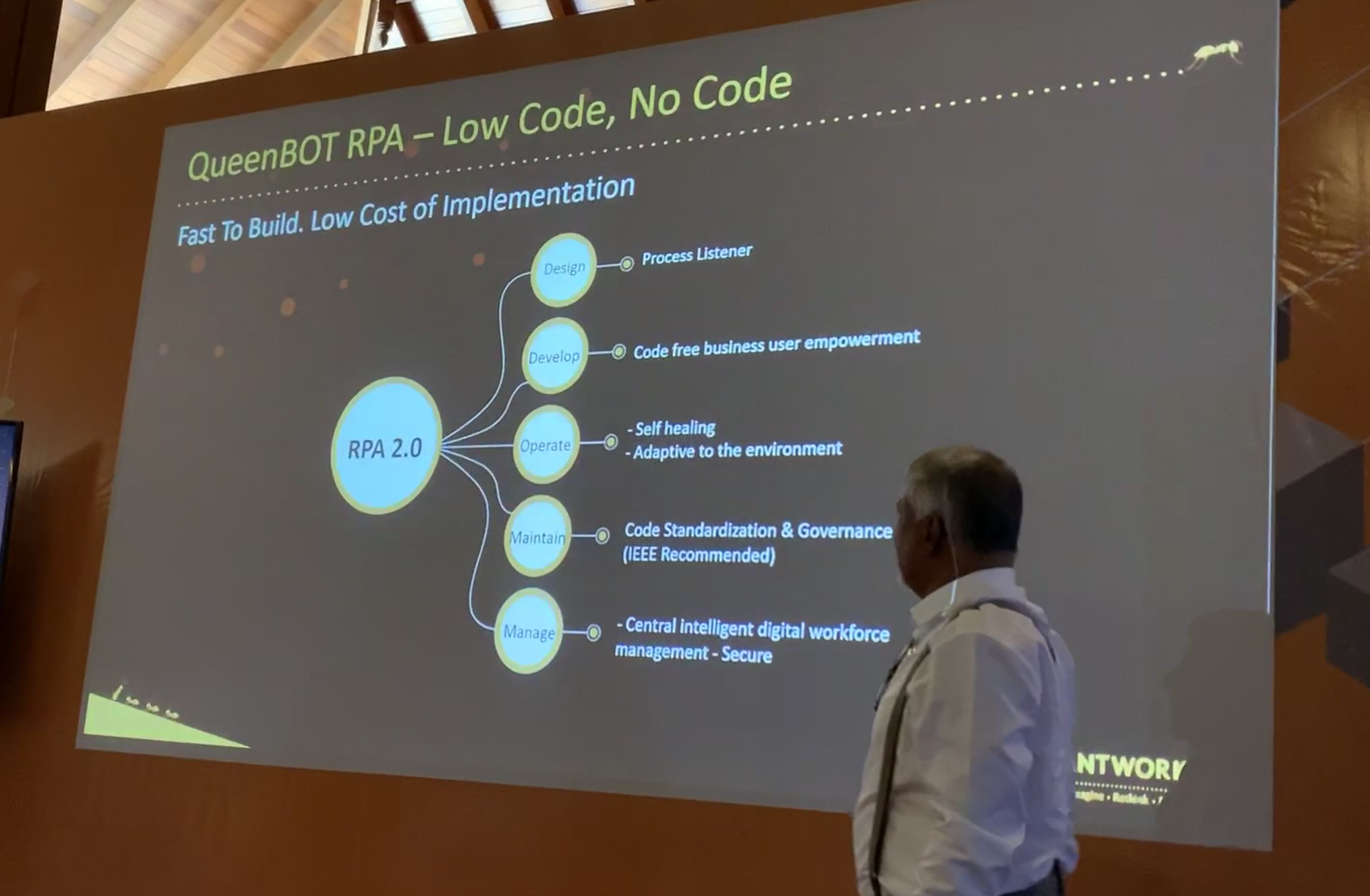

RPA 2.0 “QueenBOTs” Offer “Bot Productivity” through Cognitive Responsiveness, Intelligent Digital Automation, and Multi-Tenancy

AntWorks is positioning to compete against the established RPA vendors with a combination of intelligent data harvesting, cognitive bots, and intelligent real-time digital workforce management. In particular, AntWorks is looking to differentiate at each stage of the RPA lifecycle, encompassing:

- Design, process listener and discoverer

- Development, aiming to move towards low code business user empowerment

- Operation, including self-learning and self-healing in terms of exception handling to become more adaptive to the environment

- Maintenance, incorporating code standardization into pre-built components

- Management, based on “central intelligent digital workforce management”.

Beyond CMR, much of this functionality is delivered by QueenBOTs. Once the data has been harvested, it is orchestrated by the QueenBOT, with each QueenBOT able to orchestrate up to 50 individual RPA bots, referred to as AntBOTs.
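The one-to-many relationship between a QueenBOT and its AntBOTs can be pictured with a minimal Python sketch. The round-robin dispatch policy, the `QueenBot` class, and its methods are hypothetical illustrations; only the limit of 50 AntBOTs per QueenBOT comes from AntWorks, and ANTstein’s actual orchestration logic is not described here.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List

MAX_ANTBOTS_PER_QUEENBOT = 50  # limit cited by AntWorks


@dataclass
class QueenBot:
    """Hypothetical orchestrator that dispatches harvested work items to AntBOTs."""
    antbot_ids: List[str]
    queue: Deque[str] = field(default_factory=deque)

    def __post_init__(self) -> None:
        if not self.antbot_ids:
            raise ValueError("a QueenBOT needs at least one AntBOT")
        if len(self.antbot_ids) > MAX_ANTBOTS_PER_QUEENBOT:
            raise ValueError(f"a QueenBOT orchestrates at most {MAX_ANTBOTS_PER_QUEENBOT} AntBOTs")

    def submit(self, item: str) -> None:
        """Queue one harvested work item (e.g. an extracted document record)."""
        self.queue.append(item)

    def dispatch(self) -> Dict[str, List[str]]:
        """Drain the queue, assigning items to AntBOTs in round-robin order."""
        assignments: Dict[str, List[str]] = {bot: [] for bot in self.antbot_ids}
        i = 0
        while self.queue:
            bot = self.antbot_ids[i % len(self.antbot_ids)]
            assignments[bot].append(self.queue.popleft())
            i += 1
        return assignments


if __name__ == "__main__":
    queen = QueenBot(["ant-1", "ant-2"])
    for doc in ["doc-a", "doc-b", "doc-c"]:
        queen.submit(doc)
    print(queen.dispatch())  # {'ant-1': ['doc-a', 'doc-c'], 'ant-2': ['doc-b']}
```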

The QueenBOT incorporates:

- Cognitive responsiveness

- Intelligent digital automation

- Multi-tenancy.

“Cognitive responsiveness” is the ability of the software to adjust automatically to unknown exceptions in the bot environment, and AntWorks demonstrated the ability of ANTstein SQUARE to adjust in real time to situations where non-critical data is missing or the portal layout has changed. In addition, where a bot does fail, ANTstein aims to support diagnosis on a more granular basis by logging each intermediate step in a process and providing a screenshot to show where the process failed.
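The granular failure diagnosis described above, logging every intermediate step and capturing a screenshot where the process breaks, can be sketched in Python. The `run_process` function and the injected `capture_screenshot` callable are hypothetical; the callable stands in for whatever screen-capture facility an automation platform actually provides.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_process(steps: List[Step],
                capture_screenshot: Callable[[str], str]) -> List[str]:
    """Run steps in order, logging each one; on failure, record a screenshot and stop."""
    log: List[str] = []
    for name, action in steps:
        log.append(f"START {name}")
        try:
            action()
        except Exception as exc:  # any step error halts the run for diagnosis
            shot = capture_screenshot(name)
            log.append(f"FAIL {name}: {exc} (screenshot: {shot})")
            break
        log.append(f"DONE {name}")
    return log


if __name__ == "__main__":
    def open_portal() -> None:
        pass

    def submit_form() -> None:
        raise RuntimeError("submit button not found")

    for line in run_process([("open_portal", open_portal), ("submit_form", submit_form)],
                            capture_screenshot=lambda step: f"{step}.png"):
        print(line)
```

Because every step is logged before and after it runs, the last `START` entry without a matching `DONE` pinpoints the failing step even if the screenshot capture itself were unavailable.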

AntWorks is aiming to put use case development into the hands of the business user rather than data scientists. For example, ANTstein does not require the data science expertise typically needed for model selection with neural network-based technologies, as it performs its own model selection.

AntWorks also stressed ANTstein’s ease of use through its pre-built components and its recorder facility, which generates its own code. One client speaking at the event aims to handle simple use cases in-house and outsource only the building of complex use cases.

AntWorks also makes a major play on reducing the cost of infrastructure compared to traditional RPA implementations. In particular, ANTstein addresses the issue of servers or desktops being allocated to, or controlled by, an individual bot by scheduling bots dynamically based on SLAs rather than timeslots, and by enabling multi-tenancy, so that a user can use a desktop while it is simultaneously running an AntBOT, or several AntBOTs can run simultaneously on the same desktop or server.
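Scheduling against SLAs rather than fixed timeslots, combined with multi-tenancy, can be sketched as an earliest-deadline-first assignment in Python. The `Job` type, the `schedule` function, and the per-machine slot counts (how many bots a desktop or server may run at once) are hypothetical; AntWorks has not published its scheduling algorithm, and earliest-deadline-first is a swapped-in standard technique.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Job:
    name: str
    sla_deadline: float  # minutes until the SLA is breached; smaller = more urgent


def schedule(jobs: List[Job], machine_slots: Dict[str, int]) -> List[Tuple[str, str]]:
    """Assign jobs to machines in earliest-deadline-first order.

    machine_slots maps each desktop or server to the number of bots it may run
    concurrently (multi-tenancy). Jobs that do not fit wait for the next cycle.
    """
    # The index i breaks ties between equal deadlines in submission order.
    heap = [(job.sla_deadline, i, job.name) for i, job in enumerate(jobs)]
    heapq.heapify(heap)
    free = dict(machine_slots)
    plan: List[Tuple[str, str]] = []
    while heap and any(slots > 0 for slots in free.values()):
        _, _, name = heapq.heappop(heap)
        machine = max(free, key=lambda m: free[m])  # machine with most free slots
        free[machine] -= 1
        plan.append((name, machine))
    return plan


if __name__ == "__main__":
    jobs = [Job("month_end_report", 120), Job("payment_posting", 15), Job("kyc_check", 30)]
    print(schedule(jobs, {"server-1": 2, "desktop-7": 1}))
    # [('payment_posting', 'server-1'), ('kyc_check', 'server-1'), ('month_end_report', 'desktop-7')]
```

Under a timeslot model, each machine would be reserved for one bot at a fixed time; here the most urgent work takes whatever capacity is free, and a single server can host several bots at once.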

Building Out Vertical Point Solutions

A number of the AntWorks founders came from a BPO background, which gave them a focus on automating middle- and back-office processes and the recognition that bringing domain and technology together is critical to process transformation and to building a significant business case.

Accordingly, verticalization is a major theme for AntWorks in 2019. In addition to support for a number of horizontal solutions, AntWorks will be focusing on building point solutions in nine verticals in 2019, namely:

- Banking: trade finance, retail banking account maintenance, and anti-money laundering

- Mortgage (likely to be the first area targeted): new application processing, title search, and legal description

- Insurance: new account set up, policy maintenance, claims handling, and KYC

- Healthcare & life sciences: BOB reader, PRM chat, payment posting, and eligibility

- Transportation & logistics: examination evaluation

- Retail & CPG: no currently defined point solutions

- Telecom: customer account maintenance

- Media & entertainment: no currently defined point solutions

- Technology & consulting: no currently defined point solutions.

The aim is to build point solutions (initially in conjunction with clients and partners) that will be 80% ready for consumption with a further 20% of effort required to train the bot/point solution on the individual company’s data.

Building a Partner Ecosystem for RPA 2.0

The company claims to have missed the RPA 1.0 bus by design (it commenced development of “full-stack” ANTstein in 2017) and is now trying to get across the message that the next generation of Intelligent Automation requires more than OCR combined with RPA to automate industry-specific processes that are heavy in unstructured data.

The company is not targeting companies with small numbers of bot implementations but is ideally seeking dozens of clients, each with the potential to build into $10m relationships. Accordingly, the bulk of the company’s revenues currently comes from, and is likely to continue to come from, CMR-centric sales with major enterprises, either direct or through relationships with major consultancies.

Nonetheless, AntWorks is essentially targeting three market segments:

- Major enterprises with CMR-centric deals

- RPA 2.0, through channels

- Point solutions.

In the case of major enterprises, CMR is typically pulling AntWorks’ RPA products through to support the same use cases.

AntWorks is trying to dissociate itself from RPA 1.0, strongly positioning against the competition on the basis of “full stack”, and is slightly schizophrenic about whether to utilize a partner ecosystem which is already tied to the mainstream RPA products. Nonetheless, the company is in the early stages of building a partner ecosystem for its RPA product based on:

- Referral partners

- Authorized resellers

- Managed Services Program, where partners such as EXL build their own solutions incorporating AntWorks

- Technology Alliance partners

- Authorized training partners

- University partners, to develop a critical mass of entry-level automation personnel with experience in AntWorks and Intelligent Automation in general.

Great Unstructured Data Accuracy but Needs to Continue to Enhance Ease of Use

A number of AntWorks’ clients presented at the event and it is clear that they perceive ANTstein to deliver superior capture and classification of unstructured data. In particular, clients liked the product’s:

- Superior natural language-based classification using limited datasets

- Ability to use codeless recorders

- Ability to deliver greater than 70% accuracy at PoC stage

However, despite some of the product’s advantages in terms of ease of use, clients would like further fine-tuning of the product in areas such as:

- The CMR UI/UX is not particularly user-friendly: the very long list of options is hard to understand for business users, who require a shorter, more structured UI

- Improved ease of workflow management including ability to connect to popular workflows.

So, overall, while users should not yet consider mass replacement of their existing RPAs, particularly where these are being used for simple rule-based process joins and data movement, ANTstein SQUARE is well worth evaluation by major organizations that have high-volume industry-specific or back-office processes involving multiple types of unstructured documents in text or handwritten form and where achieving accuracy of 75%+ will have a major impact on business outcomes. Here, and in the industry solutions being developed by AntWorks, it probably makes sense to use the full-stack of ANTstein utilizing both CMR and RPA functionality. In addition, CMR could be used in standalone form to facilitate extending an existing RPA-enabled process to handle large volumes of unstructured text.

Secondly, major organizations that have an outstanding major RPA roll-out to conduct at scale, are becoming frustrated at their level of bot productivity, and are prepared to introduce a new RPA technology should consider evaluating AntWorks’ QueenBOT functionality.

The Challenge of Differentiating from RPA 1.0

If it is to take advantage of its current functionality, AntWorks urgently needs to differentiate its offerings from those of the established RPA software vendors and its founders are clearly unhappy with the company’s past positioning on the majority of analyst quadrants. The company aimed to achieve a turnaround of the analyst mindset by holding a relatively intimate event with a high level of interaction in the setting of the Maldives. No complaints there!

The company is also using “shapes” rather than numbers to designate succeeding versions of its software. Quirky and could be incomprehensible downstream.

However, these marketing actions are probably insufficient in themselves. To complement the merits of its software, the company needs to improve its messaging to its prospects and channel partners in a number of ways:

- Firstly, the company’s tagline “reimagining, rethink, recreate” shows the founders’ backgrounds and is arguably more suitable for a services company than for a product company

- Secondly, establishing an association with Intelligent Automation 2.0 and RPA 2.0 is probably too incremental to attract serious attention.

Here the company needs to think big and establish a new paradigm to signal a significant move beyond, and differentiation from, traditional RPA.

IBM recently announced the first ‘commercial-ready’ quantum computer, the 20-qubit Q System One. The date is certainly worth recording in the annals of computing history. But, in much the same way that mainframes, micros, and PCs all began with an ‘iron launch’ and then required a long pragmatic use case maturity curve, so too will this initial offering from IBM be the first step on a long evolution path. With so much conjecture and contemplation happening in the industry surrounding this announcement, let’s unpack what IBM’s announcement means – and how organizations should be reacting.

First, although Q System One is being billed as commercial-ready, that designation means that the product is ready for usage on a traditional cloud computing basis, not necessarily that it is ready to contribute meaningfully to solving business problems (although the device will certainly mature quickly in both capability and speed). What Q System One does offer is a keystone for the industry to begin working with quantum technology in much the same way that other cloud-based supercomputing resources are available on a utility basis, and a testbed for beginning to explore and develop quantum code and quantum computing strategies. As such, while Q System One may not outperform traditional cloud computing resources today, its successors will likely do so in short order, perhaps as soon as 2020.

As I noted in my blockchain predictions blog for 2019, quantum computing has long been the shadow over blockchain adoption, owing to the concern that quantum computing will make blockchain’s security aspect obsolete. That watershed lies years in our future, if indeed at all, and it is important to note that quantum computing can as easily be tasked to enhance cryptographic strength as it can to break it down. As a result, expect that the impact of quantum computing on blockchain will net to a zero-sum game, with quantum capabilities powering ever-more evolved cryptographic standards in much the same way that the cybersecurity arms race has proceeded to date.

With this in mind, what should organizations have on their quantum readiness roadmaps? The short answer is that quantum readiness is more the beginning of many long-term projects rather than the consummation of any short-term ones, so quantum is more a component of IT strategy than near-term tactical change. Here are five recommendations I’m making for beginning to ready your organization for quantum computing during 2019.

Migrate to SHA-3 – and build an agile cybersecurity faculty

There is no finish line for cybersecurity, especially with quantum capabilities on the horizon, but when I speak with enterprise organizations on the subject, I suggest that a combination of NIST and RSA/ECC technologies approximates something that will be quantum-proof for the foreseeable future. Migration off SHA-2 is a strong prescription regardless, given the flaws that SHA-2 shared with its predecessor. But perhaps more important than the construction of a cryptographic standard to meet quantum’s capabilities is the design of an agile cybersecurity faculty that can shorten the time needed to transition from one standard to the next. Quantum computing will produce overnight gains in both security and exposure as the technology evolves; being ready to take swift counteraction will be key in the next decade of information technology.
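One way to build this kind of agility into the hashing layer is to record which algorithm produced each stored digest, so that SHA-2 values remain verifiable while new values use SHA-3 (standardized by NIST in FIPS 202). The Python sketch below uses the standard `hashlib` and `hmac` modules; the `algorithm:hexdigest` storage format and the `tagged_digest`/`verify` helpers are hypothetical conventions, and the sketch covers integrity hashing only, not key exchange or signatures.

```python
import hashlib
import hmac
from typing import Optional, Tuple

CURRENT_ALGORITHM = "sha3_256"                # policy: change here to rotate standards
ACCEPTED_ALGORITHMS = {"sha256", "sha3_256"}  # legacy SHA-2 digests still verify


def tagged_digest(data: bytes, algorithm: str = CURRENT_ALGORITHM) -> str:
    """Return 'algorithm:hexdigest' so each stored hash records how it was made."""
    return f"{algorithm}:{hashlib.new(algorithm, data).hexdigest()}"


def verify(data: bytes, stored: str) -> Tuple[bool, Optional[str]]:
    """Check data against a stored tagged digest.

    Returns (matches, upgraded). `upgraded` is a fresh digest under the current
    algorithm when the stored one used an older algorithm, so the caller can
    re-save it and migrate gradually; otherwise it is None.
    """
    algorithm, _, expected = stored.partition(":")
    if algorithm not in ACCEPTED_ALGORITHMS:
        return False, None
    actual = hashlib.new(algorithm, data).hexdigest()
    if not hmac.compare_digest(actual, expected):  # constant-time comparison
        return False, None
    upgraded = None if algorithm == CURRENT_ALGORITHM else tagged_digest(data)
    return True, upgraded


if __name__ == "__main__":
    legacy = tagged_digest(b"contract-v1", "sha256")
    ok, upgraded = verify(b"contract-v1", legacy)
    print(ok, upgraded.split(":")[0] if upgraded else None)  # True sha3_256
```

The design choice that matters for agility is that moving to the next standard means editing `CURRENT_ALGORITHM` and `ACCEPTED_ALGORITHMS` rather than rewriting every caller.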

Begin asking entirely new questions in a Quantum CoE

Traditional computing technology has taught us clear phase lines of the possible and impossible with respect to solving business problems. Quantum, over the course of the next decade, will completely redraw those lines, with more capability coming online with each passing year (and, eventually, quarter). Tasks like modeling new supply chain algorithms, new modes of product delivery, even new projections of complex M&A activity in a sector over a long forecast span will become normal requests by 2030.

Make sure data hygiene and MDM protocols are quantum-ready

Already, there have been multiple technologies – Big Data, automation, and blockchain are just three – that have strongly suggested the need to ensure that organizations are running on clean, reliable data.

As business task flow accelerates, and more cognitive automation and smart contracts touch and interact with information as the first actor in the process chain, it is increasingly vital to ensure that these technologies are handling quality data. Quantum may be the last such opportunity to bring the car into the pit for adjustments before racing at full speed commences in sectors like retail, telecom, technology, and logistics. This is a to-do that benefits a broad array of technological deployment projects, so while it may not be relevant for quantum computing until the next decade begins, the benefits will begin to accrue from these efforts today.

Aim at a converged point involving data, analytics, automation & AI

Quantum computing is often discussed in the context of moonshot computing problems – and, indeed, the technology is currently best deployed against problems outside the realm of capability for legacy iron. But quantum will also power the move from offline or nearline processing to ‘now’ processing, so tasks that involve putting insights from Big Data environments to work in real-time will also fall within reach over the course of the next decade. What you may find from a combination of this action and the two prior is that some of the questions and projects you had slated for a quantum computing environment may actually be addressable today through a combination of cognitive technologies.

Reach out to partners, suppliers & customers to build a holistic quantum perspective

Legacy enterprise computing grew up as a ‘four-walls’ concept in part because of the complexity of tackling large, complex business optimization problems that involved moving parts outside the organization. Quantum does not automatically erase those boundary lines from an integration perspective, but the next decade will see more than enough computing power come online to optimize long, global supply chain performance challenges and cross-border regulatory and financing networks. Again, efforts in this area can also benefit organizational initiatives today; projects in IoT and blockchain, in particular, can achieve greater benefits when solutions are designed with partners, suppliers, regulators, and financiers involved up front.

Conclusion

Quantum computing is not going to change the landscape of enterprise IT tomorrow, or next month, or even next year. But when it does effect that change, organizations should expect its new capabilities to be game-changers – especially for those firms that planned well in advance to take advantage of quantum computing’s immense power.

This short checklist of quantum-readiness tasks can provide a framework for pre-quantum technology projects, too – making them an ideal roster of 2019 ‘to-dos’ for enterprise organizations.

Automation marketplaces are swiftly becoming a standard offering for leading RPA and intelligent automation providers, and with good cause. Automation is coming of age in the era of the ‘appified’ technology space, one in which tech buyers increasingly expect best practices and new capabilities to be offered on an as-needed, storefront basis. Within the space of a single year, such marketplaces are well on their way to expected-offering status for top providers. Blue Prism’s entry into this space, the Blue Prism Digital Exchange (Blue Prism DX), is a compelling environment built on the principles of “value delivery and organization and curation of automation content by skill”.

The first of these – value delivery – is aligned with Blue Prism’s overall message of ‘democratization of technology,’ a strategy that prioritizes access to automation technology at the operational level. Placing technologies like machine learning, OCR, and analytics directly into the hands of the business can accelerate the speed of automation projects to deployment and fiscal return. Blue Prism is also providing Blue Prism DX contributors with multiple informational content slots on their content upload pages to offer prospective content users video and text guidance on usage and deployment. The Forum area of Blue Prism DX also offers users peer-to-peer content sharing with respect to best practices in deploying Exchange capabilities and automations.

The second – organization by skill – categorizes the Blue Prism DX automation marketplace in the same way as the company’s existing training and automation thinking along the lines of six discrete skills: knowledge and insight, visual perception, learning, planning and sequencing, problem-solving, and collaboration. By organizing the Blue Prism DX along these same lines, Blue Prism is encouraging users to begin thinking of automation problems as collections of tasks in these areas, and – Blue Prism hopes – curating their own capabilities using these same categories.

Blue Prism DX visually looks similar to competing automation app stores, and perhaps that’s a sound decision given that many organizations have begun utilizing two or more automation vendors to address increasingly diverse challenges. The store does offer considerable detail on component authorship, supported Blue Prism releases (as not all will immediately be 6.4-capable), and dependencies.

Where Blue Prism has worked to differentiate its offering is very much ‘under the hood.’ The company has done considerable work in ‘wrapping’ third-party capabilities – like Microsoft Vision – in interface components that ease the process of integrating those capabilities directly into automation scripts. In the demonstration provided, the Blue Prism-customized Microsoft Vision block made the process of image analysis straightforward, with traditional UI components used to make the process of managing a complex task simple for the user.

At launch, Blue Prism DX contains a broad variety of assets, ranging from comprehensive task automation code (Avanade offers an entire intelligent help desk application that includes Blue Prism and Microsoft Cognitive Services software in a single package) to particulate technology components, such as connectors for ABBYY’s FlexiCapture and Cogito’s text analytics offering. Blue Prism has cast a wide net for Blue Prism DX content and intends to curate solutions of both types – and points between – as the content library expands. In doing so, Blue Prism offers filtering options for asset type (VBO/WebAPI/Solution) and industry relevance to assist users in finding the type of content best suited to their needs.

At the moment, Blue Prism has no near-term plans to monetize Blue Prism DX, but the environment is built on a platform capable of monetization, and contributors are free to charge for their content. At launch, Blue Prism expected the content on Blue Prism DX to be approximately 60/40 internally developed content to partner content but sees that evolving as more partners become involved in contributing automation capabilities and code.

On the roadmap for Blue Prism’s Digital Exchange offering are a number of improvements, including new tools for simplifying the collaboration process between human and digital workers, interactive user communities, and expansion of the ecosystem with a broader array of technological capability contributors. Blue Prism DX aims to be the first stop for clients seeking to complete existing automation projects, launch new ones, and keep pace with best practices in the automation technology sector.

UiPath held its 2018 UiPathForward event October 3-4, 2018, in Miami, Florida. The focus of proceedings was the October release of the company’s software and a related trio of major announcements: a new automation marketplace, new investment in partner technology and marketing, and a new academic alliance program.

The analyst session included a visit from CEO Daniel Dines and an update on the company’s performance and roadmap. UiPath has grown from a $1m ARR to a $100m ARR in just 21 months, and the company is trending toward a $140m ARR for 2018 en route to Dines’ forecasted $200m ARR in early 2019. UiPath is adding nearly six enterprise clients a day and has begun staking a public claim – not without defensible merit – to being the fastest-growing enterprise software company in history.

During the event, UiPath announced a new academic alliance program, consisting of three sub-programs – one aimed at training higher education students for careers in automation, another providing educators with resources and examples to utilize in the classroom setting, and the third focused on educating youth in elementary and secondary educational settings. UiPath has a stated goal of partnering with ~1k schools and training ~1m students on its RPA platform.

The centerpiece of the event, however, was Release 2018.3 (Dragonfly), which was built around the launch of UiPath Go!, the company’s new online automation marketplace. It would be easy to characterize Go! as a direct response to Automation Anywhere’s Bot Store, but that would be overly simplistic. Where currently the Bot Store skews more toward apps as automation task solutions, Go! is an app store for particulate task components – so while the former might offer a complete end-to-end document processing bot, Go! would instead offer a set of smaller, more atomic components like signature verification, invoice number identification, address lookup and correction, etc.

The specific goal of Go! is to accelerate adoption of RPA in enterprise-scale clients, and the component focus of the offering is intended to fill in gaps in processes to allow them to be more entirely automated. The example presented was the aforementioned signature verification: given that a human might take two seconds to verify a signature, is it really worth automating this phase of the process? Not in and of itself, but failing to do so creates an attended automation out of an unattended one, requiring human input to complete. With Go!, companies can automate the large, obvious task phases from their existing automation component libraries, and then either build new components or download Go! components to complete the task automation in toto.

Dragonfly is designed to integrate Go! components into the traditional UiPath development environment, providing a means for automation architects to combine self-designed automation components with downloaded third-party components. Given the increased complexity of managing project automation software dependencies for automations built from both self-designed and downloaded components, UiPath has also improved the dependency and library management tools in 2018.3. For example, automation tasks that reuse components already developed can include libraries of such components stored centrally, reducing the amount of rework necessary for new projects.

In addition, the new dependencies management toolset allows automation designers to point projects at specific versions of automations and task components, instead of defaulting to the most recent, for advanced debugging purposes. Dragonfly also moves UiPath along the Citrix certification roadmap, as this release is designated Ready for Citrix, another step toward becoming Certified for Citrix. Finally, Dragonfly also adds new capabilities in VDI management, new localization capabilities in multiple languages, and UI improvements in the Studio environment.
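Pointing a project at specific component versions rather than defaulting to the most recent can be illustrated with a small Python resolver. The manifest format, the component names, and the `resolve` function are hypothetical and do not reflect UiPath’s actual package or dependency format.

```python
from typing import Dict, List, Optional, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically, not as text."""
    return tuple(int(part) for part in version.split("."))


def resolve(manifest: Dict[str, Optional[str]],
            library: Dict[str, List[str]]) -> Dict[str, str]:
    """Pick one version per component.

    A pinned version (e.g. for debugging) is used exactly; None means "latest".
    """
    resolved: Dict[str, str] = {}
    for component, pinned in manifest.items():
        available = library[component]
        if pinned is None:
            resolved[component] = max(available, key=parse_version)
        elif pinned in available:
            resolved[component] = pinned
        else:
            raise LookupError(f"{component} {pinned} is not in the library")
    return resolved


if __name__ == "__main__":
    library = {"SignatureCheck": ["1.2.0", "1.10.0"], "AddressLookup": ["2.0.1", "2.1.0"]}
    manifest = {"SignatureCheck": "1.2.0", "AddressLookup": None}
    print(resolve(manifest, library))  # {'SignatureCheck': '1.2.0', 'AddressLookup': '2.1.0'}
```

Pinning `SignatureCheck` to 1.2.0 lets a designer reproduce a bug against the exact component a production automation used, even though 1.10.0 is newer.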

In the interest of spurring development of Go! components, UiPath has designated $20m for investment in its partners during 2019. The investment is split between two funds, the UiPath Venture Innovation Fund and the UiPath Partner Acceleration Fund. The first of these is aimed directly at the Go! marketplace by providing incentives for developers to build UiPath Go! components. In at least one instance, UiPath has lent developers directly to an ISV along with funding to support such development. UiPath expects that these investment dollars will enable the Go! initiative to populate the store faster than a more passive approach of waiting for developers to share their automation code.

The second fund is a more traditional channel support fund, aimed at encouraging partners to develop on the UiPath platform and support joint marketing and sales efforts. The timing of this latter fund’s rollout, on the heels of UiPath’s deal registration/marketing and technical content portal announcement, demonstrates the company’s commitment to improving channel performance. Partners are key to UiPath’s ability to sustain its ongoing growth rate and the strength of its partner sales channels will be vital in securing the company’s next round of financing. (UiPath's split of partner/direct deployments is approaching 50/50, with an organizational goal of reaching 100% partner deployments by 2020.) Accordingly, it is clear that the company’s leadership team is now placing a strong and increasing emphasis on channel management as a driver of continued growth.

I recently attended IPsoft’s Digital Workforce Summit in New York City, an intriguing event that in some ways represented a microcosm of the challenges clients are experiencing in moving from RPA to cognitive automation.

The AI challenge

Chetan Dube loomed large over proceedings. IPsoft’s president and CEO was onstage more than is common at events of this type, chairing several fireside chats himself in addition to his own technology keynote, and participating (with sleeves rolled up) at the analyst day that followed. He brought a clear challenge to the stage, while at the same time conveying the complexity and capability of IPsoft’s flagship cognitive products, Amelia and 1DESK, and making them understandable to the audience, in part by framing them in terms of commercial value and ROI.

RPA vendors have a simpler form of this challenge, but both robotic process automation and cognitive automation vendors have a hill to climb in gaining clients’ trust in the underlying technology and reassuring service buyers that automation will be both a net reducer of cost and a net creator of jobs (rather than a net displacer of them).

From a technological perspective, RPA sounds from the stage (and sells) much more like enterprise software than neuroscience or linguistics, so the overall pitch can sit much more squarely in the wheelhouse of IT buyers. The product does what it says on the tin, and the cavalcade of success stories that appear on event stages are designed to put clients’ concerns to rest. To be sure, RPA is by no means easy to implement, nor is it yet a mature offering in toto, but the bulk of the technological work needed to achieve a basic business result has been done. Overall, most vendors are currently working on incremental and iterative improvements to their core technology.

AI differs in that it is still at the start of the journey towards robust, reliable customer-facing solutions. While Amelia is compelling technology (and is performing competently in a variety of settings across multiple industries), the version that IPsoft fields in 2025 will likely make today’s version seem almost like ELIZA by comparison, if Dube’s roadmap comes to fruition. He was keen to stress that Amelia is about much more than just software development, and he spent a lot of time explaining aspects of the core technology and how it was derived from cognitive theory. The underlying message, broadly supported by the other presenters at the event, was clearly one of power through simplicity.

IPsoft’s vision

The messaging statements coming from the stage during the event portrayed a diverse and wide-ranging vision for the future of Amelia. Dube sees Amelia as an end-to-end automation framework, while Chief Cognitive Officer Edwin van Bommel sees Amelia as a UI component able to escape the bounds of the chatbox and guide users through web and mobile content and actions. Chief Marketing Officer Anurag Harsh focused on AI through the lens of the business, and van Bommel presented a mature model for measuring the business ROI of AI.

Digging deeper, some of what Dube had to say was best read metaphorically. At one point he announced that by 2025 we will be unable to pass an employee in the hallway and know if he or she is human or digital. That comment elicited some degree of social media protest. But consider that what he was really saying is that most interaction in an enterprise today is performed electronically – in that case, ‘the hallways’ can be read as a metaphor for ‘day-to-day interaction’.

The question discussed by clients, prospects, and analysts was whether Dube was conveying a visionary roadmap or fueling hype in an often overhyped sector. Listening to his words and their context carefully, I tend towards the former. Any enterprise technology purchase demands three forms of reassurance from the vendor community:

- That the product is commercially ready today and can take up the load it is promising to address

- That the company has a long-term roadmap to ensure that a client’s investment stays relevant, and the product is not overtaken by the competition in terms of capacity and innovation

- And perhaps most importantly, that the roadmap is portrayed realistically and not in an overstated fashion that might cause clients to leave in favor of competitors’ offerings.

I took away from the Digital Workforce Summit that Dube was underscoring the first and second of these points, and doing so through transparency of operation and vision.

There are only two ways of conveying that you sell a complex product which works simply from the user’s perspective – you either portray it as a black box and ask that clients trust your brand promise, or you open the box and let clients see how complex the work really is. IPsoft opted for the latter, showing the product’s operation at multiple levels in live demonstrations. Time and again, Dube reminded the audience that it is unnecessary to grasp advanced scientific principles in order to take advantage of technologies that use those principles; in Dube’s example, light switches work without the user needing to grasp Faraday’s principles of induction. Even so, it benefits all parties involved to see the complexity and grasp the degree to which IPsoft has worked to make that complexity accessible and actionable.

Conclusion

The challenge, of course, is that clients attend events of this kind to assess solutions. The majority of attendees at the Digital Workforce Summit were there to learn whether IPsoft’s Amelia, in its latest form, is up to the task of managing customer interactions, and whether it will continue to evolve apace to become a more complete conversational technology solution and fulfill the company’s ROI promises.

I came away with the sense that both are true. Now it is up to the firm’s technology group to translate Dube’s sweeping vision into fiscally rewarding operational reality for clients.


EdgeVerve, an Infosys Product subsidiary, this week announced a new blockchain-powered application for supply chain management as part of its product line. Nia Provenance is designed to address the challenges faced by organizations managing complex supply chain networks with multiple IT stacks engaged across multiple stakeholders. Here I take a quick look at the new application and its potential impact.

Supply chain traceability, transparency & trust

Nia Provenance is designed to provide traceability of products from source of origin to point of purchase, with full transparency at every point along the supply chain. The product establishes trust through the use of a version of Bitcore, the blockchain architecture used by Bitcoin. Traceability can be a relatively simple task in agribusiness and other supply environments in which a product only undergoes processing as it moves through the supply chain. By contrast, environments such as consumer electronics or medical devices are much more complex, involving integration and assembly of multiple components along the way. The ability to isolate a specific component and trace it to its source of origin, through phases of value addition timestamped on a blockchain ledger, is invaluable in the event of a recall or a consumer safety risk.
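
The sketch below makes the recall scenario concrete. It models each value-adding step as a timestamped ledger entry that lists the items it consumed, then walks those links back from a finished product or component to its origins. It is a minimal illustration under stated assumptions. The `LedgerEntry` schema, the `trace_to_origin` function, and the sample items are hypothetical and do not reflect Nia Provenance's actual data model or API. The "ledger" here is also a plain Python list, not a blockchain.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LedgerEntry:
    """One timestamped value-adding step (hypothetical schema, not Nia Provenance's)."""
    item_id: str                    # identifier of the item produced by this step
    stage: str                      # e.g. "sourced", "fabricated", "assembled"
    timestamp: datetime
    inputs: tuple[str, ...] = ()    # item_ids consumed or assembled at this step


def trace_to_origin(ledger: list[LedgerEntry], item_id: str) -> list[LedgerEntry]:
    """Follow input links back from item_id and return its full lineage, oldest first."""
    by_item = {entry.item_id: entry for entry in ledger}
    lineage: list[LedgerEntry] = []
    seen: set[str] = set()
    pending = [item_id]
    while pending:
        current = pending.pop()
        if current in seen or current not in by_item:
            continue  # already visited, or no record exists for this item
        seen.add(current)
        entry = by_item[current]
        lineage.append(entry)
        pending.extend(entry.inputs)
    return sorted(lineage, key=lambda entry: entry.timestamp)


def day(d: int) -> datetime:
    """Hypothetical sample dates."""
    return datetime(2019, 5, d, tzinfo=timezone.utc)


# Hypothetical sample ledger for an electronics supply chain.
ledger = [
    LedgerEntry("wafer-7", "sourced", day(1)),
    LedgerEntry("cap-9", "manufactured", day(2)),
    LedgerEntry("chip-42", "fabricated", day(3), ("wafer-7",)),
    LedgerEntry("board-1", "assembled", day(5), ("chip-42", "cap-9")),
    LedgerEntry("device-A", "final assembly", day(8), ("board-1",)),
]

for step in trace_to_origin(ledger, "device-A"):
    print(step.timestamp.date(), step.stage, step.item_id)
```

Tracing `device-A` returns every recorded step, from `wafer-7` through final assembly, in time order. Tracing `chip-42` alone returns just its sourcing and fabrication steps, which is the narrower lineage a component recall would need.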

Transparency in Nia Provenance is provided through proof of process as the product or commodity moves through the system – so attributes that must be agreed on at specific phases of the supply chain, such as conflict-free or locally-sourced, can be seen in the system as they are accumulated. Similarly, regulatory inspections and certifications are more easily tracked and audited through a blockchain solution like Nia Provenance.
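
The proof-of-process idea can be sketched as a check that each agreed attribute has been attested at every stage that is supposed to vouch for it. The `Attestation` record, the `required` mapping of attributes to stages, and the stage and participant names are all hypothetical illustrations, not Nia Provenance's data model. On a real blockchain, each attestation would be a signed ledger transaction rather than an in-memory object.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    """A claim recorded by a participant at one supply chain stage (hypothetical schema)."""
    stage: str
    attested_by: str
    attribute: str   # e.g. "conflict-free", "locally sourced"


def accumulated_attributes(records: list[Attestation]) -> dict[str, list[str]]:
    """Map each attribute to the stages at which it has been attested, in record order."""
    by_attribute: dict[str, list[str]] = {}
    for record in records:
        by_attribute.setdefault(record.attribute, []).append(record.stage)
    return by_attribute


def missing_attestations(records: list[Attestation],
                         required: dict[str, set[str]]) -> dict[str, set[str]]:
    """For each required attribute, return the stages that have not yet attested it."""
    seen = accumulated_attributes(records)
    gaps: dict[str, set[str]] = {}
    for attribute, stages in required.items():
        outstanding = stages - set(seen.get(attribute, []))
        if outstanding:
            gaps[attribute] = outstanding
    return gaps


# Hypothetical agreement: which stages must vouch for which attributes.
required = {
    "conflict-free": {"source", "processing", "assembly"},
    "locally sourced": {"source"},
}
records = [
    Attestation("source", "mine-operator", "conflict-free"),
    Attestation("source", "regional-inspector", "locally sourced"),
    Attestation("processing", "smelter", "conflict-free"),
]
print(missing_attestations(records, required))  # assembly has not yet attested conflict-free
```

As further attestations are recorded, the set of outstanding stages shrinks. An auditor or regulator could run the same check at any point to see exactly which claims are still unverified.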

Finally, trust in the system comes from a combination of data immutability, equal participation across the network (a result of decentralizing the overall SCM ledger), and cryptographic information security. Over time, the benefits of a blockchain SCM environment accrue both to the organizational bottom line, in the form of cost savings, and to the organization’s brand, as consumer trust in the brand promise grows.
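
Data immutability in a blockchain ledger rests largely on hash-linking. Each entry commits to the hash of the entry before it, so altering any recorded payload breaks verification from that point on. The sketch below shows only that mechanism, using SHA-256 over canonical JSON. It is a simplified illustration, not the record format of Bitcore, Bitcoin, or Nia Provenance. It also leaves out the decentralization and consensus that the text credits as further sources of trust.

```python
import copy
import hashlib
import json

GENESIS = "0" * 64  # placeholder predecessor hash for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def build_chain(payloads: list[dict]) -> list[dict]:
    """Append each payload as an entry linked to the hash of its predecessor."""
    chain, prev = [], GENESIS
    for payload in payloads:
        current = entry_hash(prev, payload)
        chain.append({"prev": prev, "payload": payload, "hash": current})
        prev = current
    return chain


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash; any edited payload or broken link fails verification."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry_hash(prev, entry["payload"]) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


chain = build_chain([
    {"lot": "lot-1", "step": "harvested", "certified": "organic"},
    {"lot": "lot-1", "step": "roasted"},
])
print(verify_chain(chain))       # True: the untouched chain verifies

tampered = copy.deepcopy(chain)
tampered[0]["payload"]["certified"] = "fair trade"
print(verify_chain(tampered))    # False: the edited payload no longer matches its stored hash
```

Recomputing the edited entry's hash does not hide the change either, because the next entry still points at the old hash. In a decentralized ledger, other participants hold copies of that chain and would reject the rewrite.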

Agribusiness client case

As one example of how Nia Provenance is being leveraged in the real world, a global agribusiness firm undertook a proof of concept for its coffee sourcing division in Indonesia to track the journey of coffee from the growing site, through the roasting plant, the blend manufacturer, the quality control operation, the logistics providers, and on to the importer. This enabled the trader to provide trusted accreditation and certification information to the importer for properties such as organic or fair trade status, or that the coffee was grown using sustainable agriculture standards.

Providing strategic blockchain reach

Nia Provenance provides Infosys with three important sources of strategic blockchain ‘reach’ in an increasingly competitive market, because:

- It is platform-agnostic and purpose-built to dock with multiple blockchain architectures. A supply chain solution that relies too heavily on the specific capabilities of one common blockchain architecture or another (for example, Ethereum or Hyperledger) would encounter difficulty working with other upstream or downstream architectures. By keeping the DLT in an abstraction layer, Nia Provenance eases the process of incorporating different blockchain architectures in a complex SCM task environment

- It is designed to benefit multiple supply chain stakeholders, not just the client. Blockchain adoption becomes more appealing to upstream and downstream stakeholders, as well as horizontal entities like banks, insurers and regulators, when the ecosystem is built with clear benefits for them as well as the organizing entity. Nia Provenance is designed from the ground up with a mindset inclusive of suppliers, inspectors, insurers, shippers, traders, manufacturers, banks, distributors, and end customers

- It is designed to span multiple industries. Although the platform has its origins in agribusiness, Nia Provenance looks to be up to the task of SCM applications in manufacturing, consumer goods/FMCG, food and beverage, and specialized applications such as cold-chain pharmaceuticals.
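
Keeping the DLT behind an abstraction layer is essentially an adapter pattern. Application logic talks to one interface, and each blockchain architecture gets its own adapter. The sketch below is a hypothetical illustration of that design choice, not EdgeVerve's implementation. `LedgerAdapter`, `InMemoryLedger`, and `ProvenanceService` are invented names. The in-memory class stands in for adapters that would, in practice, wrap the client libraries of platforms such as Ethereum or Hyperledger.

```python
from abc import ABC, abstractmethod


class LedgerAdapter(ABC):
    """Hypothetical interface that hides which blockchain architecture sits underneath."""

    @abstractmethod
    def record(self, item_id: str, event: dict) -> str:
        """Write an event for item_id and return a backend-specific reference."""

    @abstractmethod
    def history(self, item_id: str) -> list[dict]:
        """Return the events recorded for item_id on this backend."""


class InMemoryLedger(LedgerAdapter):
    """Stand-in backend; a real adapter would call a specific DLT's client library."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._events: dict[str, list[dict]] = {}

    def record(self, item_id: str, event: dict) -> str:
        events = self._events.setdefault(item_id, [])
        events.append(dict(event))
        return f"{self.name}:{item_id}:{len(events) - 1}"

    def history(self, item_id: str) -> list[dict]:
        return list(self._events.get(item_id, []))


class ProvenanceService:
    """Application layer: routes each stakeholder to its ledger and merges the views."""

    def __init__(self, adapters: dict[str, LedgerAdapter]) -> None:
        self.adapters = adapters

    def record(self, stakeholder: str, item_id: str, event: dict) -> str:
        return self.adapters[stakeholder].record(item_id, event)

    def full_history(self, item_id: str) -> list[dict]:
        """Combine events from every backend, ordered by their 'ts' field."""
        merged = [event for adapter in self.adapters.values()
                  for event in adapter.history(item_id)]
        return sorted(merged, key=lambda event: event["ts"])


# Hypothetical setup: the grower and the importer run different ledgers.
service = ProvenanceService({
    "grower": InMemoryLedger("ledger-a"),
    "importer": InMemoryLedger("ledger-b"),
})
service.record("importer", "lot-1", {"ts": 2, "step": "imported"})
service.record("grower", "lot-1", {"ts": 1, "step": "harvested"})
print([event["step"] for event in service.full_history("lot-1")])
```

Because `ProvenanceService` depends only on `LedgerAdapter`, supporting another blockchain architecture means writing one more adapter rather than changing application code. One limitation of this sketch: if two stakeholders shared a single adapter instance, `full_history` would return that adapter's events twice, so a fuller version would deduplicate.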

Summary

Supply chain provenance is a core application for blockchain, and one that we expect to be a clear value delivery vehicle for blockchain technology through 2025. The combination of – as Infosys puts it – traceability, transparency, and trust that blockchain provides is a compelling proposition. Nia Provenance offers a solution across a broad variety of industry applications for organizations seeking lower cost and greater security in their supply chain operations.


For all the discussion in the blockchain solution industry around platform selection (are they choosing Fabric or Sawtooth? Quorum or Corda?), you’d be forgiven for thinking that every provider’s first stop is the open-source infrastructure shelf. But the reality is that blockchain is more a concept than a fixed architecture, and the platforms mentioned do not encompass the totality of use case needs for solution developers. As a result, some solution developers have elected to start with a blank sheet of paper and build blockchain solutions from the ground up.

One such company is Symbiont, which started down this road much earlier than most. Faced with the task of building a smart contracts platform for the BFSI industry, the company examined what was available in prebuilt blockchain platform infrastructure and did not see its solution requirements represented in those offerings, so it built its own. Symbiont’s concerns centered on two areas, scalability and security, and for the firm’s target accounts in capital markets and mortgages, those were critical issues.

The company addressed these concerns with Symbiont Assembly, the company’s proprietary distributed ledger technology. Assembly was designed to address three specific demands of high-volume transactional processes in the financial services sector: fault tolerance, volume management, and security.

Supporting fault tolerance

Assembly addresses the first of these by applying a design called Byzantine Fault Tolerance (BFT). Some blockchain platforms allow only for node failure within a distributed ledger environment. Platforms using BFT broaden that definition to include the possibility of a node acting maliciously, and can control for actions taken by such nodes as well. Symbiont’s implementation of BFT is based on the BFT-SMaRt protocol.
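
The fault-tolerance guarantee comes down to simple arithmetic. PBFT-style protocols, including BFT-SMaRt in its Byzantine mode, need n ≥ 3f + 1 replicas to tolerate f arbitrarily faulty ones. They act only on a quorum of matching votes large enough that any two quorums share at least one honest replica. The sketch below computes those figures for a given cluster size. The quorum formula ceil((n + f + 1) / 2) is the general textbook form, which reduces to 2f + 1 when n = 3f + 1. It is an assumption here, not a description of Assembly's actual configuration.

```python
def bft_parameters(n: int) -> dict[str, int]:
    """Fault tolerance and quorum size for n replicas under the n >= 3f + 1 bound."""
    if n < 1:
        raise ValueError("need at least one replica")
    f = (n - 1) // 3              # largest f with n >= 3f + 1
    quorum = (n + f + 2) // 2     # integer form of ceil((n + f + 1) / 2)
    overlap = 2 * quorum - n      # fewest replicas that any two quorums must share
    return {"n": n, "f": f, "quorum": quorum, "min_overlap": overlap}


for n in (4, 7, 10):
    p = bft_parameters(n)
    # Safety: two quorums always share more than f replicas, hence at least one honest one.
    # Liveness: a quorum can still form while f replicas stay silent.
    print(p, "safe:", p["min_overlap"] > p["f"], "live:", p["quorum"] <= n - p["f"])
```

For example, a four-replica cluster tolerates one malicious node with a quorum of three. Any two quorums of three out of four share two replicas, so at least one of them is honest and two conflicting values can never both be committed.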

Volume management

In addressing the volume demands of financial services processing, the choice of the BFT-SMaRt protocol was again important, as it enables Assembly to consistently reach performance levels in the range of ~80k transactions per second.

This has two specific benefits, one obvious and one less so. First, it means that Assembly can manage the very high transaction volumes of specialized financial trading markets without scale concerns. Second, in lower-volume environments, the extra ‘headroom’ that BFT-SMaRt affords Assembly can be used to store related data on the ledger itself, for example scanned legal documents that support smart contracts, rather than in a centralized data store.

Addressing security concerns

The same BFT architecture that supports Assembly’s fault tolerance also provides an additional layer of security, in that malicious node activity is actively identified and quarantined, while ‘honest’ nodes can continue to communicate and transact via consensus. Add in encryption of data, whereby Assembly creates a private security ledger within the larger ledger, and the result is a robust level of security for applications with significant risk of malicious activity in high-value trading and exchange.
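
The sketch below gives a highly simplified view of how honest replicas can keep transacting despite a malicious one. A replica that sends two different votes for the same sequence number (equivocation) is flagged and its votes are ignored. A value is committed only when a quorum of the remaining replicas agrees on it. This is a single-observer sketch under stated assumptions. Real BFT protocols such as BFT-SMaRt rely on authenticated messages, multiple protocol phases, and view changes. The `decide` function and the replica names are hypothetical and not part of Assembly.

```python
from collections import defaultdict


def decide(votes: list[tuple[str, int, str]], n: int) -> tuple[dict[int, str], set[str]]:
    """votes: (replica_id, sequence_number, value) messages seen by one observer.

    Returns (decisions, flagged): decisions maps each sequence number to the value
    backed by a quorum of non-flagged replicas; flagged holds replicas caught
    voting for two different values at the same sequence number.
    """
    f = (n - 1) // 3                  # tolerated Byzantine replicas (n >= 3f + 1)
    quorum = (n + f + 2) // 2         # ceil((n + f + 1) / 2); 2f + 1 when n = 3f + 1

    first_vote: dict[tuple[str, int], str] = {}
    flagged: set[str] = set()
    for replica, seq, value in votes:
        key = (replica, seq)
        if key in first_vote and first_vote[key] != value:
            flagged.add(replica)      # equivocation detected: quarantine this replica
        first_vote.setdefault(key, value)

    # Count each non-flagged replica at most once per sequence number.
    support: dict[int, dict[str, set[str]]] = defaultdict(lambda: defaultdict(set))
    for (replica, seq), value in first_vote.items():
        if replica not in flagged:
            support[seq][value].add(replica)

    decisions: dict[int, str] = {}
    for seq, by_value in support.items():
        for value, replicas in by_value.items():
            if len(replicas) >= quorum:
                decisions[seq] = value
    return decisions, flagged


# Four replicas tolerate one Byzantine node; r4 tells different peers different things.
votes = [
    ("r1", 1, "tx-A"), ("r2", 1, "tx-A"), ("r3", 1, "tx-A"),
    ("r4", 1, "tx-A"), ("r4", 1, "tx-B"),
]
print(decide(votes, n=4))  # r4 is flagged; r1 to r3 still form a quorum for tx-A
```

Each non-flagged replica backs at most one value per sequence number, and any two quorums together exceed n. As a result, at most one value can reach quorum for a given slot, no matter how the flagged replica behaves.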

Advantages of building a bespoke blockchain platform

Building its own blockchain platform cost Symbiont many hours and R&D dollars that competitors did not have to spend, but ultimately this decision provides Symbiont with three strategic advantages over competitors:

- Assembly is purpose-built for BFT-relevant, high-volume environments. As a result, the platform has performance and throughput benefits for applications in these environments compared with broader-use blockchain platforms that are intended to be used across a variety of business DLT needs. To some degree this limits the flexibility of the platform in other use cases, but just as a Formula One engine is a bespoke tool for a specific job, so too is Assembly specifically designed to excel in its native use case environment. That provides real benefits to users electing to build their banking DLT applications on the Assembly architecture

- Symbiont can provide for third-party smart contract writing, should it elect to do so. While this is not in the roadmap for the moment, and Symbiont appears content to build client solutions on proprietary deliverables from the contract-writing layer through the complete infrastructure of the solution, the company could elect to allow clients to write their own smart contracts ‘at the top of the stack’. Symbiont does intend to keep the core Assembly platform proprietary to the company for the foreseeable future