Search posts by keywords:

Filter posts by author:

Related NEAT Reports

Other blog posts

posted on Oct 15, 2024 by Dominique Raviart

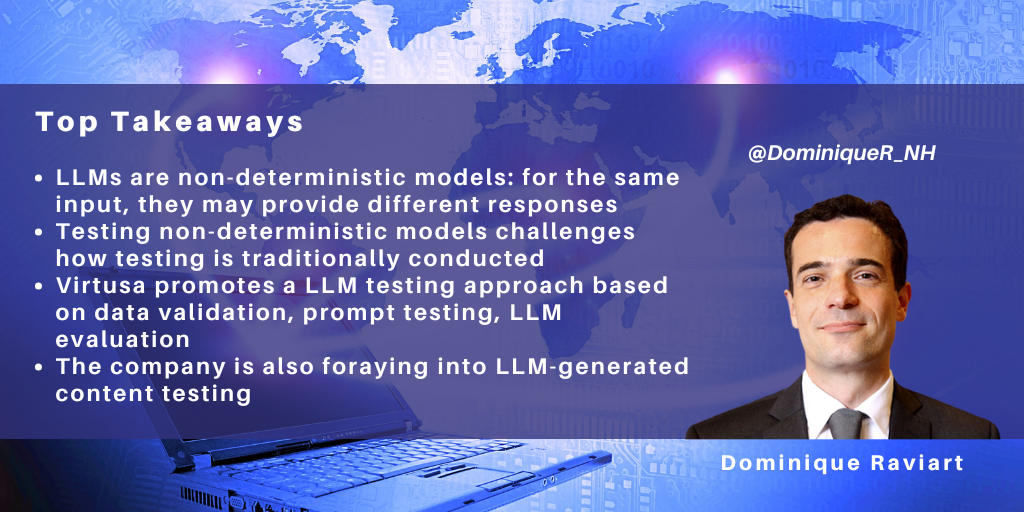

Virtusa recently briefed NelsonHall about how it conducts GenAI testing. With the emergence of LLMs, Virtusa has seen a rising interest in understanding how to validate them. However, testing LLMs is not easy, as traditional testing approaches are not relevant to LLMs. It requires reinventing software testing and looking beyond the output of a transaction.

Non-Deterministic LLMs Challenge How Testing Is Conducted

Welcome to the world of leading-edge technology and complexity! LLM testing is not easy and differs from testing other AI models: LLMs are non-deterministic (i.e., for the same input, they may provide different responses); other AI models, such as ML, provide the same output for the same input.

The non-deterministic nature of LLMs raises several challenges for testing/QE. The broad principle of functional testing is to validate that a specific transaction on a web application or website provides the intended result, e.g., ordering a good on a website and validating that payment has been processed and completed. However, with GenAI, the output is dynamic and can only be broadly defined. For example, testing a generated response to a question, summary, or picture under a traditional approach is not working, as there is no right or wrong answer. Also, several answers can be correct.

As part of its efforts to deal with this complexity, Virtusa has organized its capabilities under the Helio Assure Framework, which covers LLM data, prompts, models, and output.

Data Complexity

Data validation is a starting point for any LLM project. Virtusa offers traditional data validation services, such as checks around data integrity, consistency, and schema/models.

Virtusa also conducts statistical assessments specific to data used for training AI models; for example:

- Data outliers, i.e., identifying data that deviates from the rest of the dataset

- Data skewness review, e.g., detecting a data distribution asymmetry. Several statistical models indeed require normally distributed data.

Beyond data and distribution validation, both well-understood activities, Virtusa emphasizes two approaches:

- Data bias detection

- Unstructured data validation (going through semantic search, grammatic search, and context evaluation).

Of these two, data bias detection is the most difficult, mainly because bias identification varies across cultures and contexts and is challenging to automate. Virtusa continues to work on data bias detection.

Prompt Validation

For prompt validation, Virtusa relies on several approaches, including bias checks, toxicity analysis (e.g., obscenity, threats), and conciseness assessments (e.g., redundant word identification, readability). Virtusa highlights that prompt templatization, through a shared repository of standard prompts, also mitigates security threats.

Virtusa also uses adversarial attacks to identify PII and security breaches. Adversarial attack is the equivalent of pen-testing in security, initially developed in ML. The approach is technical and rapidly evolving as LLM vendors finetune their LLMs to protect them from hackers. Nevertheless, it includes methods such as prompt injection and direct attacks/jailbreaks.

LLM Accuracy Evaluation

For evaluating AI models such as LLMs, which is particularly challenging, Virtusa relies on a model accuracy benchmarking approach, creating first a baseline model. The baseline is an LLM whose training is augmented by a vector database/RAG approach relying on 100% reliable data (‘ground truth data’). It will evaluate the accuracy of LLMs vs. this baseline model.

The Roadmap is Creative Content Validation and LLMOps

Virtusa has worked on GenAI creative output/content validation, looking at three elements: content toxicity, its flow (e.g., readability, complexity, and legibility), and IP infringement (e.g., plagiarism or trademark infringement). Virtusa uses computer vision to identify content patterns present in an image or a video, classifying them into themes (clarity and coherence vs. the intent, blur detection, and sometimes assessing the relevancy of the images/video vs. its objectives. We think the relevancy of this offering for social media, education, marketing, and content moderation is enormous.

We think that GenAI is the next cloud computing and will have significant adoption: enterprises are still enthusiastic about what GenAI can bring, though recognizing they need to pay much closer attention to costs, IP violation, data bias, and toxicity. Governance and FinOps, to keep cost and usage under control, are becoming increasing priorities. GenAI vendors and other stakeholders are eager to move from T&M to a usage-based consumption model and want to monetize their investments.